ChatGPT may need 30,000 NVIDIA GPUs. Should PC gamers be worried?

ChatGPT's surge in popularity will require thousands of GPUs to meet demand.

What you need to know

- ChatGPT will require as many as 30,000 NVIDIA GPUs to operate, according to a report by research firm TrendForce.

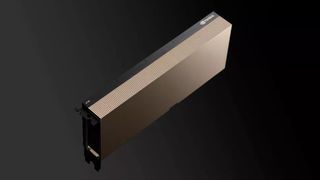

- Those calculations are based on the processing power of NVIDIA's A100, which costs between $10,000 and $15,000.

- It's unclear if gamers will be affected by so many GPUs being dedicated to running ChatGPT.

ChatGPT stormed onto the tech scene near the end of 2022. By January of this year, the tool reportedly had 100 million monthly users. It would only get more users in the following month, as Microsoft announced a new version of Bing powered by ChatGPT. With the technology receiving so much interest and real-world use, it's not surprising that companies stand to profit from ChatGPT's popularity.

According to research firm TrendForce, NVIDIA could make as much as $300 million in revenue thanks to ChatGPT (via Tom's Hardware). That figure is based on TrendForce's analysis that ChatGPT needs 30,000 NVIDIA A100 GPUs to operate. The exact amount NVIDIA stands to make will depend on how many graphics cards OpenAI needs and whether NVIDIA gives the AI company a discount for placing such a large order.
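As a rough sanity check on those numbers, here is a back-of-the-envelope sketch in Python. It assumes revenue is simply GPU count multiplied by unit price, which may not be how TrendForce arrived at its estimate. The `revenue_range` function and its `discount` parameter are hypothetical, added only to illustrate how a bulk discount would move the total.

```python
# Back-of-the-envelope check of the figures quoted in the article.
# Assumption: revenue = number of GPUs x unit price, minus any discount.
GPU_COUNT = 30_000        # A100 GPUs, per TrendForce's estimate
PRICE_LOW_USD = 10_000    # low end of the quoted A100 price range
PRICE_HIGH_USD = 15_000   # high end of the quoted A100 price range


def revenue_range(gpu_count, price_low, price_high, discount=0.0):
    """Return (low, high) revenue in USD after a fractional discount.

    `discount` is a hypothetical value between 0.0 and 1.0; the article
    does not say what discount, if any, OpenAI would receive.
    """
    factor = 1.0 - discount
    return gpu_count * price_low * factor, gpu_count * price_high * factor


low, high = revenue_range(GPU_COUNT, PRICE_LOW_USD, PRICE_HIGH_USD)
print(f"${low:,.0f} to ${high:,.0f}")
```

With no discount, the low end of the price range works out to $300 million, which lines up with the reported figure. At the top of the range, the same 30,000 GPUs would come to $450 million.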


ChatGPT uses a different type of GPU than what people put into their gaming PCs. An NVIDIA A100 costs between $10,000 and $15,000 and is intended to handle the demand ChatGPT places on systems. So, it may seem like gamers would be unaffected by such a large number of A100 GPUs being used to operate ChatGPT, but that's not entirely the case.

The global chip shortage was a shortage of individual chips, not of entire graphics cards. Because of that, we saw strange situations such as car companies and graphics card makers competing for the same components. Of course, most cars don't have gaming GPUs, Tesla being the exception, but chips are used in manufacturing, computing, and many other industries.

If NVIDIA prioritizes placing components within graphics cards meant to run ChatGPT, it could affect the availability of other GPUs.

Another factor in all of this is Microsoft and OpenAI's recently extended partnership. While ChatGPT may require processing power equivalent to 30,000 NVIDIA A100 GPUs, that power could come in the form of Microsoft's own Azure systems. If that's the case, the number of physical GPUs needed to run ChatGPT would be drastically different.


Sean Endicott brings nearly a decade of experience covering Microsoft and Windows news to Windows Central. He joined our team in 2017 as an app reviewer and now heads up our day-to-day news coverage. If you have a news tip or an app to review, hit him up at sean.endicott@futurenet.com.