YouTube ramps up campaign against AI slop — with stricter monetization caveats on inauthentic and repetitive videos

The updated monetization policies are designed to help better identify mass-produced and repetitive content as AI becomes more prevalent.

All the latest news, reviews, and guides for Windows and Xbox diehards.

Over the past few months, YouTube's user experience has seemingly been on a downward spiral. Google recently ramped up its aggressive campaign against ad blockers by intentionally slowing down YouTube videos and even preventing playback for users with the extensions installed on their devices.

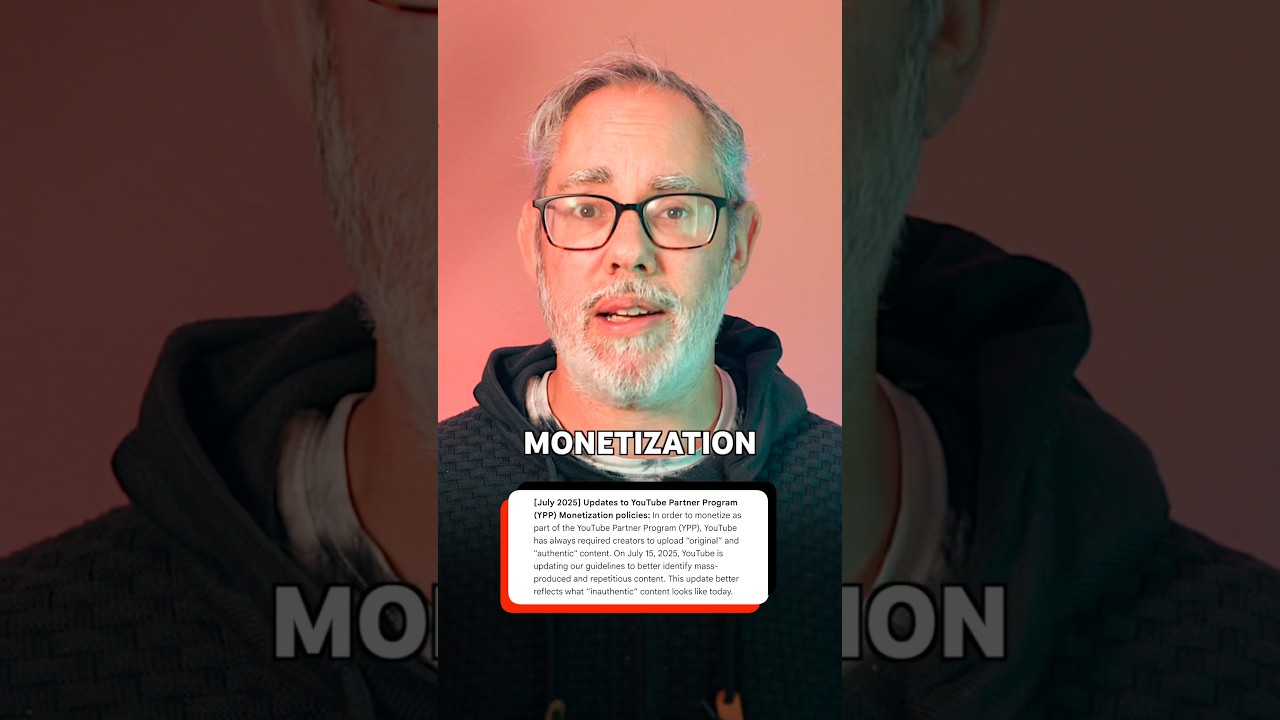

More recently, the video-sharing platform announced its plans to update its monetization policies. The new policies feature stricter restrictions, predominantly centered on "inauthentic content" under the YouTube Partner Program guidelines.

The news was quickly picked up by creators around the world, but it was misconstrued to mean that the platform was preparing to demonetize a wide range of videos, including AI-generated content, clips, and reactions (via The Verge).

However, YouTube Head of Editorial & Creator Liaison Rene Ritchie recently dismissed these claims via a video update. The executive explained that the new monetization policies were just a "minor update" to YouTube's existing Partner Program guidelines.

Ritchie added that the updated policies are designed to better identify mass-produced or repetitive content, noting that this type of content has been ineligible for monetization for years because viewers often consider it spam. He also pointed out that uploading original and authentic content has always been a requirement for creators on YouTube.

According to YouTube:

“On July 15, 2025, YouTube is updating our guidelines to better identify mass-produced and repetitious content. This update better reflects what ‘inauthentic’ content looks like today.”

That said, it is still unclear exactly what the updated policies will entail and which types of content will remain eligible for monetization. AI-generated content is becoming an increasingly significant concern, especially with the emergence of sophisticated tools like OpenAI's Sora and Google's Veo.

Last year, in the run-up to the US presidential election, Elon Musk's Grok, which he often touts as the most powerful AI in the world, was spotted spreading misinformation. Grok generated and spread "false information on ballot deadlines" following President Biden's withdrawal from the race for the White House.

Kevin Okemwa is a seasoned tech journalist based in Nairobi, Kenya with lots of experience covering the latest trends and developments in the industry at Windows Central. With a passion for innovation and a keen eye for detail, he has written for leading publications such as OnMSFT, MakeUseOf, and Windows Report, providing insightful analysis and breaking news on everything revolving around the Microsoft ecosystem. While AFK and not busy following the ever-emerging trends in tech, you can find him exploring the world or listening to music.
