The human cost behind ChatGPT is worse than you think

TIME has done an exposé on the human labor from Kenya that’s used to make OpenAI’s ChatGPT safe.



What you need to know

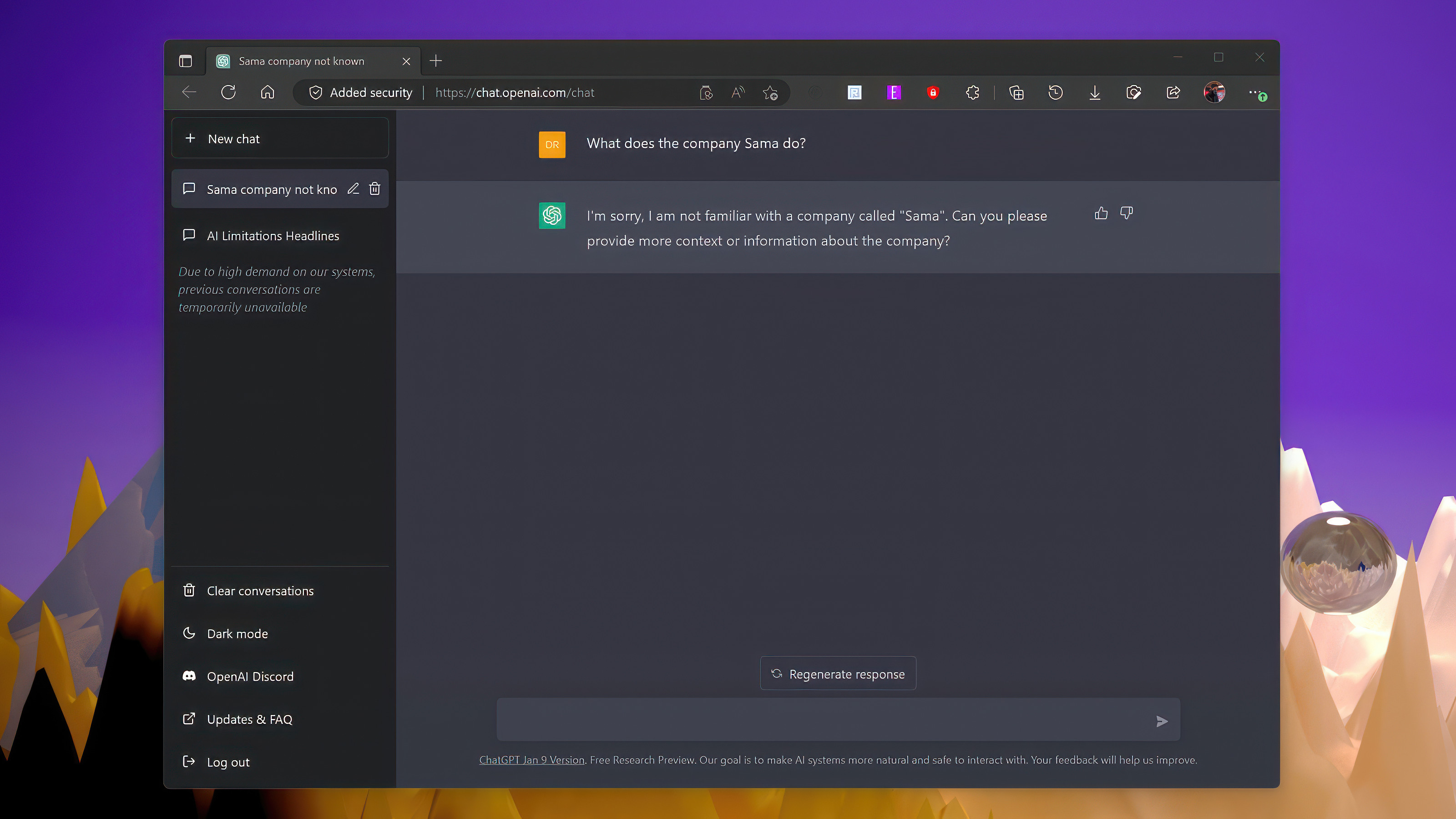

- TIME detailed how a San Francisco company called Sama helped build a safety system for ChatGPT.

- Sama employees, based in Kenya, reportedly made between around $1.32 and $2 per hour, depending on seniority and performance.

- Sama was later tasked with labeling explicit images for an unknown OpenAI project but pulled out of the contract due to legality concerns and severed ties with OpenAI.

- The story continues to highlight the human impact behind the nascent AI revolution.

A complicated story from TIME details the work between OpenAI, the company behind ChatGPT, and Sama, a San Francisco-based firm that employs people in Kenya. Sama works with some of the biggest companies in the tech industry, including Google, Meta, and Microsoft, on labeling images and text for explicit content.

Microsoft has already invested $1 billion in OpenAI, with a possible $10 billion more on the way. Microsoft plans to put AI into everything and will reportedly leverage ChatGPT with Bing.

Sama is based in San Francisco, but the work is performed by workers in Kenya, who earn between around $1.32 and $2 per hour. ChatGPT is trained on a lot of data from the internet, all of it unfiltered, so keeping it “safe” for users means removing harmful content from that data. Instead of using humans to filter out all the bad stuff, OpenAI (and companies like Meta with Facebook) employs other AI tools to remove that content from the data pool automatically.

But to train those safety tools, humans are needed to index and label content.

As in the 2019 story on Facebook from The Verge, which highlighted the psychological impact of such content on workers, Sama employees suffered a similar fate:

“One Sama worker tasked with reading and labeling text for OpenAI told TIME he suffered from recurring visions after reading a graphic description of a man having sex with a dog in the presence of a young child. “That was torture,” he said. “You will read a number of statements like that all through the week. By the time it gets to Friday, you are disturbed from thinking through that picture.” The work’s traumatic nature eventually led Sama to cancel all its work for OpenAI in February 2022, eight months earlier than planned.”

The overall contract with Sama was worth $200,000, and it stipulated that OpenAI would pay “an hourly rate of $12.50 to Sama for the work, which was between six and nine times the amount Sama employees on the project were taking home per hour.”


Later, Sama began to pilot a new project for OpenAI unrelated to ChatGPT. This time, instead of text, the work involved imagery, including some that is illegal under US law, such as depictions of child sexual abuse, bestiality, rape, sexual slavery, and death and violence. Again, workers were to view and label the content so that OpenAI’s systems could filter out such things.

Sama, however, canceled all work with OpenAI soon after. The company says it did so out of concern about the content (and legal issues). However, employees were told that TIME’s earlier investigation into Sama and Facebook, where Kenyan employees were used as moderators, prompted the turnaround due to the bad PR the company was receiving.

Of course, while Sama is out of the picture for OpenAI, the work must continue, and it’s unclear who else is doing it.

Windows Central’s Take

The Sama story is, unfortunately, not unusual. Content moderation on Facebook faced similar complaints a few years ago, when moderators had to view horrific imagery daily to remove it from the site. The goal, of course, is to spare humans that kind of content and have AI and machine learning (ML) handle it instead, but to train those systems, humans have to be part of the initial labeling of data.

The other issue is the focus on pay. While $1.32 per hour is terrible for anyone in an industrialized market, for those in emerging economies like Kenya, it is pretty decent, falling around a secretary or school teacher’s pay level. TIME even notes that now that Sama has cut ties with OpenAI, some of its employees have been left without jobs. From the same article:

“… one Sama employee on the text-labeling projects said. “We replied that for us, it was a way to provide for our families.” Most of the roughly three dozen workers were moved onto other lower-paying workstreams without the $70 explicit content bonus per month; others lost their jobs. Sama delivered its last batch of labeled data to OpenAI in March, eight months before the contract was due to end.”

So, the issue cuts both ways. On the one hand, no human should have to view such content daily (even though Sama claims psychological resources were offered to employees); on the other, some people would prefer to do that work rather than nothing.

The more significant issue is that it is not clear what the alternative to all this is if AI is to have a future. Unfortunately, at least initially, humans need to be a part of this training process. Still, the impact on those individuals (and society at large) is something that can’t be measured or ignored.

Daniel Rubino is the Editor-in-Chief of Windows Central. He is also the head reviewer, podcast co-host, and lead analyst. He has been covering Microsoft since 2007, when this site was called WMExperts (and later Windows Phone Central). His interests include Windows, laptops, next-gen computing, and wearable tech. He has reviewed laptops for over 10 years and is particularly fond of Qualcomm processors, new form factors, and thin-and-light PCs. Before all this tech stuff, he worked on a Ph.D. in linguistics studying brain and syntax, performed polysomnographs in NYC, and was a motion-picture operator for 17 years.