Meet the Orb: Sam Altman's Tools for Humanity pays you $42 in crypto to become a verified human before an AGI apocalypse

While AGI may bring big gains in education, health, and computing, it could also usher in a deep identity crisis for humanity.


Generative AI is gaining broad adoption across the world. As a result, it's becoming increasingly difficult to tell real content from AI-generated content. Last year, former Twitter CEO Jack Dorsey said it will be impossible to tell what's real from what's fake within the next 5-10 years "because of the way images are created, deep fakes, and videos."

The problem is growing as models become more advanced. As we move into the agentic AI era, models are more capable than ever and can handle repetitive tasks with little or no human intervention.

While this seems great on paper, the AI revolution also ships with its fair share of challenges. Key stakeholders in the AI landscape, including OpenAI CEO Sam Altman (who has arguably contributed to the problem himself), predict a paradigm shift for humanity once we reach AGI (artificial general intelligence, an AI system that surpasses human cognitive capabilities).

The executive says that as we edge closer to AGI, it's becoming increasingly important to have robust systems in place that make it easy to tell humans and AI agents apart.

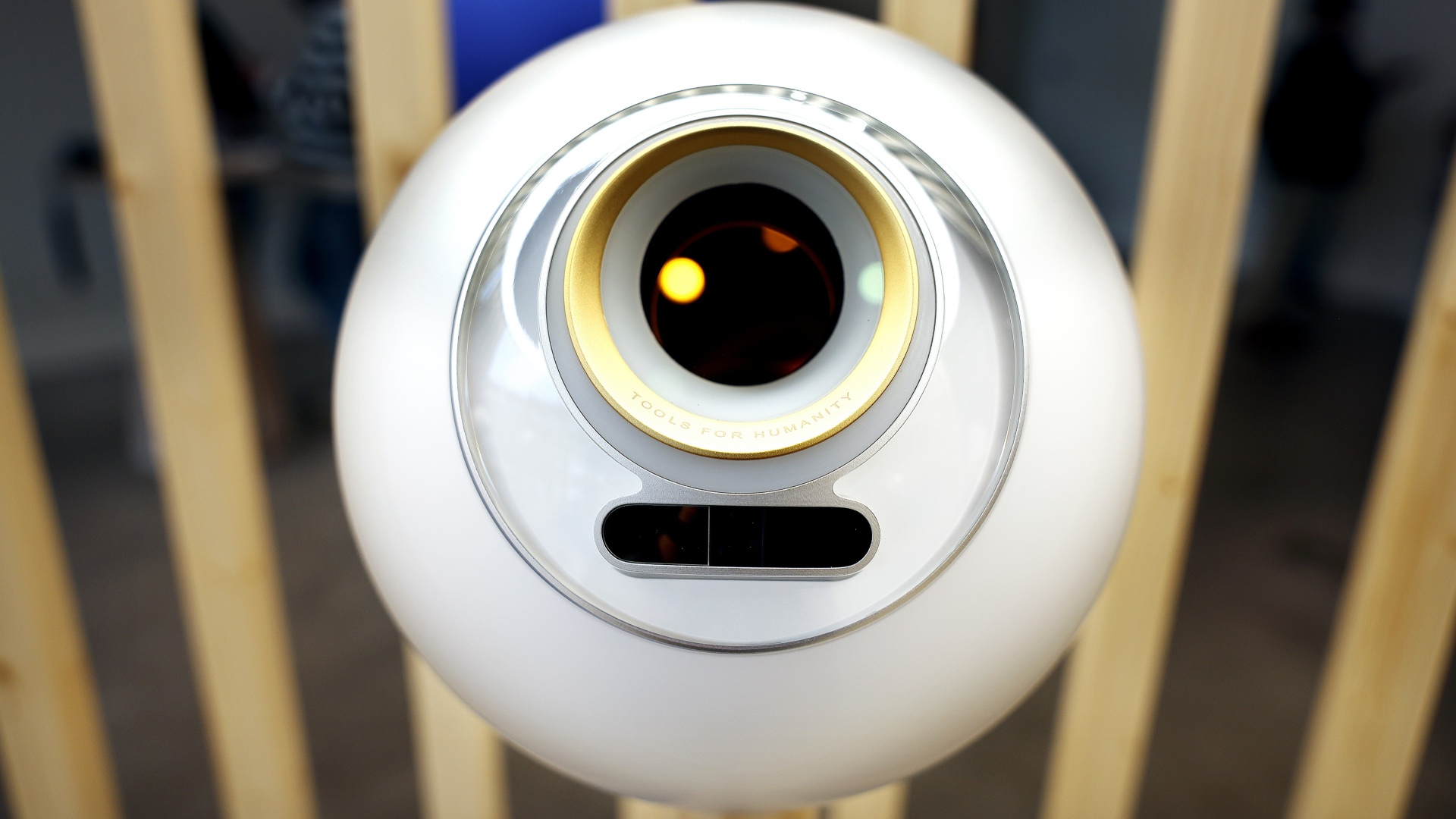

Enter Tools for Humanity's Orb (via TIME).

For context, the Orb is a next-generation device designed to identify and authenticate humans in the AGI era. It's essentially a spherical device with a camera lodged in its center.

The premise is simple: you look into the camera and let the device capture the unique features of your iris, including its ciliary zone. The device then converts these features into a 12,800-digit binary number, known as an iris code, which is used to verify your humanity and is sent to an app on your phone.


But this isn't just a simple registration process; you get paid, too.

Well, sort of. People who register receive cryptocurrency called Worldcoin: each verified user gets $42 worth of it in their digital wallet as compensation for becoming a verified human.

Why does the Orb matter?

According to OpenAI CEO Sam Altman:

“We needed some way for identifying, authenticating humans in the age of AGI. We wanted a way to make sure that humans stayed special and central.”

The executive seemingly has it all figured out. For context, he co-founded Tools for Humanity in 2019, envisioning a future in which the company would help preserve humanity once OpenAI developed advanced AI models, marking the end of one era of the Internet.

At that point, it could become nearly impossible to tell what's real from what's fake.

Tools for Humanity's Orb seems like the perfect solution to this looming danger to humanity. The company hopes to register up to 50 million people by the end of this year, but that goal looks like a stretch, especially since it has only managed to register 12 million people since mid-2023.

That said, the Orb is currently rolling out in the United States, with plans to set up approximately 7,500 stations across American cities over the next 12 months. It will be interesting to see whether this helps the company reach its goal before the year ends.

Humanity isn't the only thing at stake with the rapid emergence and adoption of AI across the world. It could also change how the internet works. Until now, the common assumption has been that the information and ads shared on the internet are consumed by humans. However, that may no longer hold true in the AI era.

Speaking to TIME, Tools for Humanity CEO Alex Blania said:

“The Internet will change very drastically sometime in the next 12 to 24 months. So we have to succeed, or I’m not sure what else would happen.”

AI is undoubtedly advancing at a rapid rate, creating an urgent need for robust measures to keep it from spiraling out of control while preserving humanity.

While Tools for Humanity has categorically stated that it doesn't store biometric data collected through its Orbs, adding that iris images are deleted after verification, trust remains a major concern for many users and AI critics.

Kevin Okemwa is a seasoned tech journalist based in Nairobi, Kenya with lots of experience covering the latest trends and developments in the industry at Windows Central. With a passion for innovation and a keen eye for detail, he has written for leading publications such as OnMSFT, MakeUseOf, and Windows Report, providing insightful analysis and breaking news on everything revolving around the Microsoft ecosystem. While AFK and not busy following the ever-emerging trends in tech, you can find him exploring the world or listening to music.
