This is what happened when Boston Dynamics' robots started to speak, powered by ChatGPT

A group of engineers leveraged ChatGPT's capabilities to create a robot tour guide.


What you need to know

- A group of Boston Dynamics engineers recently used generative AI to turn the company's Spot robot into a tour guide for its premises.

- The robot relies on large AI models trained on massive datasets. The development team admits that while the result is impressive, it ran into several issues, including episodes of hallucination.

- The robot uses OpenAI's GPT-4 model, and the development team employed prompt engineering techniques to keep its responses under control.

Generative AI is reshaping how we go about our day-to-day activities, from helping students solve complex math problems to writing poems and generating images from text prompts. Based on these examples, the future looks bright, as the technology promises to help users explore new avenues and untapped opportunities.

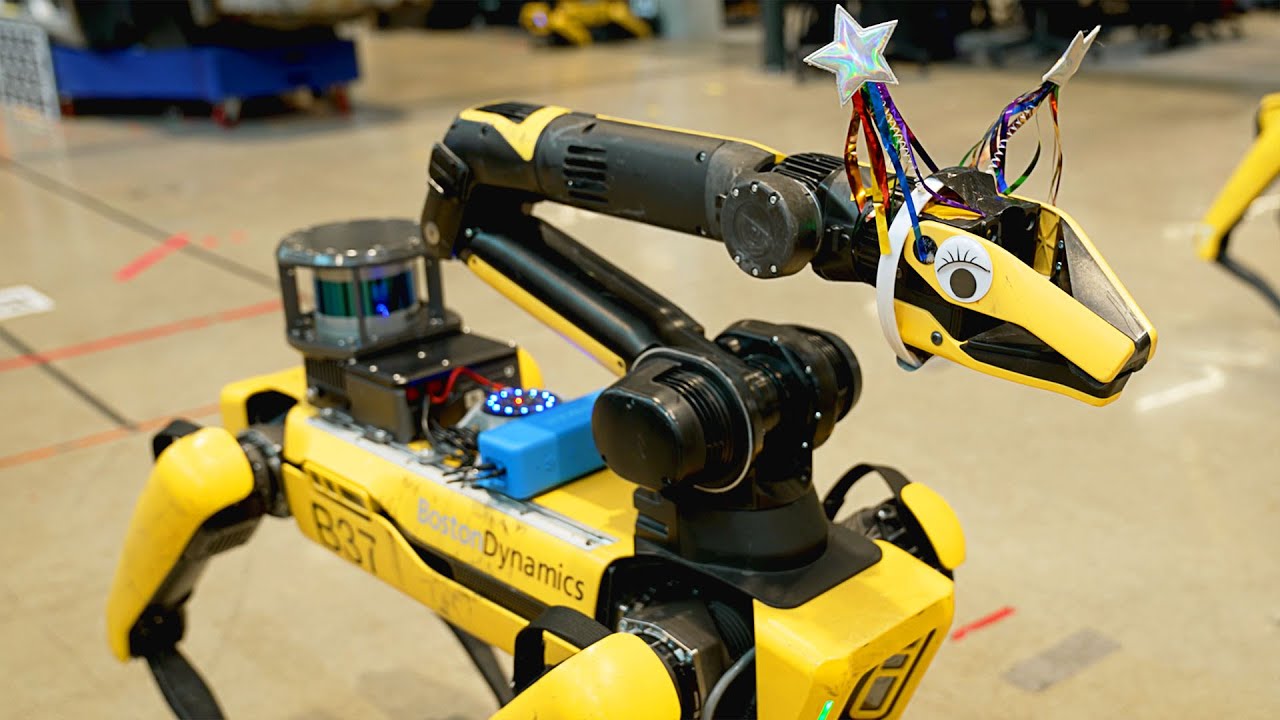

Speaking of untapped opportunities, Boston Dynamics recently documented what it achieved by applying generative AI to robotics. The company turned its Spot robot into a tour guide by integrating it with ChatGPT and other AI models.

The American engineering and design company specializes in developing robots, including its famed dog-like "Spot." Its robots are designed to make work easier by taking on repetitive, dangerous, and complex tasks, boosting productivity while keeping users safe.

"In particular, we were interested in a demo of Spot using Foundation Models as autonomy tools—that is, making decisions in real-time based on the output of FMs. Large Language Models (LLMs) like ChatGPT are basically very big, very capable autocomplete algorithms; they take in a stream of text and predict the next bit of text. We were inspired by the apparent ability of LLMs to roleplay, replicate culture and nuance, form plans, and maintain coherence over time, as well as by recently released Visual Question Answering (VQA) models that can caption images and answer simple questions about them."

Matt Klingensmith, Principal Software Engineer
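To make the "very big autocomplete" comparison concrete, here is a deliberately tiny sketch: a bigram model that counts which word follows which in a sample text and then greedily extends a prompt one word at a time. The corpus and function names are invented for illustration, and real LLMs use neural networks over subword tokens with far longer context; the sketch only shows the predict-the-next-piece loop the quote describes.

```python
from collections import Counter, defaultdict
from typing import Dict


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for every word, how often each other word follows it."""
    words = text.lower().split()
    table: Dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        table[current][following] += 1
    return table


def autocomplete(table: Dict[str, Counter], prompt: str, max_new_words: int = 5) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        if not words:
            break
        candidates = table.get(words[-1])
        if not candidates:
            break  # no known continuation, so stop generating
        next_word, _count = candidates.most_common(1)[0]
        words.append(next_word)
    return " ".join(words)


corpus = "the robot walks to the lab . the robot walks to the door ."
model = train_bigrams(corpus)
print(autocomplete(model, "the robot", max_new_words=3))  # → the robot walks to the
```

Greedy selection always picks the single most likely next word; production LLMs usually sample from the predicted distribution instead, which is part of why their answers vary.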

The emergence of large AI systems trained on massive datasets sparked the interest of Matt Klingensmith, a Principal Software Engineer, mainly because of the emergent behavior these models display. Emergent behavior refers to an AI model's ability to perform tasks that go beyond what it was explicitly trained on.

Matt saw this as a great opportunity, and the project kicked off earlier this year, in the summer, with the aim of exploring its impact on robotics development.

How does the robot tour guide work?

The software engineer disclosed that building a robot tour guide was the easiest and fastest way to test this idea. Essentially, the robot walks around the company premises and looks at objects.

It uses a Visual Question Answering (VQA) model, which can also caption images, to describe the objects within its view, and then elaborates on that description using a large language model (LLM). Through the LLM, the robot can also answer questions from its audience and even plan its next actions.
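The flow described above (a vision model describes what the robot sees, and an LLM turns that plus the visitor's question into a reply and a next step) can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not Boston Dynamics' code: `TourGuidePipeline`, the prompt wording, and the five-caption history limit are all hypothetical, and the two models are plain callables so dummy functions can stand in for BLIP-2 and GPT-4.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional

# Hypothetical stand-ins: a captioning/VQA model (the team used BLIP-2) and an
# LLM (the team used GPT-3.5, later GPT-4). Any callables with these shapes work.
CaptionFn = Callable[[bytes], str]
LlmFn = Callable[[str], str]


@dataclass
class TourGuidePipeline:
    caption: CaptionFn
    llm: LlmFn
    max_captions: int = 5
    recent_captions: List[str] = field(default_factory=list)

    def observe(self, image: bytes) -> None:
        """Caption the latest camera frame and keep only a short rolling history."""
        self.recent_captions.append(self.caption(image))
        self.recent_captions = self.recent_captions[-self.max_captions:]

    def build_prompt(self, question: Optional[str] = None) -> str:
        """Combine what the robot has seen with the visitor's question, if any."""
        lines = ["You are a friendly robot tour guide."]
        lines += [f"Camera sees: {text}" for text in self.recent_captions]
        if question:
            lines.append(f"Visitor asks: {question}")
        lines.append("Reply to the visitor, then state your next action.")
        return "\n".join(lines)

    def respond(self, question: Optional[str] = None) -> str:
        """Send the combined prompt to the LLM and return its reply."""
        return self.llm(self.build_prompt(question))


# Usage with dummy models so the sketch runs offline.
guide = TourGuidePipeline(
    caption=lambda image: "a yellow robot arm on a workbench",
    llm=lambda prompt: f"(reply to a {len(prompt.splitlines())}-line prompt)",
)
guide.observe(b"fake-jpeg-bytes")
print(guide.respond("What is that?"))  # → (reply to a 4-line prompt)
```

Keeping only a short rolling window of captions is one simple way to stop the prompt from growing without bound as the tour goes on.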


While LLM-powered chatbots like Bing Chat have faced their fair share of setbacks, including hallucination episodes, this was not a major concern for the robot's development team, which was more focused on the entertainment and interactive aspects. Besides, Spot's autonomy SDK already handled the robot's ability to walk around. Boston Dynamics uses the Spot SDK to support the development of autonomous navigation behaviors for the Spot robot.

For communication, the team 3D printed a vibration-resistant mount for a Respeaker V2 speaker, which includes a ring-array microphone with LEDs, and fitted it to the robot tour guide. This lets the robot listen to its audience and respond to their questions.

Building on this setup, the team integrated OpenAI's ChatGPT API, starting with the GPT-3.5 model and later moving to GPT-4 once it became generally available, to further improve the robot's conversational skills. To keep the robot from spiraling out of control or giving distasteful responses, the team used prompt engineering techniques.

According to the robot's development team:

"Inspired by a method from Microsoft, we prompted ChatGPT by making it appear as though it was writing the next line in a Python script. We provided English documentation to the LLM in the form of comments. We then evaluated the output of the LLM as though it were Python code."

The team also revealed that the LLM had access to the Spot autonomy SDK, a detailed map of the tour site with a one-line description of each location, and the ability to say things and ask questions.
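The Python-script prompting method and the tour map described above can be combined in a short sketch. This is a minimal illustration under our own assumptions, not the team's code: the functions `say`, `go_to`, and `ask`, the `TOUR_MAP` entries, and the helpers `build_prompt` and `parse_action` are all hypothetical, and the actual LLM call is left out. The sketch shows how documentation becomes Python comments in the prompt and how one generated line could be checked before the robot acts on it. Where the team "evaluated the output of the LLM as though it were Python code," this version deliberately parses the line with Python's `ast` module and an allowlist rather than running it through `eval()`.

```python
import ast
from typing import Dict, List, Tuple

# Hypothetical robot "API" and tour map, documented to the LLM as Python
# comments. Names and descriptions are invented for illustration.
API_DOCS = """\
# Spot tour guide control script.
# Call exactly one of these functions per line:
#   say(text)        - speak text aloud to the visitors
#   go_to(location)  - walk to a named location on the tour map
#   ask(question)    - ask the visitors a question and wait for a reply"""

TOUR_MAP: Dict[str, str] = {
    "lobby": "Main entrance where visitors check in.",
    "it_help_desk": "Desk where employees get help with their computers.",
    "robot_lab": "Test area where Spot robots practice walking.",
}

ALLOWED_FUNCTIONS = {"say", "go_to", "ask"}


def build_prompt(history: List[str], tour_map: Dict[str, str]) -> str:
    """Lay out docs, map, and earlier actions as a Python script for the LLM to continue."""
    map_lines = [f"#   {name}: {desc}" for name, desc in tour_map.items()]
    parts = [API_DOCS, "# Tour locations:", *map_lines, *history]
    return "\n".join(parts) + "\n"


def parse_action(llm_line: str, tour_map: Dict[str, str]) -> Tuple[str, str]:
    """Turn one generated line such as say("Hi") into ("say", "Hi").

    Instead of calling eval() on model output, this parses it with the ast module
    and accepts only one allowlisted call with a single string-literal argument.
    Raises SyntaxError for non-Python text and ValueError for anything unexpected.
    """
    call = ast.parse(llm_line.strip(), mode="eval").body
    if not (isinstance(call, ast.Call) and isinstance(call.func, ast.Name)):
        raise ValueError(f"not a simple function call: {llm_line!r}")
    name = call.func.id
    if name not in ALLOWED_FUNCTIONS:
        raise ValueError(f"function not allowed: {name}")
    if (
        len(call.args) != 1
        or call.keywords
        or not isinstance(call.args[0], ast.Constant)
        or not isinstance(call.args[0].value, str)
    ):
        raise ValueError("expected exactly one string argument")
    argument = call.args[0].value
    if name == "go_to" and argument not in tour_map:
        raise ValueError(f"unknown location {argument!r}")  # reject invented places
    return name, argument


history = ['say("Welcome to Boston Dynamics!")']
prompt = build_prompt(history, TOUR_MAP)
# Pretend the LLM completed the script with this line:
print(parse_action('go_to("lobby")', TOUR_MAP))  # → ('go_to', 'lobby')
```

Rejecting `go_to` targets that are not on the map is one cheap guard against the kind of made-up answers the team reported, although it cannot catch hallucinations in what the robot says.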

Text-to-speech conversion

While the robot relies heavily on ChatGPT for communication, the chatbot only produces text. That's why the development team used the cloud service ElevenLabs as a text-to-speech tool.

The team also fed images from the robot's gripper camera and front body camera into BLIP-2, which helps the robot interpret what it sees and provide context. According to the team, BLIP-2 processed the images "either in visual question answering mode (with simple questions like “what is interesting about this picture?”) or image captioning mode" at least once a second.
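The once-a-second vision loop described above can be sketched as a simple scheduler that cycles through both cameras and both BLIP-2 modes. This is a hypothetical illustration: `run_perception`, `grab_frame`, and `vision_model` are invented names, the strict round-robin order is our own assumption, and dummy functions replace the real cameras and model so the example runs anywhere.

```python
import itertools
import time
from typing import Callable, Iterator, List, Optional, Tuple

CAMERAS = ("gripper", "front_body")  # the two cameras named in the article
VQA_QUESTION = "what is interesting about this picture?"  # example question quoted by the team


def request_schedule() -> Iterator[Tuple[str, Optional[str]]]:
    """Cycle through every (camera, question) pair; question=None means captioning mode."""
    modes = (None, VQA_QUESTION)
    return itertools.cycle(itertools.product(CAMERAS, modes))


def run_perception(
    grab_frame: Callable[[str], bytes],                   # hypothetical camera reader
    vision_model: Callable[[bytes, Optional[str]], str],  # hypothetical BLIP-2 wrapper
    n_iterations: int,
    period_s: float = 1.0,
) -> List[str]:
    """Run one vision query per period and return labelled text for the LLM.

    With period_s <= 1.0 this matches the roughly once-a-second rate the team
    described, as long as each model call finishes within the period.
    """
    results: List[str] = []
    schedule = request_schedule()
    for _ in range(n_iterations):
        started = time.monotonic()
        camera, question = next(schedule)
        text = vision_model(grab_frame(camera), question)
        mode = "caption" if question is None else "vqa"
        results.append(f"[{camera}/{mode}] {text}")
        # Sleep for whatever remains of the period (no sleep if the call ran long).
        time.sleep(max(0.0, period_s - (time.monotonic() - started)))
    return results


# Usage with dummy functions so the sketch runs without a GPU or a robot.
outputs = run_perception(
    grab_frame=lambda camera: camera.encode(),
    vision_model=lambda image, q: "a robot dog" if q is None else "it has an arm",
    n_iterations=4,
    period_s=0.0,
)
print("\n".join(outputs))
```

Labelling each line with its camera and mode gives the LLM a hint about where a description came from when the text is folded into its prompt.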

Life-like conversations

The team also wanted to give the audience a life-like experience while interacting with the robot during the tour. To that end, they added some default body language. Thanks to the Spot 3.3 release, the robot can point its arm toward the nearest person while explaining a particular concept.
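Pointing the arm at the nearest person reduces to two small geometric steps: pick the closest detected person, then compute the angle to turn toward them. The sketch below is a hypothetical illustration; it assumes person positions are already available as (x forward, y left) coordinates in metres in the robot's body frame, and it does not use or reproduce any Spot SDK call for detection or arm control.

```python
import math
from typing import Optional, Sequence, Tuple

# Assumed convention: (x forward, y left) in metres, in the robot's body frame.
Point = Tuple[float, float]


def nearest_person(people: Sequence[Point]) -> Optional[Point]:
    """Return the detected person closest to the robot, or None if nobody is seen."""
    if not people:
        return None
    return min(people, key=lambda p: math.hypot(p[0], p[1]))


def arm_yaw_degrees(target: Point) -> float:
    """Yaw angle from straight ahead to the target, positive to the left."""
    return math.degrees(math.atan2(target[1], target[0]))


# Usage with made-up detections.
detections = [(3.0, 0.0), (1.0, 1.0), (-4.0, 2.0)]
target = nearest_person(detections)
if target is not None:
    print(f"Point arm at {target}, yaw {arm_yaw_degrees(target):.1f} deg")
    # → Point arm at (1.0, 1.0), yaw 45.0 deg
```

Using `atan2` rather than `atan(y / x)` keeps the angle correct for people beside or behind the robot and avoids dividing by zero when someone stands directly to the side.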

The development process turned out to be quite the spectacle, as the team ran into several surprises. For instance, when asked who Marc Raibert was, the robot said it didn't know and recommended heading to the IT help desk for further assistance. Strangely enough, the team had never prompted the LLM to refer people elsewhere for help. According to Matt, the robot must have associated the location of the IT help desk with the act of asking for help.

Matt admits that while the robot tour guide is impressive, it was caught hallucinating and making things up. Its performance also suffered whenever it couldn't maintain a stable internet connection.

Moving forward, the team aims to explore this avenue further, especially after discovering that it is possible to combine the results of several general AI systems.

Kevin Okemwa is a seasoned tech journalist based in Nairobi, Kenya with lots of experience covering the latest trends and developments in the industry at Windows Central. With a passion for innovation and a keen eye for detail, he has written for leading publications such as OnMSFT, MakeUseOf, and Windows Report, providing insightful analysis and breaking news on everything revolving around the Microsoft ecosystem. While AFK and not busy following the ever-emerging trends in tech, you can find him exploring the world or listening to music.