AI-powered malware eludes Microsoft Defender's security checks 8% of the time — with just 3 months of training and "reinforcement learning" for around $1,600

AI-assisted malware can apparently bypass Microsoft Defender for Endpoint without relying on the internet for its training data.


Security is a growing concern for many people, especially as generative AI gains broad adoption. In 2023, a study by Microsoft revealed that hackers were adopting AI tools like Microsoft Copilot and OpenAI's ChatGPT to deploy phishing schemes against unsuspecting users.

Now, bad actors are getting more creative, devising ingenious ways to bypass sophisticated security systems and gain unauthorized access to sensitive data. In recent weeks, we've seen multiple users craft elaborate prompts that lower ChatGPT's guardrails and get the tool to generate valid Windows 10 activation keys.

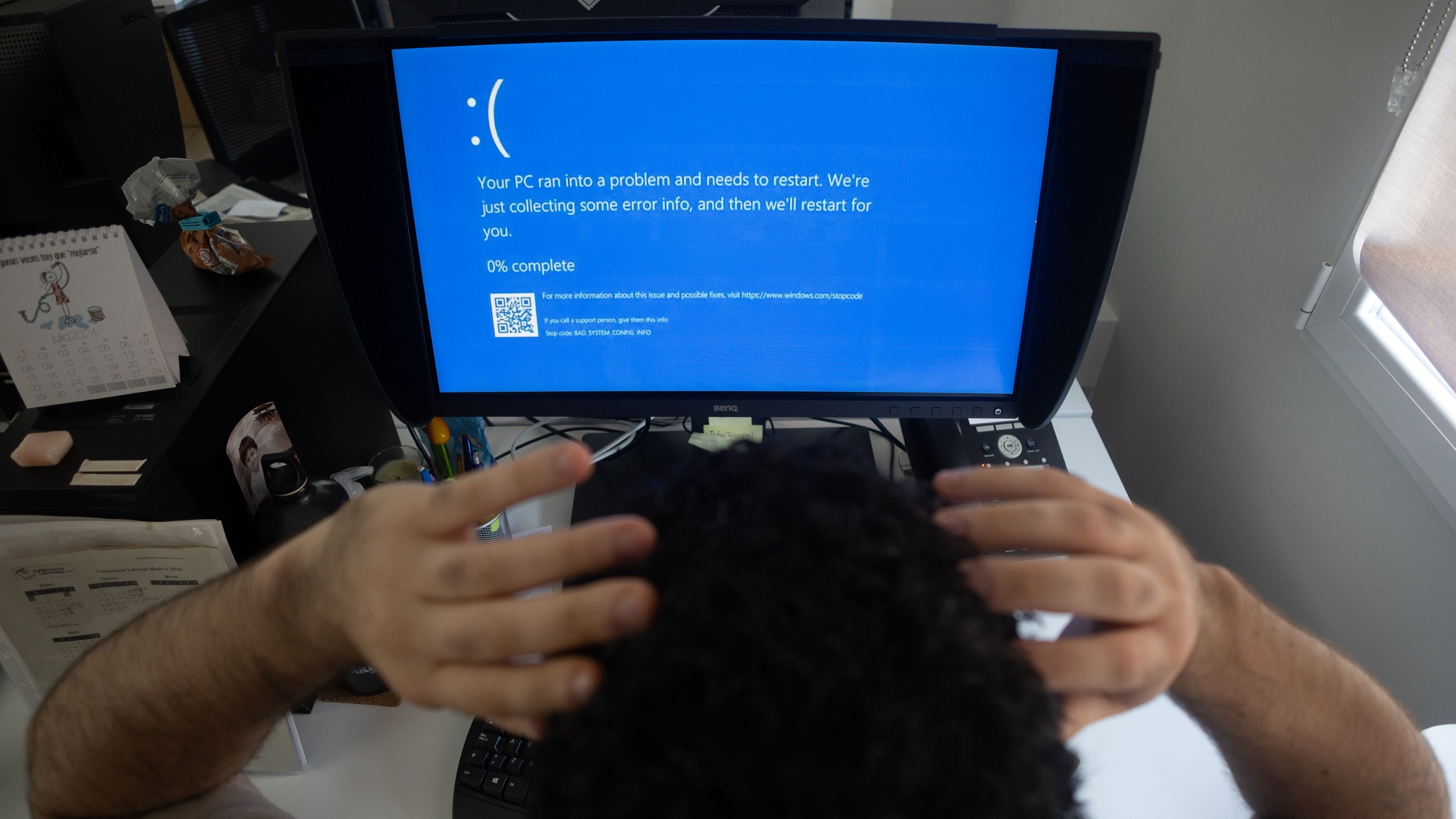

Windows users are probably familiar with Microsoft Defender, a sophisticated security platform designed to protect users from attacks orchestrated by hackers.

While the tool shows great promise, a Dark Reading report reveals that Outflank researchers are set to release new AI malware capable of bypassing Microsoft Defender for Endpoint at the Black Hat 2025 cybersecurity conference in August (via Tom's Hardware).

Speaking to Dark Reading, Kyle Avery, principal offensive specialist lead at Outflank, disclosed that he spent approximately 3 months developing the AI malware and $1,500 to $1,600 training the Qwen 2.5 LLM to bypass Microsoft Defender.

Interestingly, Avery also shared some insights comparing OpenAI's GPT models with its more recent flagship reasoning models like o1. While he acknowledges that GPT-4 was a major upgrade over GPT-3.5, he explains that the o1 reasoning model shipped with capabilities that made it particularly adept at coding and math.

AI and reinforcement learning forge a perfect hacker's paradise

However, these gains seemingly came at the expense of the model's writing abilities, which the researcher claims was by design. He reached this conclusion after DeepSeek shipped its open-source R1 model alongside a research paper detailing its development.


Avery says that DeepSeek used reinforcement learning to improve its model's capabilities across a wide range of subjects, including coding. He applied the same approach when developing the AI malware to bypass Microsoft Defender's security solutions.

The researcher admits that the process wasn't a walk in the park: LLMs are predominantly trained on internet data, which made it difficult to find traditional malware samples to train the AI-driven equivalent on.

This is where reinforcement learning came in clutch. The researcher placed the Qwen 2.5 LLM in a sandbox with Microsoft Defender for Endpoint, then wrote a program to grade how close the model came to outputting an evasion tool.

According to Avery:

"It definitely cannot do this out of the box. One in a thousand times, maybe, it gets lucky and it writes some malware that functions but doesn't evade anything. And so when it does that, you can reward it for the functioning malware."

"As you do this iteratively, and it gets more and more consistent at making something that functions, not because you showed it examples but because it was updated to be more likely to do the sort of thought process that led to the working malware."

Finally, Avery plugged in an API that made it easier to query and retrieve similar alerts generated by Microsoft Defender. This made it much easier for the model to develop malware that is less likely to trigger alerts while bypassing the software's security checks.

The researcher eventually achieved his desired output: the AI generated malware capable of circumventing Microsoft Defender's security solutions approximately 8% of the time.

In comparison, Anthropic's Claude achieved similar results less than 1% of the time, while DeepSeek's R1 managed it just 1.1% of the time. It'll be interesting to see whether companies like Microsoft up the ante on their security solutions as AI-powered scams and malware grow more sophisticated. At this point, they basically have no choice.

Kevin Okemwa is a seasoned tech journalist based in Nairobi, Kenya with lots of experience covering the latest trends and developments in the industry at Windows Central. With a passion for innovation and a keen eye for detail, he has written for leading publications such as OnMSFT, MakeUseOf, and Windows Report, providing insightful analysis and breaking news on everything revolving around the Microsoft ecosystem. While AFK and not busy following the ever-emerging trends in tech, you can find him exploring the world or listening to music.
