OpenAI Unveils Two New AI Models: gpt-oss-120b and gpt-oss-20b, With Local Support on Snapdragon PCs and NVIDIA RTX GPUs

Windows PCs powered by Snapdragon processors or NVIDIA RTX GPUs can run OpenAI's gpt-oss-20b model natively.

OpenAI just released two generative AI models: gpt-oss-120b and gpt-oss-20b. While neither of them is the highly anticipated GPT-5, they mark a significant step forward for OpenAI.

Both gpt-oss-120b and gpt-oss-20b are open-weight models, meaning the trained parameters (the weights) of the models are available to the public. OpenAI has not released an open-weight model since GPT-2 in 2019.

"We're excited to make this model, the result of billions of dollars of research, available to the world to get AI into the hands of the most people possible," OpenAI CEO Sam Altman told Wired.

Neither of the models is meant to replace OpenAI's GPT models, such as the upcoming GPT-5. Both gpt-oss-120b and gpt-oss-20b are text-only, though they can browse the web and work with cloud-based models to help with tasks.

The new models use chain-of-thought reasoning, which breaks big tasks down into smaller tasks to yield better results. It's a more "human" way to approach problem solving.

You can download the models through Hugging Face.
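For readers who prefer a script to the website, here is a minimal sketch of fetching the weights with the `huggingface_hub` Python package. The repository IDs and the `models` target folder are assumptions made for illustration, so confirm them on the Hugging Face model pages before running anything.

```python
from pathlib import Path

# Assumed Hugging Face repository IDs; confirm them on the model pages.
REPO_IDS = {
    "20b": "openai/gpt-oss-20b",
    "120b": "openai/gpt-oss-120b",
}


def local_path_for(size: str, target_dir: str = "models") -> Path:
    """Return the folder the chosen model would be stored in."""
    repo_name = REPO_IDS[size].split("/")[-1]
    return Path(target_dir) / repo_name


def download_model(size: str = "20b", target_dir: str = "models") -> Path:
    """Download every file of the chosen model and return the local folder."""
    # Imported here so the helpers above work without huggingface_hub installed.
    from huggingface_hub import snapshot_download

    local_dir = local_path_for(size, target_dir)
    snapshot_download(repo_id=REPO_IDS[size], local_dir=local_dir)
    return local_dir
```

Calling `download_model("20b")` would start a multi-gigabyte download, so check your free disk space first.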

OpenAI partnered with several development platforms regarding its new models, including Azure, Hugging Face, vLLM, Ollama, AWS, and Fireworks.

Coinciding with the release of gpt-oss-120b and gpt-oss-20b, Ollama released a new app that makes it easier to use local LLMs on a Windows 11 PC.
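Ollama also exposes a local HTTP API, so a model it has pulled can be queried from a script as well as from the app. The sketch below assumes Ollama is running on its default port and that the model is available under the tag `gpt-oss:20b`; both are assumptions to check against your own install (for example with `ollama list`).

```python
import json
import urllib.request

# Default address of a locally running Ollama server (assumed unchanged).
OLLAMA_URL = "http://localhost:11434/api/generate"
# Assumed model tag; verify it against the models installed on your machine.
MODEL_TAG = "gpt-oss:20b"


def build_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt to the local model and return its text reply."""
    with urllib.request.urlopen(build_request(prompt), timeout=300) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `ask("Summarize chain-of-thought reasoning in one sentence.")` would return the model's reply without the prompt leaving the PC.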

AI without the cloud

I'm going to focus a bit more on gpt-oss-20b because it is capable of running on PCs powered by Snapdragon processors. That's a big deal for Snapdragon-powered computing, which is often limited by compatibility issues.

This time, it is Snapdragon-powered PCs that are supported on day one (though Qualcomm cannot claim exclusivity to supporting the model).

Qualcomm discussed the milestone:

"OpenAI has open-sourced its first reasoning model, gpt-oss-20b, a chain-of-thought reasoning model that runs directly on devices with flagship Snapdragon processors. OpenAI’s sophisticated models have been previously confined to the cloud. Today marks the first time the company is making its model available for on-device inference."

The gpt-oss-20b model, which delivers similar results to o3-mini on certain benchmarks, can run on devices with only 16GB of RAM.

That means the model can run on many of the best Copilot+ PCs.
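To see why 16GB is a plausible floor, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need at different precisions. The parameter count (reading "20b" as about 20 billion) and the bit widths are illustrative assumptions, not figures reported above, and the estimate ignores the extra memory used during inference.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Assumption: "20b" is read as roughly 20 billion parameters.
PARAMS_20B = 20e9

# Compare a few hypothetical storage precisions.
for bits in (16, 8, 4):
    print(f"{bits}-bit weights: ~{weight_memory_gb(PARAMS_20B, bits):.0f} GB")
```

Under these assumptions, only the more compact precisions would leave headroom on a 16GB machine.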

Today was a milestone for Qualcomm, but NVIDIA was not left out. Both gpt-oss-120b and gpt-oss-20b were trained on NVIDIA H100 GPUs. The GPU giant shared the news in a blog post.

The more powerful of the two, gpt-oss-120b, required 2.1 million hours of training on those GPUs. The less powerful gpt-oss-20b took only around one-tenth of that time, or roughly 210,000 hours, as reported by Hawkdive.

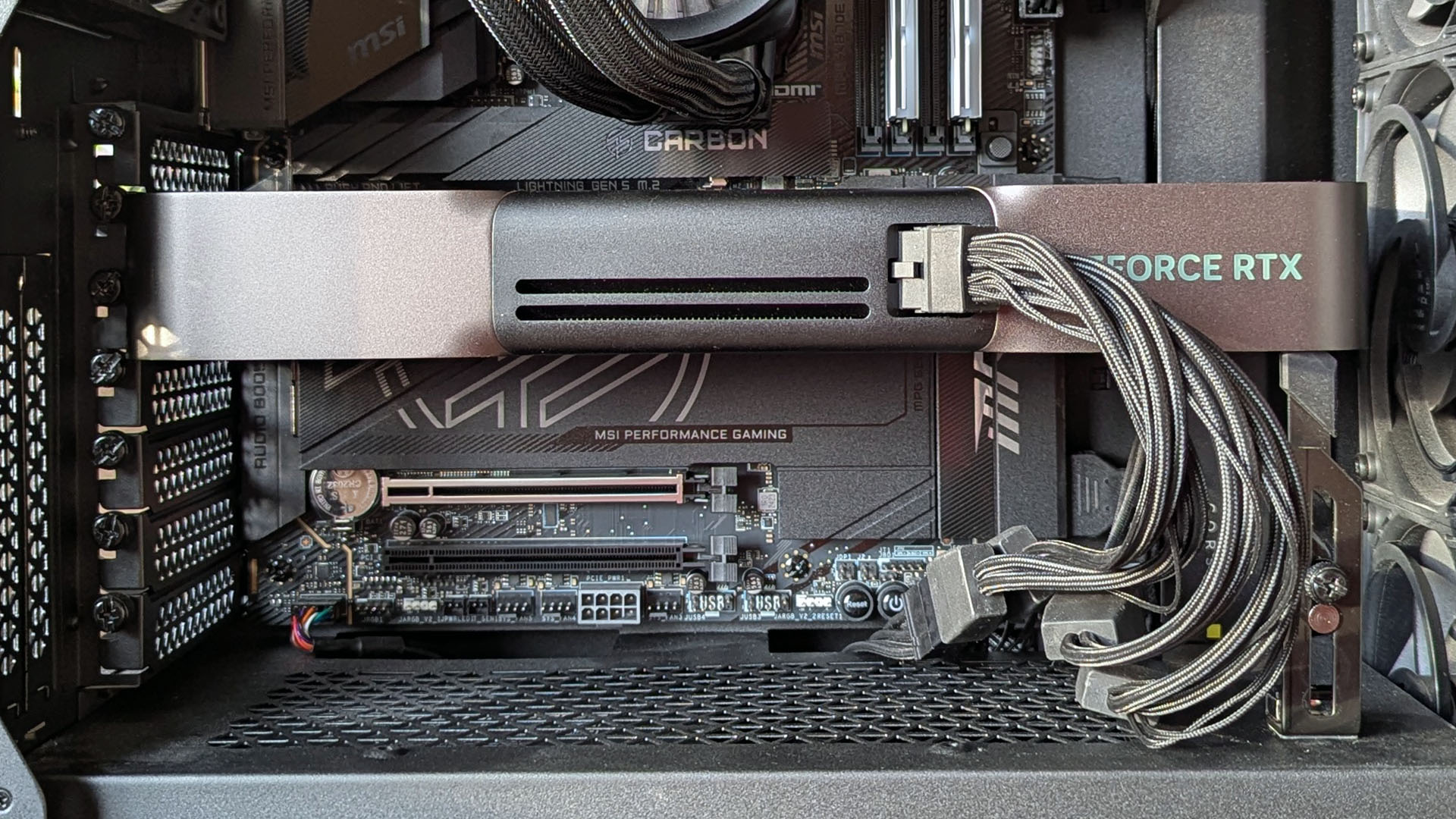

NVIDIA GeForce RTX GPUs can run the gpt-oss-20b model locally. Specifically, the model is supported on systems with at least 16GB of VRAM.

You'll need an NVIDIA RTX PRO GPU if you want to run the more powerful gpt-oss-120b model.

NVIDIA is now a $4 trillion company thanks in large part to its dominance in AI. The company is so dominant in the AI space that President Trump gave up any plans to break it up to increase competition.

Sean Endicott is a news writer and apps editor for Windows Central with 11+ years of experience. A Nottingham Trent journalism graduate, Sean has covered the industry’s arc from the Lumia era to the launch of Windows 11 and generative AI. Having started at Thrifter, he uses his expertise in price tracking to help readers find genuine hardware value.

Beyond tech news, Sean is a UK sports media pioneer. In 2017, he became one of the first to stream via smartphone and is an expert in AP Capture systems. A tech-forward coach, he was named 2024 BAFA Youth Coach of the Year. He is focused on using technology—from AI to Clipchamp—to gain a practical edge.
