Early Xbox One X benchmarks provide a peek at its potential



The Xbox One X arrives this fall as the most powerful console on the market, with 6 teraflops of GPU power. A comprehensive look at what that means for 4K gaming and increased fidelity will have to wait, but a new report from Digital Foundry at Eurogamer offers some early benchmarks that provide a glimpse of the console's potential.

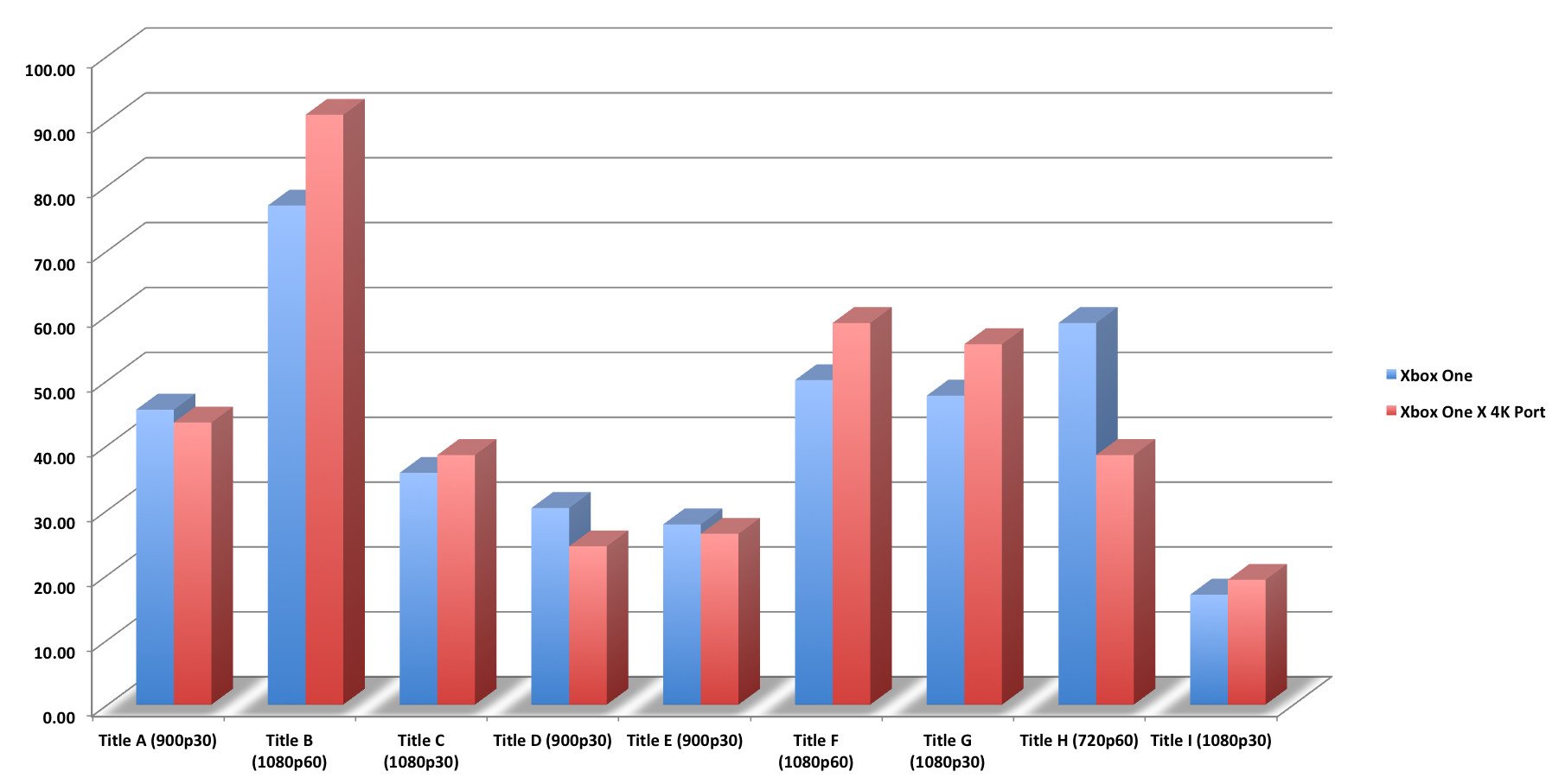

As Digital Foundry's Richard Leadbetter explains, the benchmarks cited in the report were sourced from developer contacts. They are early results from rudimentary ports of existing games to the Scorpio engine, pushed up to 4K without optimizations to take advantage of any new hardware features. The nine games in the benchmark examples aren't identified by name, but Leadbetter was able to make educated guesses about a few of them based on the details provided (engine type, target resolution, genre, and so on).

Despite the lack of optimizations for the new hardware, the early benchmarks show some interesting results. The title that is most likely Forza Motorsport 7 (Title B), for example, was able to achieve 4K at around 91 fps, which translates to using 65.9 percent of available GPU power at its cap of 60 fps. That leaves plenty of headroom for further visual enhancements.

Slightly less impressive is what is assumed to be Gears of War 4 (Title C), which achieves 38.5 fps at 4K, or 78.1 percent of available GPU power at a cap of 30 fps. As Leadbetter points out, however, these early, basic benchmarks may underestimate final results. Gears of War 4 on Xbox One X, for example, is expected to feature higher-resolution textures and a number of other visual enhancements.
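The GPU-usage percentages above appear to follow from simple arithmetic: the frame-rate cap divided by the uncapped frame rate. The sketch below reproduces that calculation. The function name is hypothetical, and the linear relationship between frame rate and GPU load is an assumption rather than something the report spells out.

```python
def gpu_utilization_at_cap(uncapped_fps: float, cap_fps: float) -> float:
    """Estimate the percentage of GPU power used when a game is capped.

    Assumption: GPU load scales linearly with frame rate, so a game that
    could run at `uncapped_fps` needs cap_fps / uncapped_fps of the GPU
    to hold `cap_fps`. The result is clamped to 100 percent.
    """
    if uncapped_fps <= 0 or cap_fps <= 0:
        raise ValueError("frame rates must be positive")
    return min(cap_fps / uncapped_fps, 1.0) * 100.0


# Title B (believed to be Forza Motorsport 7): ~91 fps uncapped, 60 fps cap
forza = gpu_utilization_at_cap(91.0, 60.0)

# Title C (believed to be Gears of War 4): 38.5 fps uncapped, 30 fps cap
gears = gpu_utilization_at_cap(38.5, 30.0)

print(f"Title B: {forza:.1f}% used, {100 - forza:.1f}% headroom")
print(f"Title C: {gears:.1f}% used, {100 - gears:.1f}% headroom")
```

For Title B this gives the 65.9 percent quoted above. For Title C it gives roughly 77.9 percent, slightly below the reported 78.1 percent. The gap may simply reflect rounding in the quoted frame rate, though that is a guess.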

Digital Foundry also provided a bit more insight into the architecture of the console's GPU:

> There's more too, based on the documentation we've seen. The fundamental architecture of the Xbox One X GPU is a confirmed match for the original machine (believed to be the case for PS4 Pro too) with additional enhancements. There are other features, including AMD's delta colour compression, which sees performance increases of seven to nine per cent in two titles Microsoft tested. DCC is actually a feature exclusive to the DX12 API. In fact, DX11 moves into 'maintenance mode' on Xbox One X, suggesting that Microsoft is keen for developers to move on. There are benefits for both Xboxes in doing so - and there may be implications here for the PC versions too. We could really use improved DX12 support on PC, after all.

Some titles in the benchmarks showed performance issues that would likely be rectified by engine-level optimizations for the Xbox One X's hardware features. Considering that the results come from the most basic of ports, they are fairly promising (with the exception of a single outlier on the chart above) for Xbox gamers looking to make the jump to 4K.

For more, Eurogamer's full, in-depth report is definitely worth a read if you're considering moving to the new console. The Xbox One X will launch on November 7 starting at $499.


Dan Thorp-Lancaster is the former Editor-in-Chief of Windows Central. He began working with Windows Central, Android Central, and iMore as a news writer in 2014 and is obsessed with tech of all sorts. You can follow Dan on Twitter @DthorpL and Instagram @heyitsdtl.