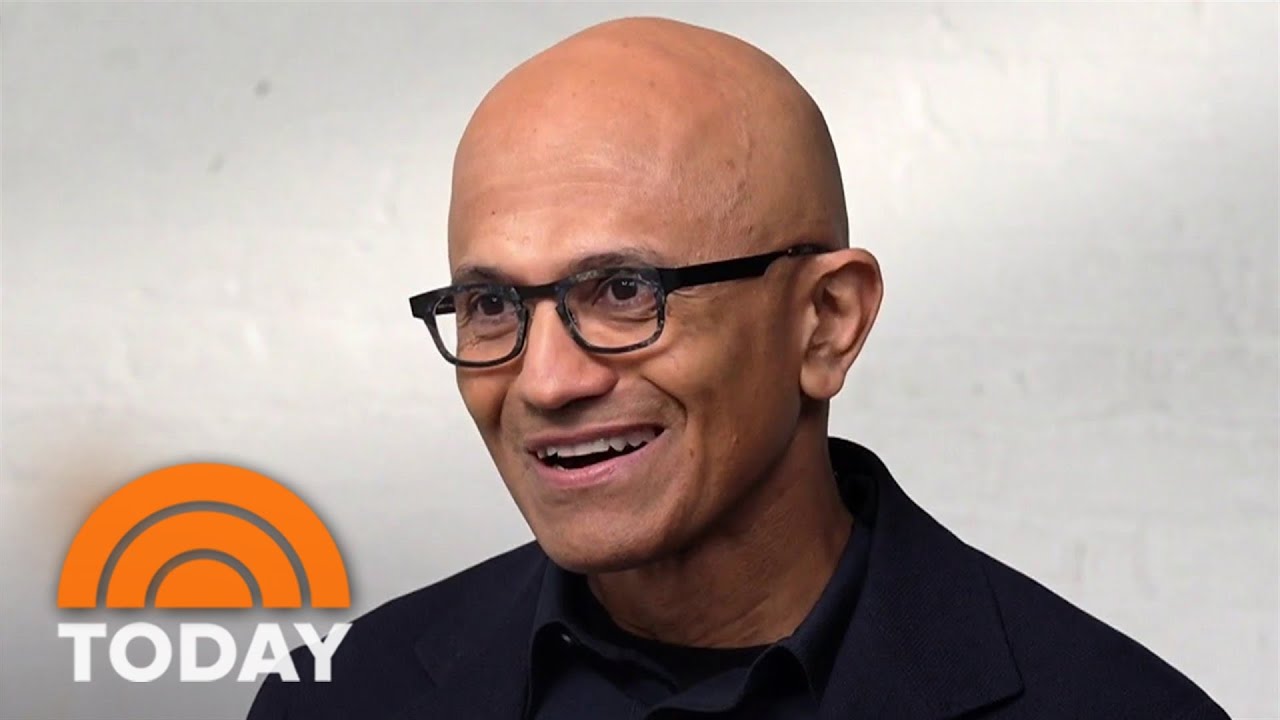

Microsoft CEO Satya Nadella says in an interview that there's 'enough technology' to protect the US presidential election from AI deepfakes and misinformation

Microsoft has put elaborate measures in place to protect the impending US election from AI-generated misinformation.



What you need to know

- In an interview for NBC's Nightly News Exclusive segment hosted by Lester Holt, Microsoft CEO Satya Nadella said there's enough technology in place to protect the forthcoming US presidential election from AI deepfakes and misinformation.

- This includes watermarking, deepfake detection, and content IDs.

- Microsoft also plans to empower voters with authoritative and factual information about the impending election via its search engine, Bing.

Generative AI's everyday use cases vary, but most users are inclined to leverage its capabilities across fields including medicine, education, and workplace productivity. Some users, however, have said outright that they won't use the technology, citing concerns about its safety and lack of guardrails.

Still, the technology greatly impacts the information users encounter while scouring the web. In the past few months, there has been a surge of reports about AI-generated content and deepfakes. With the US elections around the corner, misleading information could steer voters in the wrong direction, which might in turn have dire long-term consequences.

The Biden administration issued an Executive Order last year to address some of these concerns, and while it's a step in the right direction, some issues persist. Microsoft CEO Satya Nadella recently sat down with Lester Holt for NBC's Nightly News Exclusive segment to talk about the measures in place to prevent AI from being used to spread false information about the forthcoming 2024 presidential election.

The interview kicks off with Holt asking Nadella what measures are in place to guard the impending election against deepfakes and misinformation. While this is the first election to take place with AI so widespread, the CEO pointed out that this isn't the first time Microsoft has dealt with misinformation, propaganda campaigns by adversaries, and more.

Satya Nadella confidently indicated there are elaborate measures in place to protect the election from these issues, including watermarking, detecting deepfakes, and content IDs. He further added that there's enough technology to combat disinformation and misinformation brought about by AI.

Is Microsoft doing enough to prevent misinformation and AI deepfakes from negatively impacting the electoral process?

Last year, a report cited instances where Microsoft Copilot (formerly Bing Chat) misled users and voters by furnishing them with false information about the forthcoming elections. Researchers indicated the issue was systemic, as the AI-powered chatbot also gave users misleading information when they tried to learn about the election process in Germany and Switzerland. This isn't entirely a surprise, as multiple reports indicate that AI-powered chatbots have declined in accuracy and are getting dumber.

Microsoft has already highlighted its plan to protect the impending election's integrity from AI deepfakes. It plans to empower voters with authoritative and factual election news on Bing ahead of the poll. However, Bing's market share continues to stagnate despite Microsoft's multi-billion dollar investment in AI, and cracking Google's dominance in search has proven extremely hard.


Google and Bing have been under fire for featuring deepfake porn among their top results, and the issue is seemingly getting worse, with viral images of pop star Taylor Swift recently surfacing across social media. Nadella called explicit content generated using AI alarming and terrible.

While unconfirmed, it's believed that the pop icon's deepfakes were generated using Microsoft Designer. However, a new update has since shipped regulating how users interact with the tool, including blocking nudity-based image generation prompts. This is on top of the newly introduced Disrupt Explicit Forged Images and Non-Consensual Edits (DEFIANCE) Act, a bill designed to regulate and prevent such occurrences.

Kevin Okemwa is a seasoned tech journalist based in Nairobi, Kenya with lots of experience covering the latest trends and developments in the industry at Windows Central. With a passion for innovation and a keen eye for detail, he has written for leading publications such as OnMSFT, MakeUseOf, and Windows Report, providing insightful analysis and breaking news on everything revolving around the Microsoft ecosystem. While AFK and not busy following the ever-emerging trends in tech, you can find him exploring the world or listening to music.