Upgrading from NVIDIA's GTX 1070 GPU to GTX 1080 Ti: Is it worth it?

Is going from a GTX 1070 to a GTX 1080 Ti a huge leap?

NVIDIA's GTX 1070 is one of the best graphics cards around, but its GTX 1080 Ti is an absolute beast. Or that's what we're led to believe, anyway. For less than the cost of a Titan Xp, you get performance that comes very close to it, and on paper at least, the GTX 1080 Ti is a sizeable jump forward from the GTX 1070.

I just upgraded from the 1070 to the 1080 Ti, so I was interested to see just how big a leap forward it was.

From GTX 1070 GPU to GTX 1080 Ti

The particular card I'm using is a fairly standard affair. It's not the Founders Edition, but it is a similar reference design model from Zotac with the blower-style cooler. It has no factory overclock or any of that fanciness. So you get 3,584 CUDA cores, 11GB of GDDR5X memory and a 352-bit memory bus.

I needed to upgrade the power supply in my Alienware Aurora R5 first, since Dell only provided a 450W unit. A 600W minimum is recommended for the GTX 1080 Ti, and I went with the EVGA Supernova G3 750W.

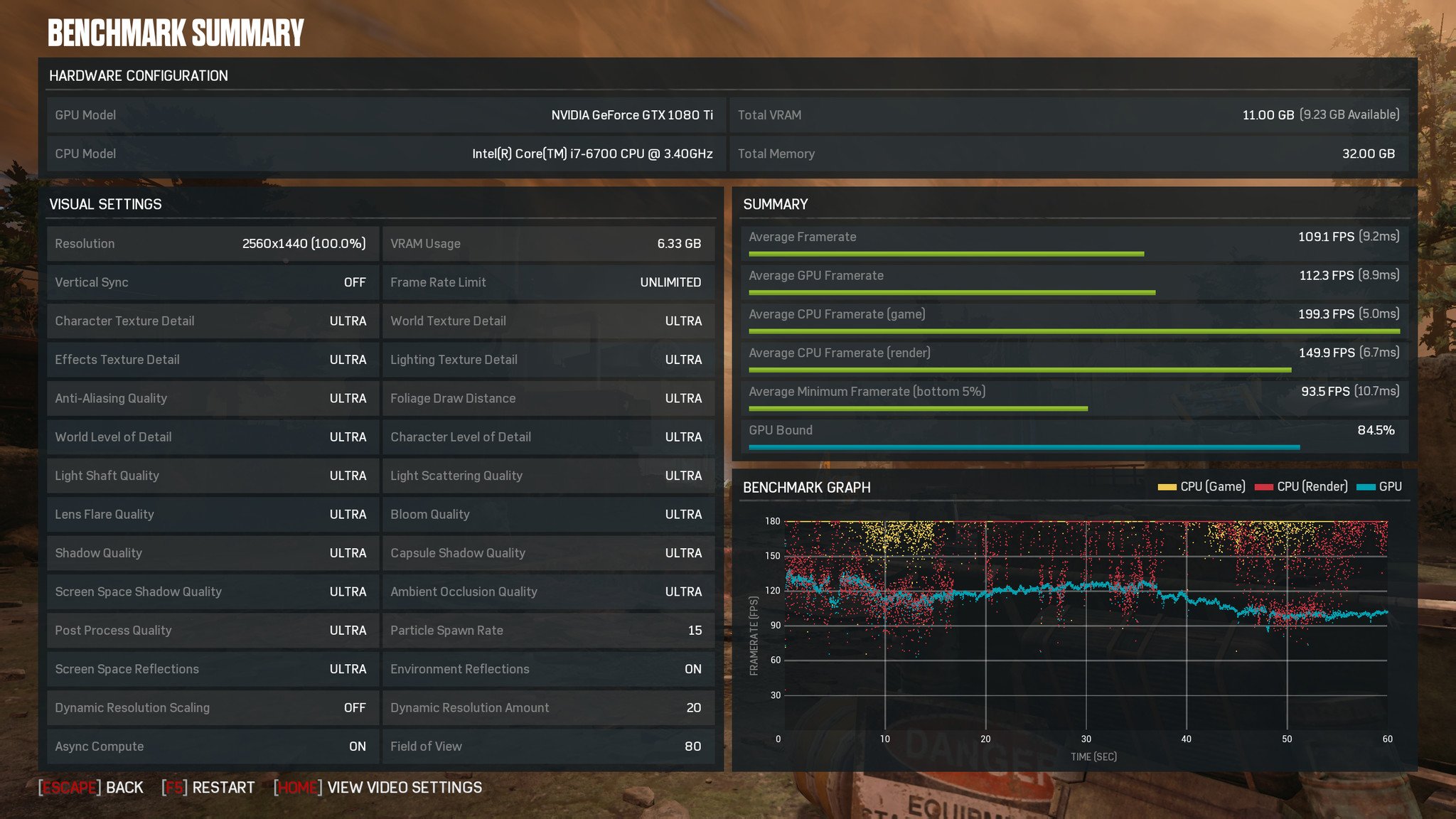

So, on to some benchmarks. All comparisons were made at 1440p using the maximum settings available in each game. The GTX 1070 was never pushed as a card for 4K gaming, and I use a 1440p monitor with my PC, so this is the fairest comparison I could make. The rest of the PC consists of an Intel Core i7-6700 processor and 32GB of RAM.

| Game | GTX 1070 (avg FPS) | GTX 1080 Ti (avg FPS) |
|---|---|---|
| Gears of War 4 | 67.2 | 109.1 |
| Rise of the Tomb Raider | 71.2 | 113.1 |
| GTA V | 62.7 | 73.7 |
| PUBG | 41.6 | 68.9 |

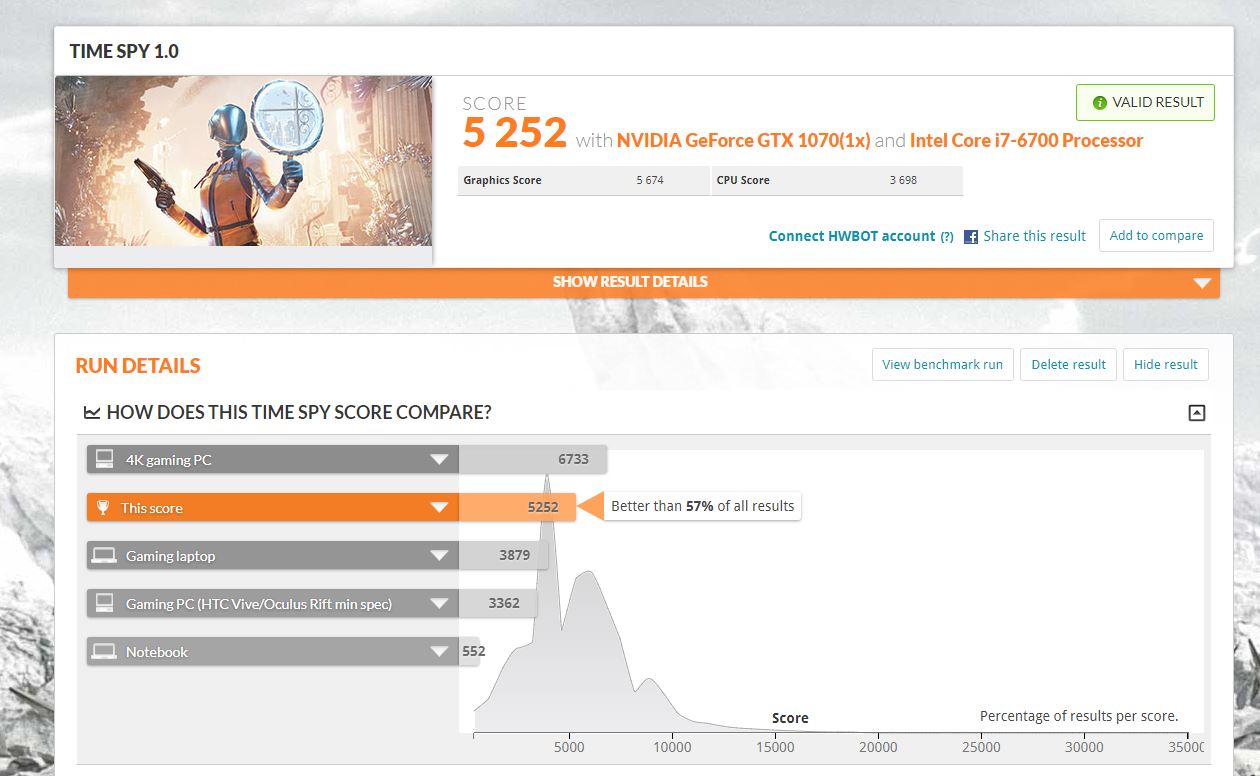

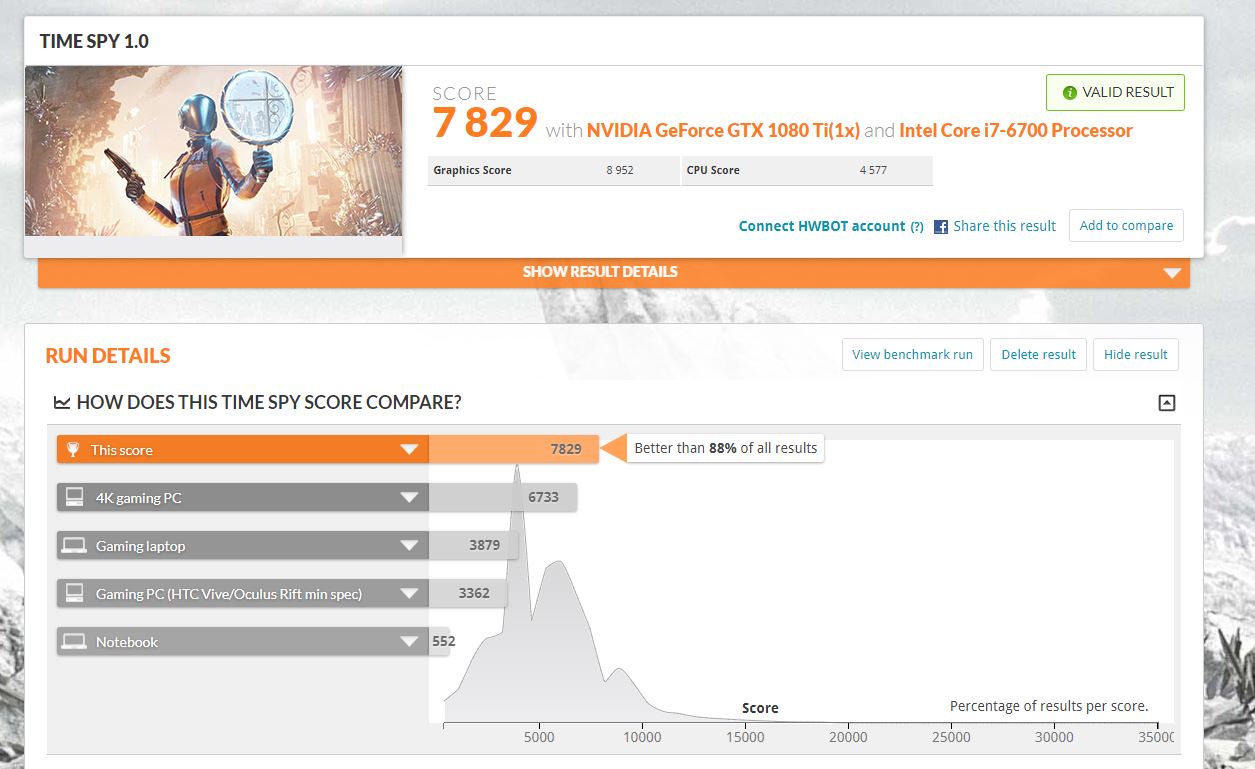

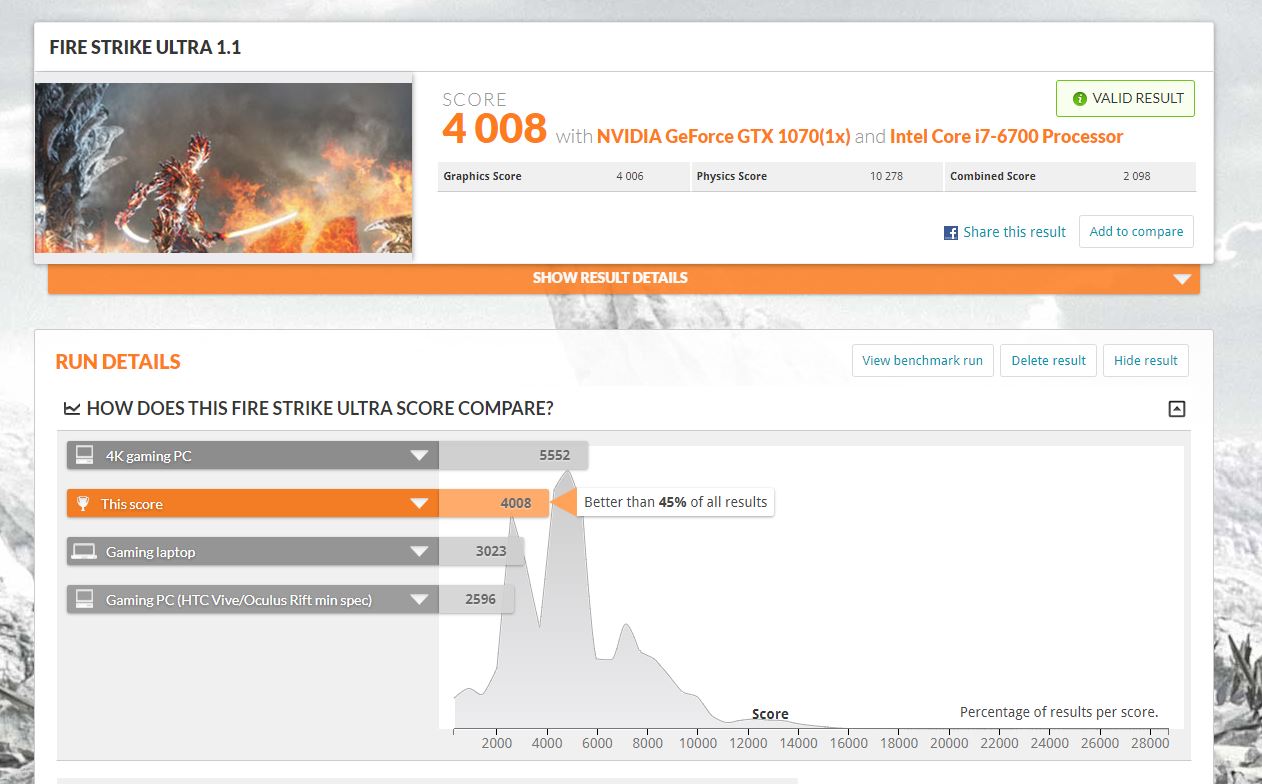

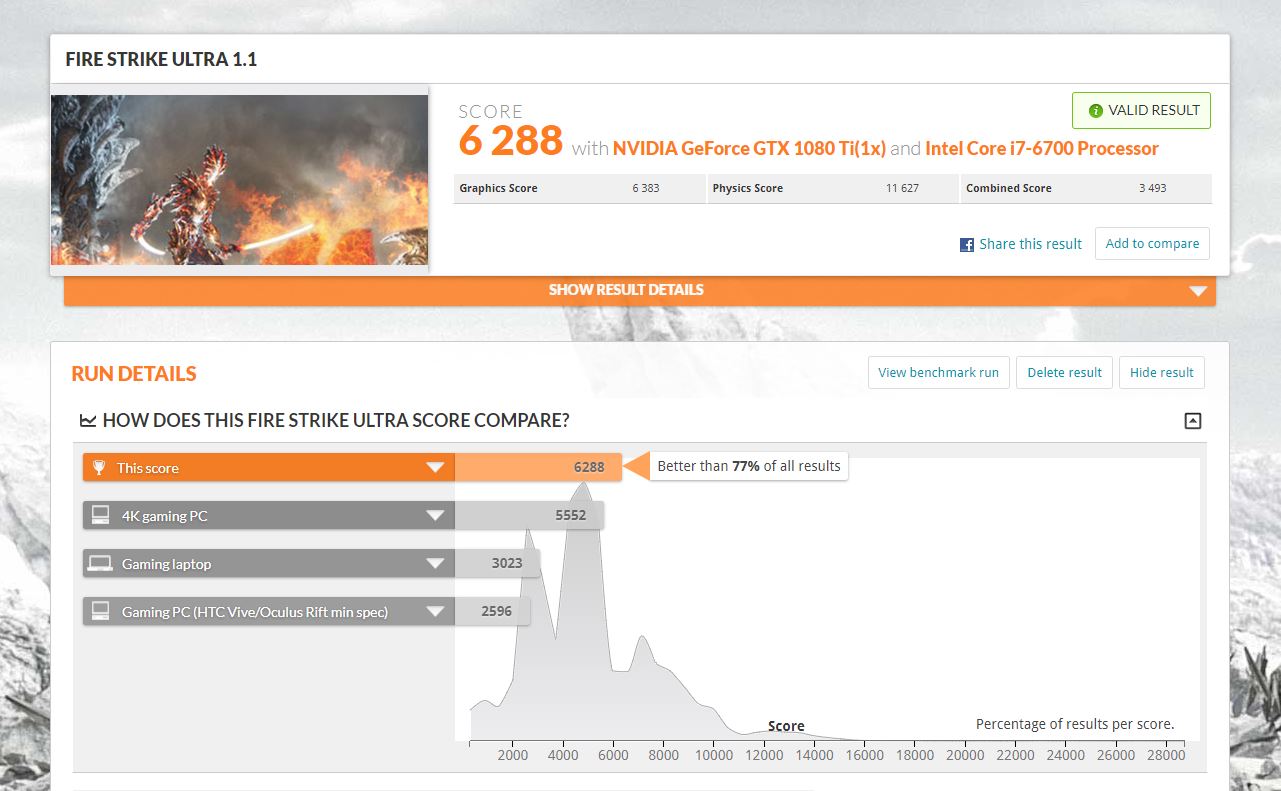

For synthetic benchmarks, I ran both cards through 3DMark's Time Spy (DirectX 12) and Fire Strike Ultra tests.

The numbers speak for themselves: in both the games and the synthetic benchmarks, I'm seeing a huge increase in performance. The size of the gain varies, but it's never a case of being "just a bit better."

It's a lot better in most cases. The smallest performance increase comes in GTA V, which seems to be fairly CPU-intensive anyway.
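To put the gaming results in proportion, here's a quick Python sketch that turns each pair of averages from the table above into a percentage uplift. It uses only those four FPS figures; the `uplift_percent` helper is a hypothetical name chosen for illustration, not part of 3DMark or any other benchmarking tool.

```python
# Average FPS at 1440p, max settings: (GTX 1070, GTX 1080 Ti), from the table above.
results = {
    "Gears of War 4": (67.2, 109.1),
    "Rise of the Tomb Raider": (71.2, 113.1),
    "GTA V": (62.7, 73.7),
    "PUBG": (41.6, 68.9),
}


def uplift_percent(old_fps: float, new_fps: float) -> float:
    """Hypothetical helper: percentage gain going from old_fps to new_fps."""
    return (new_fps - old_fps) / old_fps * 100


# Print the relative gain for each game, rounded to one decimal place.
for game, (gtx_1070, gtx_1080_ti) in results.items():
    print(f"{game}: +{uplift_percent(gtx_1070, gtx_1080_ti):.1f}%")
```

Run as-is, it works out to roughly +62% in Gears of War 4, +59% in Rise of the Tomb Raider, +66% in PUBG and just +18% in GTA V, which is why GTA V stands out as the smallest jump.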

PlayerUnknown's Battlegrounds offered the biggest test so far at maxed-out settings, but the game's optimization is still way off. I play it, as do over 10 million other people, so I was curious to see how much I could get out of the GTX 1080 Ti.

Bottom line

Whether it was a necessary upgrade isn't in question, because I already knew it wasn't, especially not when this particular card costs around $800. The GTX 1070 has served me well and never really left me wanting more. The GTX 1080 Ti, though, is in a different ballpark. Part of the reason to upgrade was in preparation for a high-end VR future, but even in regular gaming, you're getting insane performance from one of these cards without it even breaking a sweat.

If you do make the upgrade, you'll know where the money went.

Richard Devine is the Managing Editor at Windows Central with over a decade of experience. A former Project Manager and long-term tech addict, he joined Mobile Nations in 2011 and has been found in the past on Android Central as well as Windows Central. Currently, you'll find him steering the site's coverage of all manner of PC hardware and reviews. Find him on Mastodon at mstdn.social/@richdevine