
NVIDIA has received a fair amount of flak lately for its decision to release modern RTX 50-series graphics cards (GPUs) with 8GB of VRAM.

There are 8GB versions of the RTX 5060 Ti alongside pricier 16GB models; the RTX 5060 non-Ti only has an 8GB version; and the RTX 5050, well, it has bigger problems than its 8GB of VRAM.

While NVIDIA made sure to boast about lower VRAM requirements from its latest GPU generation at its CES 2025 keynote — before all of these Blackwell GPUs hit the market — many users continue to experience problems running certain games at higher settings with an 8GB GPU.

The 8GB VRAM drama could soon come to an end, at least if some early tests performed by X user @opinali (via Wccftech) using NVIDIA's new Neural Texture Compression (NTC) and Microsoft's DirectX Raytracing (DXR) 1.2 Cooperative Vectors hold up.

First look at NVIDA's Neural Texture Compression with DXR1.2 Cooperative Vector! First, this needs a preview driver (590.26), I installed that so you don't have too-and it corrupted the screen, only after a few hard-resets it decided to work. 😅🧵 https://t.co/szgX1jVtcY (@opinali, July 15, 2025)

User opinali got their hands on an early NVIDIA preview driver (version 590.26) and, pairing it with a beta of the RTX NTC SDK from GitHub, ran some rendering tests to see how the new combination of NTC and DXR's Cooperative Vectors performs.

These tests are early and rudimentary, but the results on an NVIDIA RTX 5080 are certainly promising.

Not only does the combination of NVIDIA NTC and DXR 1.2 Cooperative Vectors more than double rendering performance compared with the DP4A fallback, but it also cuts texture memory use by nearly 90% compared with conventionally transcoded textures. That's not a typo; I mean 90%.


How does it perform? Disabling v-sync, RTX 5080, demo at the startup position: (explained next tweet)

| Mode | FPS | Memory |
| --- | --- | --- |
| Default | 2,350 | 9.20MB |
| No FP8 | 2,160 | 9.20MB |
| No Int8 | 2,350 | 9.20MB |
| DP4A | 1,030 | 9.14MB |
| Transcoded | 2,600 | 79.38MB |

(@opinali, July 15, 2025)

I know that textures aren't the only thing stored in a GPU's VRAM, but they do take up a lot of space.

As opinali explains, "textures can be 50%-70% of the VRAM used by games, so this is HUGE. In a real game, considering bandwidth, GPU copy costs, cache efficiency ... I bet NTC will be easily a net win in [performance and FPS] too."

And as seen in the early tests from opinali, it also significantly improves overall rendering performance, jumping from about 1,030 FPS using the DP4A fallback path to 2,350 FPS using the default NTC setting with Cooperative Vectors. For reference, the Transcoded mode hit 2,600 FPS, but it used 79.38MB of memory instead of 9.20MB.
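To see where the headline percentages come from, here's a quick Python sketch that recomputes them from opinali's published RTX 5080 numbers. The `results` table and the `percent_change` helper are my own illustration, not part of the NTC SDK, and the MB figure is simply the memory number the demo reported in each mode.

```python
# Figures from opinali's RTX 5080 test (v-sync off, demo at the startup position).
# Each entry is (frames per second, memory in MB) as reported by the demo.
results = {
    "Default": (2350, 9.20),
    "No FP8": (2160, 9.20),
    "No Int8": (2350, 9.20),
    "DP4A": (1030, 9.14),
    "Transcoded": (2600, 79.38),
}


def percent_change(new: float, old: float) -> float:
    """Percentage change going from `old` to `new` (positive = increase)."""
    return (new - old) / old * 100.0


default_fps, default_mb = results["Default"]
dp4a_fps, _ = results["DP4A"]
transcoded_fps, transcoded_mb = results["Transcoded"]

print(f"Default vs DP4A fps:       {percent_change(default_fps, dp4a_fps):+.0f}%")
print(f"Default vs Transcoded fps: {percent_change(default_fps, transcoded_fps):+.0f}%")
print(f"Default vs Transcoded MB:  {percent_change(default_mb, transcoded_mb):+.0f}%")
```

Run as-is, it reports that the default NTC path is roughly 128% faster than the DP4A fallback (about 2.3x), roughly 10% slower than the Transcoded mode, and uses roughly 88% less memory than it.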

It's impressive stuff, to say the least. If similar gains carry over to shipping games, the argument over whether 8GB of VRAM is enough could become moot.
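As a rough back-of-the-envelope check, the sketch below combines opinali's estimate that textures make up 50%-70% of a game's VRAM with the roughly 88% texture-memory reduction seen in the demo (79.38MB down to 9.20MB). It assumes, purely for illustration, that the demo's reduction carries over unchanged to a real game, which is far from guaranteed; the function name is hypothetical.

```python
# Back-of-the-envelope estimate, NOT a measurement.
# Assumption: textures are 50%-70% of a game's VRAM (opinali's estimate) and the
# demo's texture-memory reduction applies unchanged to the whole game.


def estimated_vram_share_after_ntc(texture_share: float, texture_reduction: float) -> float:
    """Fraction of the original VRAM still needed after compressing textures.

    texture_share: fraction of VRAM occupied by textures (0..1)
    texture_reduction: fractional reduction in texture memory (0..1)
    """
    non_texture = 1.0 - texture_share
    remaining_textures = texture_share * (1.0 - texture_reduction)
    return non_texture + remaining_textures


# Reduction observed in the RTX 5080 demo: Transcoded 79.38MB vs NTC 9.20MB (~0.884).
demo_reduction = 1.0 - 9.20 / 79.38

for share in (0.5, 0.7):
    remaining = estimated_vram_share_after_ntc(share, demo_reduction)
    print(f"textures = {share:.0%} of VRAM -> about {remaining:.0%} of the original VRAM needed")
```

Under those assumptions, a game would need roughly 38%-56% of its current VRAM, though a small demo scene is a long way from a full game.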

What is Neural Rendering and what are Cooperative Vectors?

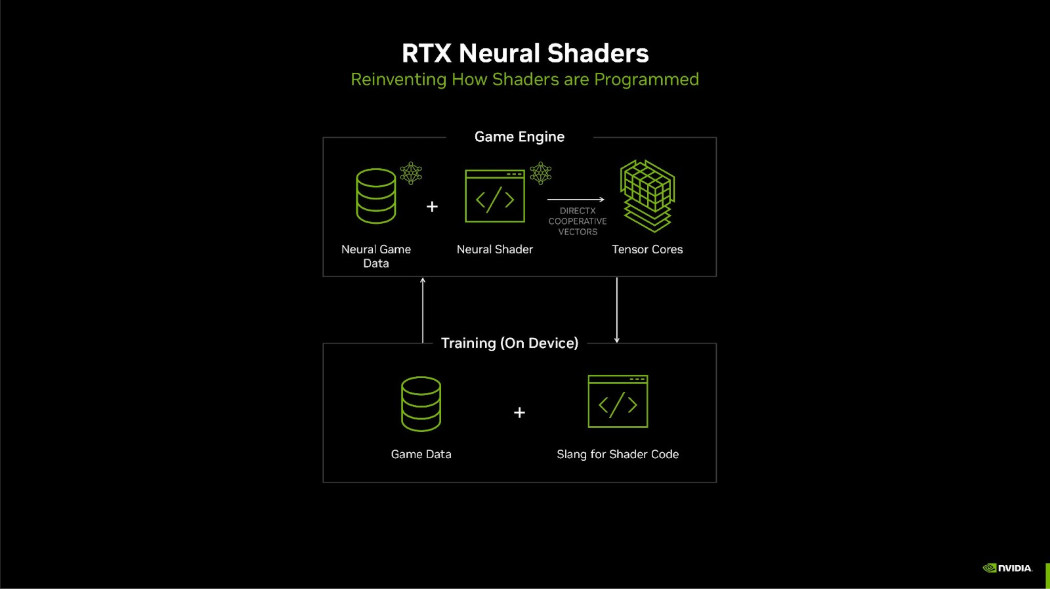

Taking a step back for a moment, Microsoft announced in January 2025 that it's working with NVIDIA to bring neural rendering methods to DirectX via Cooperative Vectors.

NVIDIA's Neural Shaders are essentially small neural networks embedded in programmable shaders. According to NVIDIA, one application, RTX Neural Texture Compression, can use up to seven times less VRAM than traditional block-compressed textures.
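To make the "small neural network in a shader" idea more concrete, here's a toy Python sketch of the decode step: a handful of compressed features go in, a two-layer network runs, and an RGBA color comes out. Everything here is hypothetical, including the layer sizes, the random weights standing in for trained ones, and the feature layout; NVIDIA's actual NTC format and network are different, and this toy doesn't model how much memory the features would really take.

```python
import random

# Toy illustration only. Sizes, weights, and feature layout are hypothetical;
# this is not NVIDIA's NTC format or network.
LATENT_DIM = 8   # hypothetical number of compressed features fetched for a texel
HIDDEN_DIM = 16  # hypothetical hidden-layer width
OUT_DIM = 4      # RGBA


def make_layer(n_in, n_out, rng):
    """Random weights and biases standing in for trained parameters."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-0.1, 0.1) for _ in range(n_out)]
    return weights, biases


def dense(layer, x, relu):
    """One fully connected layer: y = W @ x + b, optionally followed by ReLU."""
    weights, biases = layer
    y = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
    return [max(0.0, v) for v in y] if relu else y


def decode_texel(latent, layers):
    """Run the tiny MLP: compressed features in, RGBA out (clamped to [0, 1])."""
    hidden = dense(layers[0], latent, relu=True)
    rgba = dense(layers[1], hidden, relu=False)
    return [min(1.0, max(0.0, c)) for c in rgba]


rng = random.Random(0)
layers = [make_layer(LATENT_DIM, HIDDEN_DIM, rng), make_layer(HIDDEN_DIM, OUT_DIM, rng)]
latent = [rng.uniform(-1.0, 1.0) for _ in range(LATENT_DIM)]  # stand-in for stored features
print(decode_texel(latent, layers))
```

On a GPU, the matrix-vector multiplies inside `dense` are the kind of work that dedicated AI hardware is built to speed up.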

Neural Materials are designed to handle more complex shaders, speeding up material processing by up to fivefold, according to NVIDIA. And Neural Radiance Cache improves path-traced indirect lighting performance.

Microsoft's DirectX 12 Cooperative Vectors are what allow these neural networks to run in real time on the dedicated AI hardware in modern GPUs, including the Tensor Cores in NVIDIA's RTX cards.

This is the first real look at what NVIDIA and Microsoft's partnership is set to deliver, and it looks very promising.

What about AMD and Intel GPUs?

Now some very early testing for RDNA 4! Here's the RTXNTC sample on my 9070 XT, with AA=Off like my previous tests. AMD's DXR 1.2 preview driver is not shipping yet, but they have Vulkan already... however, it doesn't work here so the sample only runs in DP4A mode. 🧵 https://t.co/uG5Ik84VYS pic.twitter.com/OQnmmzFt2f (@opinali, July 15, 2025)

Opinali later returned to X with some early results for AMD RDNA 4, running Neural Texture Compression on an AMD Radeon RX 9070 XT.

Because there is not yet a DXR 1.2 driver available for AMD cards, and AMD's existing Vulkan support for the feature didn't work with the sample, opinali was only able to run the rendering test in DP4A mode. Early results are nevertheless promising.
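For context on what the DP4A fallback does: DP4A is a GPU instruction that multiplies four pairs of signed 8-bit integers packed into 32-bit words and adds the sum to a 32-bit accumulator (HLSL exposes it as `dot4add_i8packed` in Shader Model 6.4). The Python sketch below only emulates that arithmetic; the helper names are mine, and treating accumulator overflow as 32-bit wraparound is an assumption.

```python
# Software emulation of DP4A-style arithmetic. Not how the NTC SDK implements
# its DP4A path; helper names are hypothetical.


def pack_int8x4(values):
    """Pack four signed 8-bit integers into one 32-bit word (lane 0 = low byte)."""
    assert len(values) == 4 and all(-128 <= v <= 127 for v in values)
    word = 0
    for lane, v in enumerate(values):
        word |= (v & 0xFF) << (8 * lane)
    return word


def unpack_int8x4(word):
    """Inverse of pack_int8x4: recover the four signed 8-bit lanes."""
    lanes = []
    for lane in range(4):
        byte = (word >> (8 * lane)) & 0xFF
        lanes.append(byte - 256 if byte >= 128 else byte)
    return lanes


def wrap_int32(x):
    """Wrap a Python int into the signed 32-bit range (assumed overflow behavior)."""
    x &= 0xFFFFFFFF
    return x - (1 << 32) if x >= (1 << 31) else x


def dp4a(a_word, b_word, acc):
    """acc + dot(a, b) over four int8 lanes, with a 32-bit accumulator."""
    a, b = unpack_int8x4(a_word), unpack_int8x4(b_word)
    return wrap_int32(acc + sum(x * y for x, y in zip(a, b)))


a = pack_int8x4([1, -2, 3, 127])
b = pack_int8x4([4, 5, -6, 2])
print(dp4a(a, b, 10))  # 1*4 - 2*5 + 3*(-6) + 127*2 + 10
```

Neural network math built from instructions like this runs on a GPU's regular shader cores rather than its dedicated AI hardware, which is one plausible reason the DP4A mode trailed the Cooperative Vectors path on the RTX 5080.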

In DP4A mode, opinali saw the 9070 XT beat NVIDIA's RTX 5080 by about 10% using Vulkan and by 110% using DirectX 12. It's important to note that NVIDIA's preview driver is still rough, and that's likely why the gap is so large on the DX12 side.

These are all very early tests, but it's looking promising for RDNA 4 GPUs as well as NVIDIA's Blackwell hardware.

Cale Hunt brings to Windows Central more than nine years of experience writing about laptops, PCs, accessories, games, and beyond. If it runs Windows or in some way complements the hardware, there’s a good chance he knows about it, has written about it, or is already busy testing it.
