Intel XeSS steps into the ring alongside AMD FSR and NVIDIA DLSS, new details revealed

You can never have enough supersampling.

What you need to know

- NVIDIA has DLSS 2.0, AMD has FSR, and soon, Intel will have XeSS.

- All these acronyms represent upscaling solutions: the game renders at a lower internal resolution, and the upscaler reconstructs a sharper, higher-resolution image. The result keeps much of a game's visual luster while boosting framerates higher than native rendering would allow.

- Intel's XeSS doesn't have a firm release window yet, but the more information Intel gives us about the tech, the closer we likely are to knowing when it will be available.

The 2022 Game Developers Conference (GDC) is underway, and lots of big tech companies are sharing details about their latest projects. Among the participants is Intel, which is showing off new XeSS content and sharing fresh factoids.

Among the nuggets of new info: XeSS aims to upscale from 1080p to 4K, and it is designed to work across platforms and games generically, so it doesn't need to be specially optimized for individual titles. Its SDK will also be open source.

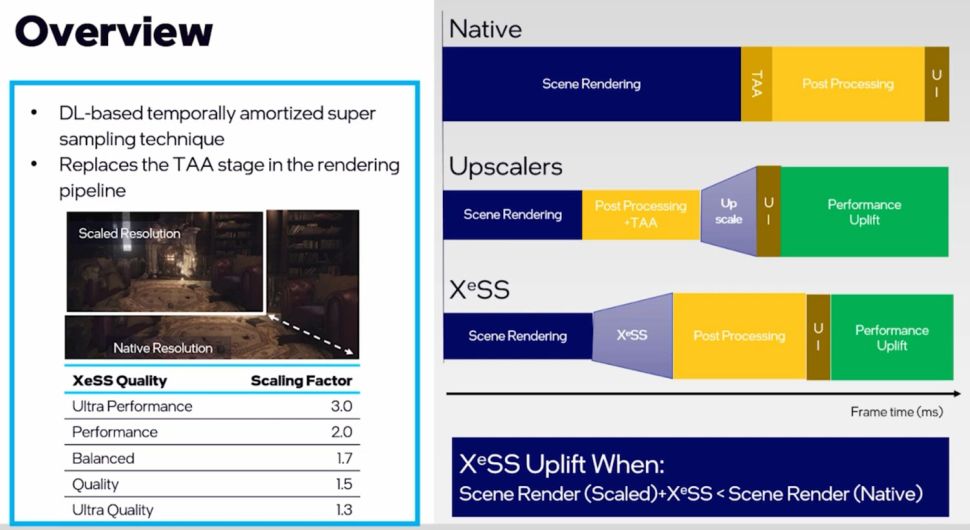

The upscaler will come in five flavors: Ultra Performance, Performance, Balanced, Quality, and Ultra Quality. Ultra Performance will have the maximum scaling factor of 3.0, while Ultra Quality sits at the low end with 1.3. It's said that XeSS will be able to top out at higher scaling ratios than DLSS and FSR. Naturally, NVIDIA and AMD still have advantages of their own.
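To make those scaling factors concrete, here's a minimal sketch of the arithmetic. It assumes the factor divides each axis of the output resolution (width and height separately), so total pixel count drops by the factor squared. The helper name `render_resolution` and the `PRESETS` table are illustrative only, not part of Intel's SDK, and only the two factors quoted above are used.

```python
# Illustrative sketch, NOT Intel's XeSS API.
# Assumption: the scaling factor applies per axis, so a factor of 3.0
# renders at 1/3 the width and 1/3 the height of the output image.


def render_resolution(out_w: int, out_h: int, scale: float) -> tuple[int, int]:
    """Return the (hypothetical) internal render resolution for an output size."""
    return round(out_w / scale), round(out_h / scale)


# Only the two endpoint factors quoted in the article.
PRESETS = {"Ultra Performance": 3.0, "Ultra Quality": 1.3}

if __name__ == "__main__":
    for name, factor in PRESETS.items():
        w, h = render_resolution(3840, 2160, factor)
        # factor ** 2 = how many times fewer pixels are rendered than displayed
        print(f"{name}: {w}x{h} -> 3840x2160 ({factor ** 2:.2f}x fewer pixels)")
```

Under the same per-axis assumption, the 1080p-to-4K upscaling Intel mentions works out to a factor of 2.0, since 3840 / 2 = 1920 and 2160 / 2 = 1080.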

You can check out the nitty-gritty technical details of XeSS over at Intel's site, wherein it has lots of content exploring all the ins and outs of its invention.

If you're wondering what NVIDIA Deep Learning Super Sampling and AMD FidelityFX Super Resolution are, since they're a big part of the discussion around Intel XeSS and what it brings to the table: they're similar framerate-boosting solutions, each with its own design, perks, and drawbacks. You can learn more at our AMD FSR vs NVIDIA DLSS breakdown. Also note that AMD just showcased its FSR 2.0 upgrade, which will fully support Xbox Series X & S, so get ready for the supersampling competition to heat up.

Robert Carnevale was formerly a News Editor for Windows Central. He's a big fan of Kinect (it lives on in his heart), Sonic the Hedgehog, and the legendary intersection of those two titans, Sonic Free Riders. He is the author of Cold War 2395.