Microsoft acquires Semantic Machines in push toward conversational AI

Taking talking tech to the fore.

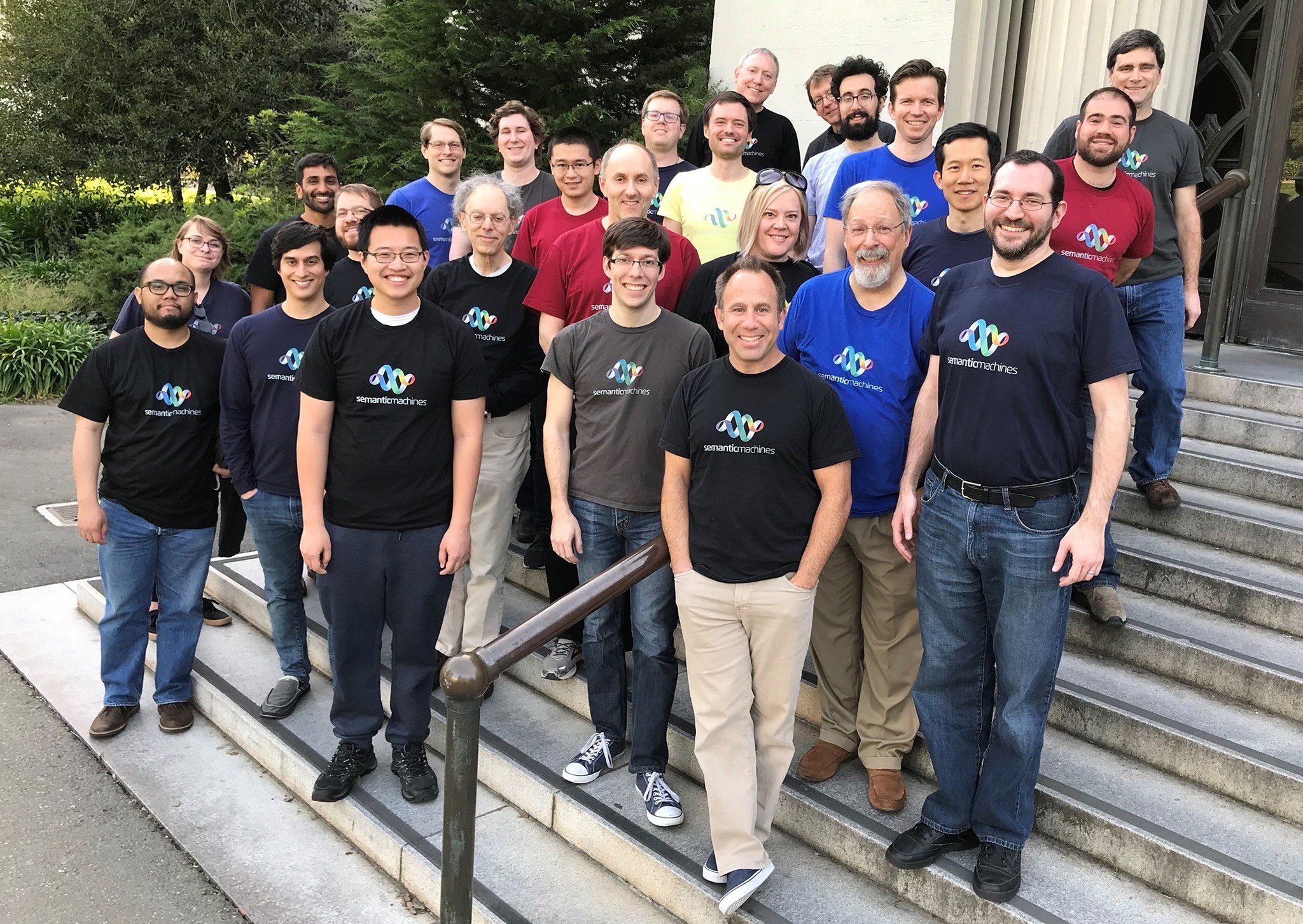

Microsoft today announced that it has acquired Berkeley, California-based AI company Semantic Machines. The acquisition, Microsoft says, will help it build upon its burgeoning AI efforts to make speaking with digital assistants like Cortana and bots more like carrying on a natural conversation.

Currently, interacting with bots and digital assistants involves issuing simple commands or queries, such as asking for the latest sports score or setting a reminder. Going forward, Microsoft wants to evolve these interactions to be much closer to how you would speak with another human. That's where Semantic Machines comes in. "Their work uses the power of machine learning to enable users to discover, access and interact with information and services in a much more natural way, and with significantly less effort," Microsoft says.

Semantic Machines counts among its team several prominent natural language AI researchers, including UC Berkeley professor Dan Klein and Stanford University professor Percy Liang, along with former Apple chief speech scientist Larry Gillick. The company is led by technology entrepreneur Dan Roth.

As part of the acquisition, Microsoft says it will open a conversational AI center in Berkeley:

With the acquisition of Semantic Machines, we will establish a conversational AI center of excellence in Berkeley to push forward the boundaries of what is possible in language interfaces. Combining Semantic Machines' technology with Microsoft's own AI advances, we aim to deliver powerful, natural and more productive user experiences that will take conversational computing to a new level. We're excited to bring the Semantic Machines team and their technology to Microsoft.

The acquisition comes not long after Microsoft touted a major breakthrough in conversational AI that allows chatbots to talk and listen at the same time. This lets digital assistants and bots carry on a more flowing conversation, much closer to how humans talk with one another. This week, Microsoft's AI chief Harry Shum revealed that those capabilities, known as "full duplex," will be made available to developers and partners in Asia on June 1 for one of its chatbots, Xiaoice:

This week, I announced some very exciting new developments and features for Xiaoice. We're making her full duplex capabilities available for partners and developers to use in their applications. We're also introducing new features to help Xiaoice connect with families. With input from parents and kids, she'll be able to create a 10-minute customized audio story for kids in about 20 seconds.

With Google recently showing off similarly impressive tech of its own, competition in the conversational AI space looks to be heating up.

Eventually, we can likely expect these advancements to make their way to Microsoft's cognitive services for developers, Cortana, and chatbots like Zo in the U.S.

Dan Thorp-Lancaster is the former Editor-in-Chief of Windows Central. He began working with Windows Central, Android Central, and iMore as a news writer in 2014 and is obsessed with tech of all sorts. You can follow Dan on Twitter @DthorpL and Instagram @heyitsdtl.