Radeon will support next-gen HDR displays in 2016

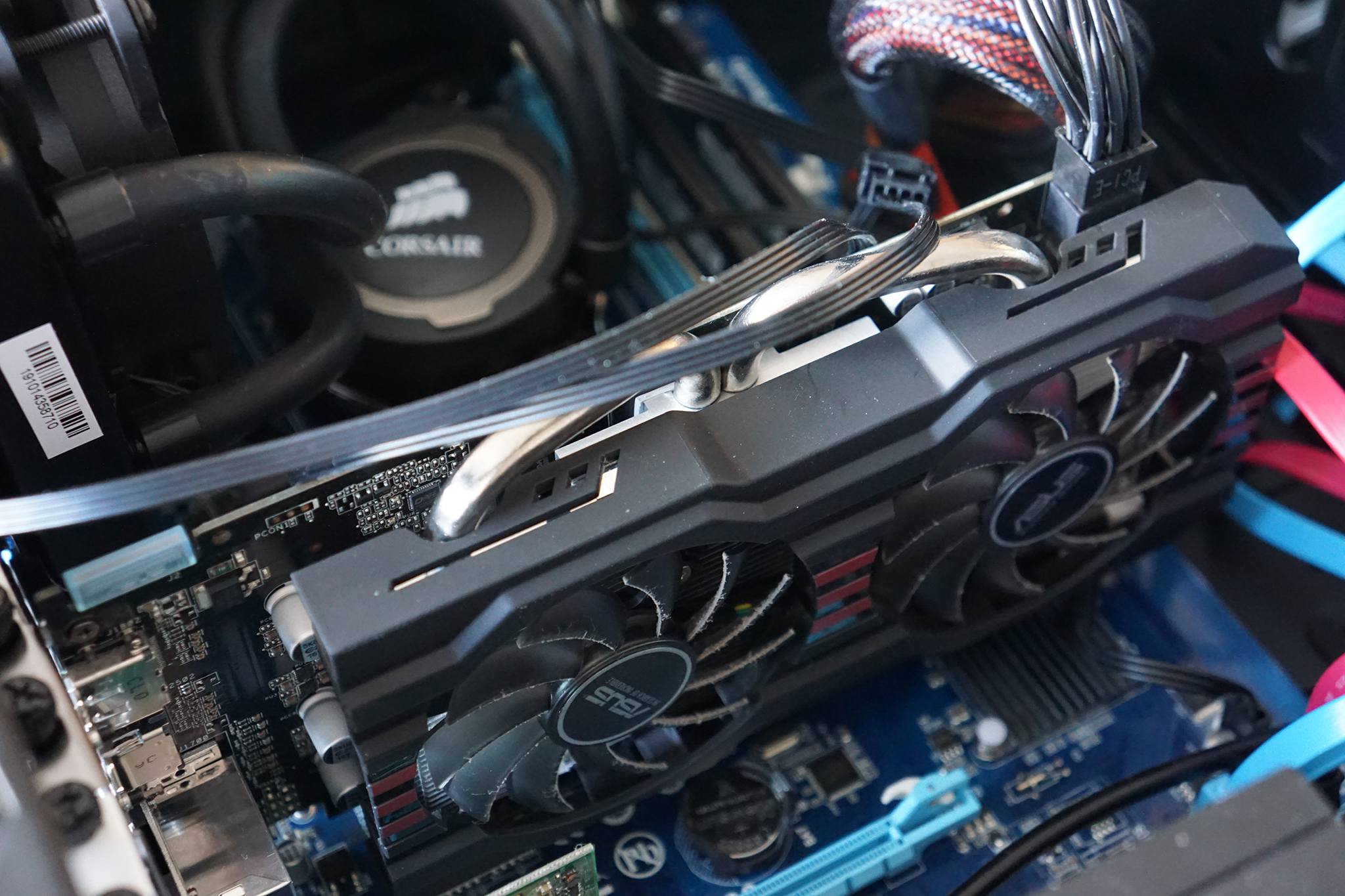

AMD's Radeon group has announced that High Dynamic Range (HDR) displays will be fully supported in 2016, both by select graphics cards already on the market and by those released next year. AMD is counting on the technology to deliver a major step up in visual quality for gamers.

In a recently released video, AMD employees described HDR displays as the next big advancement and outlined how they plan to raise the quality and standard of display devices. According to AMD, current displays can achieve only a fraction of the luminance range that the human eye can perceive.

It's not all about brightness, however. HDR also brings a new color standard, Rec. 2020, which covers 75.8% of the human chromaticity diagram, compared with 35.9% for today's sRGB. This greatly expands the range of visible colors that screens can reproduce.

AMD expects these displays to hit the market in 2016, and the company is making sure its GPUs can drive the more demanding HDR games and movies. Beyond future cards, select models in the current R9 300 series are already HDR compatible.

Source: Twitter

Rich Edmonds was formerly a Senior Editor of PC hardware at Windows Central, covering everything related to PC components and NAS. He's been involved in technology for more than a decade and knows a thing or two about the magic inside a PC chassis. You can follow him on Twitter at @RichEdmonds.