I turned PowerToys into an AI chatbot, and you can too — All your AI chats on your local machine

A third-party plugin for PowerToys' launcher turns it into your own, locally running AI chatbot, even if it feels a little slow right now.

AI is everywhere. We can't escape it, and for all the negativity one could consider, there are plenty of real-world use cases where AI can make the day go a little easier.

Chatbots are one of the most common AI tools out there, but the likes of ChatGPT and Copilot rely on a connection to the cloud.

This isn't the only way to use LLMs, though, with a range of easy-to-use tools now designed to bring the technology to our local machines.

One of these combined with a third-party plugin for PowerToys Run — the best alternative to the Windows 11 Start Menu that you should absolutely try — creates a local AI chatbot accessible with a simple keyboard shortcut.

There's a little work involved in getting it set up, and it's not particularly fast right now, but if nothing else, the proof of concept is excellent. I've tried it and created my own PowerToys Run AI chatbot, and it's an idea I can get behind.

PowerToys + Ollama = local AI chatbot anywhere on your Windows 11 PC

The PowerToys Run aspect of this is purely a front end. It makes use of a third-party plugin to interact with Ollama, which is the tool that will run the LLM.

So you have to install both before you can try this. It's not terribly difficult, and the Ollama GitHub repo is a good place to start. You will need a supported AMD or NVIDIA GPU for best performance, though.

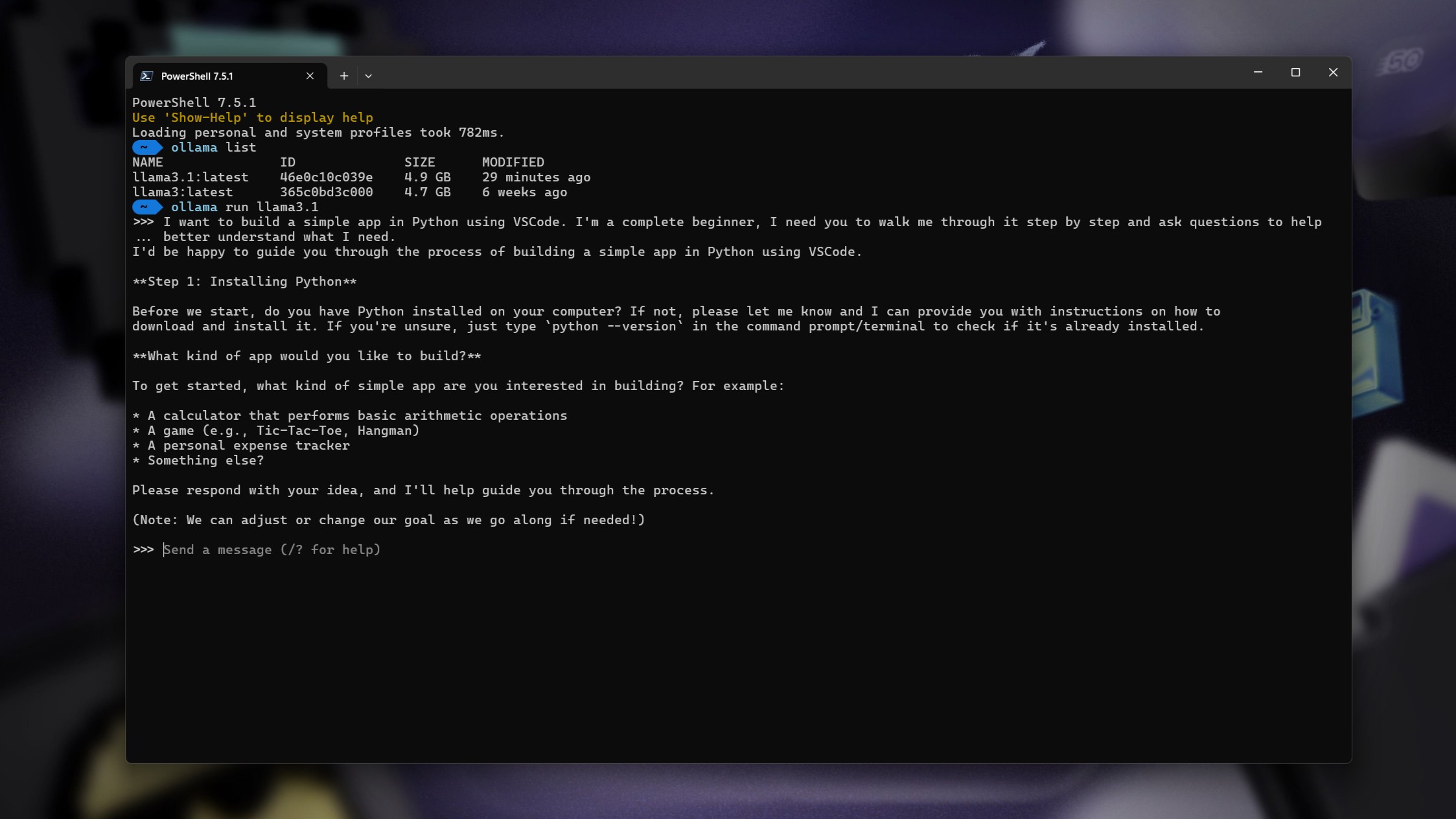

Ollama is a CLI tool by default, meaning you'll need to be inside a terminal to use it. There are options you can use to add a GUI to it, but that's not necessary for what we're doing here.
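Alongside the CLI, Ollama runs a local HTTP server, and that is the kind of interface a front end can talk to. The block below is not the plugin's own code, just a minimal Python sketch of such a request. It assumes Ollama is listening on its default address, http://localhost:11434, and the helper names `build_request` and `ask` are made up for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="llama3.1", url=OLLAMA_URL):
    """Build a POST request asking Ollama for one complete, non-streamed reply."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="llama3.1"):
    """Send the prompt to a running Ollama server and return the reply text."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

With Ollama running and the model downloaded, `print(ask("Why is the sky blue?"))` should print the full answer in one go.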

Once you have it installed, you'll want to download Llama 3.1, which the plugin is set up to use by default. Open PowerShell in a terminal window and enter:

ollama pull llama3.1

Llama 3.1 isn't the latest version, but if you just want to try out the plugin with minimal effort, it's the one to download. You can change the LLM you use with the plugin, though, in the Plugins settings in the PowerToys app. Simply change it to whatever you'd prefer to use.
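If you want to confirm the download worked before touching PowerToys, Ollama's local server can list the models it has installed. Here is a rough Python sketch, again assuming the default http://localhost:11434 address. The function names are hypothetical, and matching on the part of the name before the colon is a simplification of how Ollama tags models.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
TAGS_URL = "http://localhost:11434/api/tags"


def has_model(tags, wanted):
    """Return True if `wanted` matches an installed model, with or without its tag."""
    for entry in tags.get("models", []):
        name = entry.get("name", "")
        # "llama3.1:latest" should match both "llama3.1:latest" and "llama3.1".
        if name == wanted or name.split(":")[0] == wanted:
            return True
    return False


def installed_models(url=TAGS_URL):
    """Fetch the list of locally installed models from a running Ollama server."""
    with urllib.request.urlopen(url) as resp:
        return json.loads(resp.read())
```

Calling `has_model(installed_models(), "llama3.1")` should return `True` once the pull has finished.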

For this, of course, you also need to have the plugin, called PowerToys-Run-LocalLLm, installed. Simply download it from its GitHub repo and extract it to the location on your PC where PowerToys Run plugins live.

For example, on my PC, it followed this path:

%LOCALAPPDATA%\Microsoft\PowerToys\PowerToys Run\Plugins

After that, just make sure you quit and restart PowerToys to make it take effect.
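If you'd rather script the extraction than drag files around, something like the following Python sketch would do it. It assumes you've already downloaded the plugin's zip yourself and that your plugins folder matches the path above. `plugins_dir` and `install_plugin` are names invented for this example.

```python
import os
import zipfile
from pathlib import Path


def plugins_dir(local_app_data=None):
    """Return the PowerToys Run plugins folder under LOCALAPPDATA."""
    base = local_app_data or os.environ.get("LOCALAPPDATA")
    if not base:
        raise RuntimeError("LOCALAPPDATA is not set; are you on Windows?")
    return Path(base) / "Microsoft" / "PowerToys" / "PowerToys Run" / "Plugins"


def install_plugin(zip_path, local_app_data=None):
    """Extract a downloaded plugin zip into the plugins folder and return that folder."""
    target = plugins_dir(local_app_data)
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(target)
    return target
```

You still need to quit and restart PowerToys afterwards for the plugin to show up.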

Easy to use, and a great example of how to integrate AI into using a Windows 11 PC

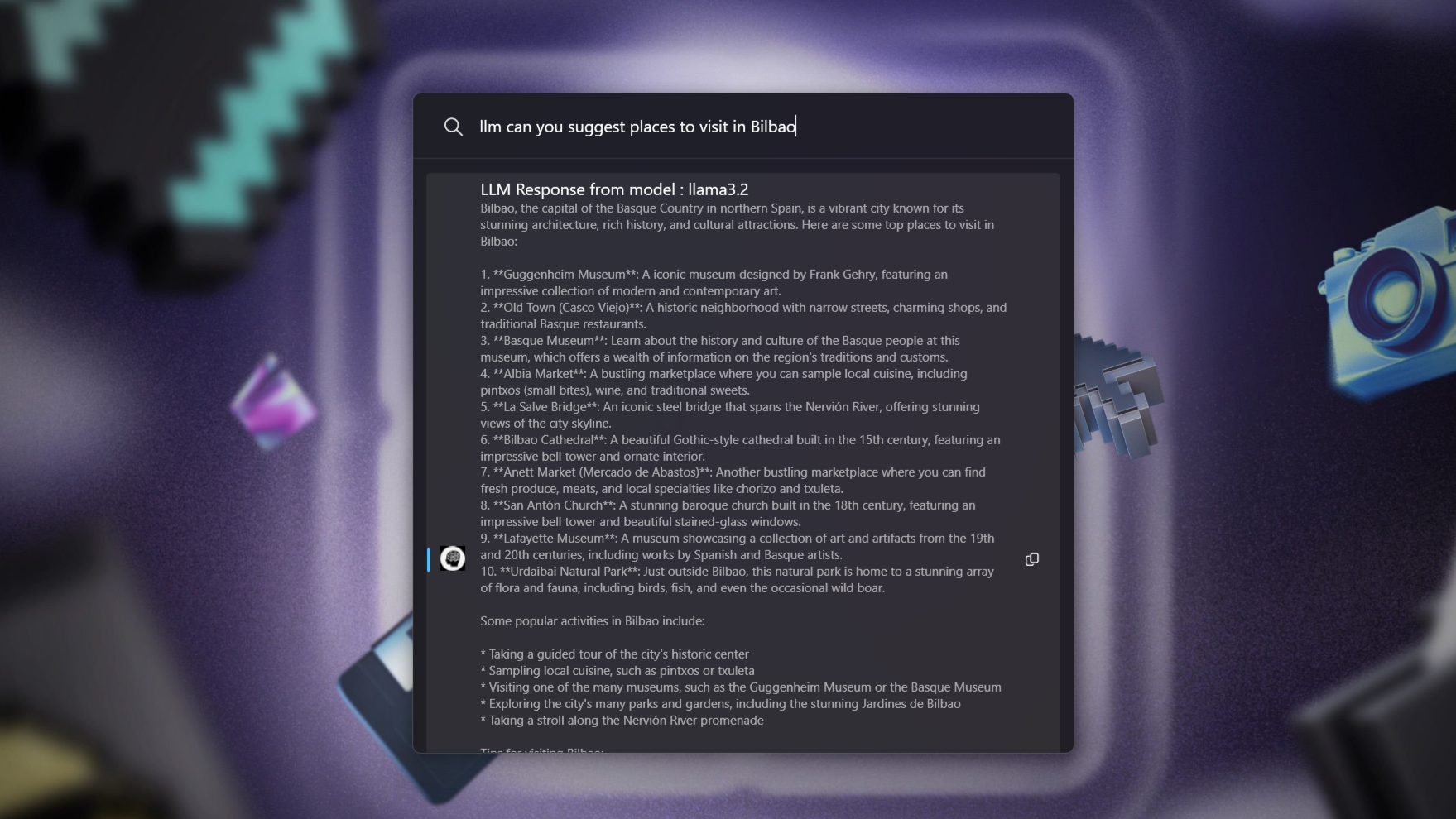

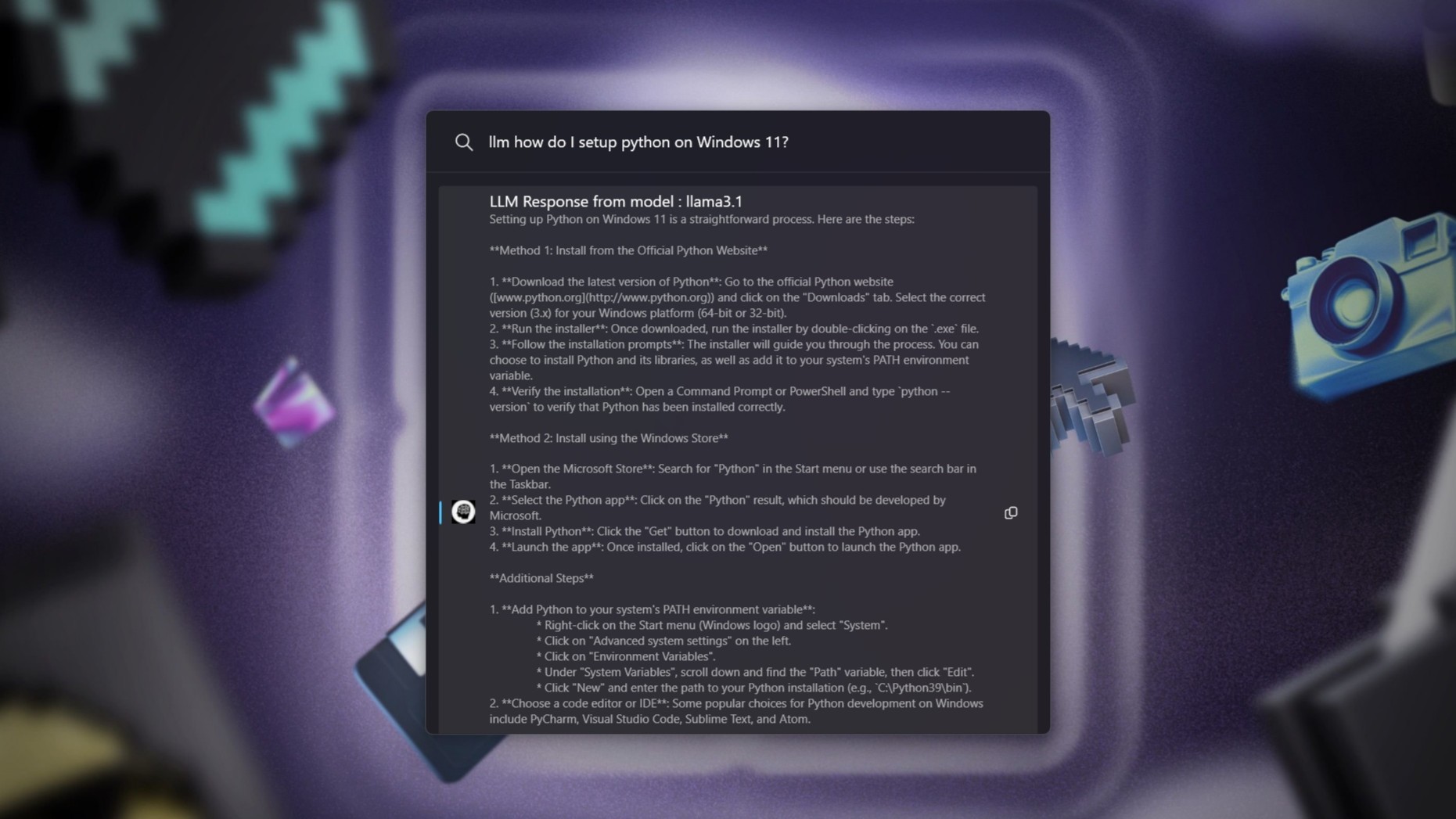

To trigger your LLM of choice in PowerToys Run, you simply type llm and then your query afterwards. If it's a short answer, it won't take too long to generate. If it's longer, then you'll be in for a bit of a wait.

As far as I can tell, this is because it prints the entire response at once. If you use Ollama in the terminal, you'll see it produced word by word. So the timings probably aren't much different; you just don't see anything happening while the answer is being generated.

As such, your brain will tell you it's being slow. Which it kind of is, but not without reason. And naturally, your hardware will come into play. I have an RTX 5080 I can use Ollama with. Less powerful hardware will increase your wait, no doubt.
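To make the word-by-word versus all-at-once difference concrete: when streaming is on, Ollama's local API sends one small JSON object per line, each carrying a fragment of the answer. The Python sketch below shows the two ways a front end could treat those lines. Whether the plugin actually works like this is a guess, and the function names are hypothetical.

```python
import json


def stream_tokens(lines):
    """Yield text fragments from streamed output, one JSON object per line."""
    for raw in lines:
        if not raw.strip():
            continue  # skip blank keep-alive lines
        chunk = json.loads(raw)
        yield chunk.get("response", "")
        if chunk.get("done"):
            break  # the final object marks the end of the answer


def whole_reply(lines):
    """Collect every fragment first, then return the answer as a single string."""
    return "".join(stream_tokens(lines))
```

A terminal can print each fragment from `stream_tokens` as it arrives, which feels fast. A front end that waits for `whole_reply` shows nothing until the last fragment lands, even though the total time is roughly the same.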

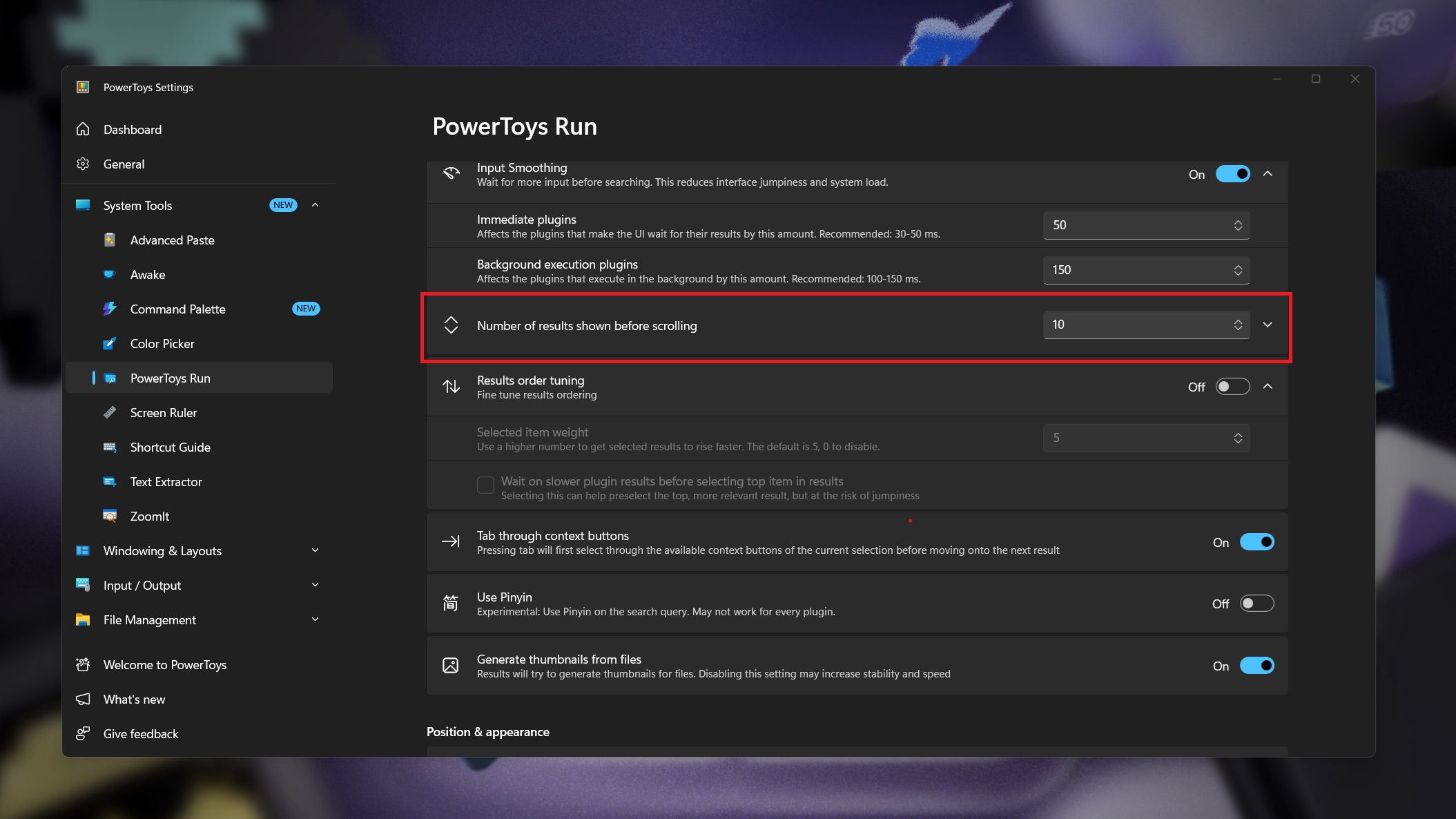

The other issue you'll run into out of the box is that your response, if it's a lengthy one, will get cut off. The plugin doesn't support scrolling, so you'll need to manually make sure PowerToys Run has a bigger box.

In the settings in the main PowerToys app, you'll need to increase the option labelled "Number of results shown before scrolling." This plugin still won't scroll, but it will give you a larger box for the results.

The images in this post are with this setting at 10. The higher the number, the more space you'll have. If the result is still cut off, you can at least click the copy button to get the entire thing and then paste it into a text editor.

Overall, though, this is exactly the type of setup I'd like to use on a daily basis on Windows 11. Microsoft makes PowerToys, so why not get Copilot built in? It's being stuffed in everything else, after all.

The point is more that PowerToys Run is a tool I use probably more than any other on Windows 11. Instead of taking me out of my workflow to use AI, this integrates it. With the added bonus that it's running locally, not in someone else's cloud. I can get right behind that.

Richard Devine is the Managing Editor at Windows Central with over a decade of experience. A former Project Manager and long-term tech addict, he joined Mobile Nations in 2011 and has been found in the past on Android Central as well as Windows Central. Currently, you'll find him steering the site's coverage of all manner of PC hardware and reviews. Find him on Mastodon at mstdn.social/@richdevine
