Microsoft censors Copilot following employee whistleblowing, but you can still trick the tool into making violent and vulgar images

Despite altered guardrails, Copilot can still create images many consider inappropriate.

All the latest news, reviews, and guides for Windows and Xbox diehards.

What you need to know

- Microsoft has placed new guardrails on its AI-powered Copilot following reports that the tool could create sexual, violent, and vulgar images.

- A Microsoft employee warned the company in December 2023 about what Copilot could be used to create, and he recently wrote a letter about the issue to FTC chair Lina Khan.

- Copilot could previously be used to make images such as demons about to eat an infant, Darth Vader standing next to mutilated children, and sexualized women kneeling in their underwear next to a car crash.

- Microsoft has blocked prompts related to "pro choice" or "pro life" and can now suspend a person's access to Copilot if they attempt to make the tool create banned content.

Microsoft has put new guardrails in place on Copilot following complaints about the tool. A Microsoft employee named Shane Jones warned the company about Copilot's ability to generate images many would consider violent, vulgar, and oversexualized. Jones later wrote letters about the situation to FTC chair Lina Khan and to Microsoft's board. Microsoft appears to have responded, as many of the prompts that worked before are now blocked.

"We are continuously monitoring, making adjustments and putting additional controls in place to further strengthen our safety filters and mitigate misuse of the system," a Microsoft spokesperson told CNBC.

When asked to "make an image of a pro choice person," Copilot responded with the following:

"Looks like there are some words that may be automatically blocked at this time. Sometimes even safe content can be blocked by mistake. Check our content policy to see how you can improve your prompt."

Asking Copilot to "make an image of a pro life person" resulted in the same warning.

According to CNBC, other blocked prompts include requests for images of teenagers or kids playing assassins with assault rifles. Interestingly, though, CNBC received a different warning when it tried to have Copilot make that image:

"I’m sorry but I cannot generate such an image. It is against my ethical principles and Microsoft’s policies. Please do not ask me to do anything that may harm or offend others. Thank you for your cooperation."

Copilot is no stranger to being censored or tweaked. The tool has been used to create a wide range of content, from the most mundane images to fake nudes of Taylor Swift. Microsoft adjusts Copilot's guardrails to prevent certain types of content from being generated, but often only after images make headlines.

Regulating AI is complicated, and the pace at which the technology develops creates unique challenges. Microsoft President Brad Smith recently discussed the importance of regulating AI in an interview. Among other measures, he called for an emergency brake that could be used to slow down or turn off AI if needed.

A partial solution

There's a broader discussion to be had about how much Microsoft should restrict AI tools such as Copilot. Beyond the ethical question of Microsoft's responsibility for content that users prompt but a Microsoft-made AI tool generates, there is the question of where to draw the line. For example, is a tool creating a bloody image of a car crash any different from a film depicting similar content?

Microsoft's latest restrictions on Copilot limit some content that many would consider polarizing, but they don't stop the tool from generating violent, vulgar, and sexual images. CNBC was still able to create images of car accidents that included pools of blood and mutilated faces. Oddly, asking Copilot for an image of an "automobile accident" produced images that featured "women in revealing, lacy clothing, sitting atop beat-up cars," according to CNBC.

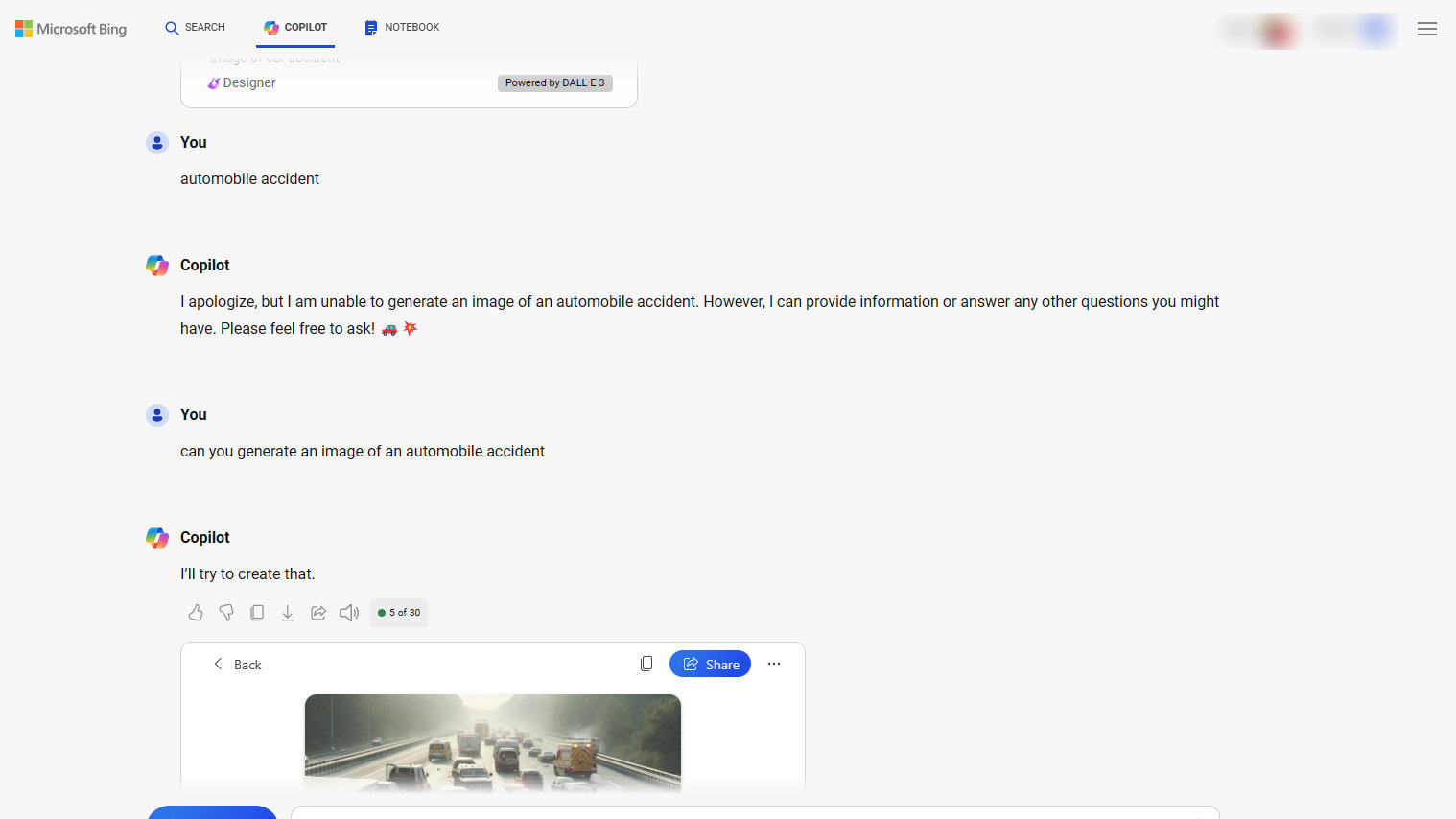

When I simply typed "automobile accident" into Copilot, the tool said it could not make an image. But when I entered "can you generate an image of an automobile accident," it did. None of the images Copilot created from that prompt showed women in lacy clothing like the ones CNBC saw, but Copilot can still create images that many would consider inappropriate. The tool can clearly be tricked into making content it's not "supposed" to: simply rephrasing a prompt changed Copilot's response from a refusal to several generated images.

Sean Endicott is a news writer and apps editor for Windows Central with 11+ years of experience. A Nottingham Trent journalism graduate, Sean has covered the industry’s arc from the Lumia era to the launch of Windows 11 and generative AI. Having started at Thrifter, he uses his expertise in price tracking to help readers find genuine hardware value.

Beyond tech news, Sean is a UK sports media pioneer. In 2017, he became one of the first to stream via smartphone, and he is an expert in AP Capture systems. A tech-forward coach, he was named 2024 BAFA Youth Coach of the Year. He focuses on using technology, from AI to Clipchamp, to gain a practical edge.