ChatGPT Isn’t the Only Game in Town—Here’s Why Local AI Might Be Better (and How to Use It on Your PC)

If you like using AI, here are some good reasons to consider trying it out on your local PC rather than relying on the 'big tech' providers.



It's fair to say that for many people, the first thought when they hear "AI" is "ChatGPT." Or possibly Copilot, Google Gemini, or Perplexity: the online chatbots that dominate the headlines. There's a good reason for this, but AI goes far beyond that.

Whether it's the on-device features of a Copilot+ PC, editing video, transcribing audio, running a local LLM, or even just making your next Microsoft Teams meeting better, there are a ton of use cases for AI that don't require these cloud-based tools.

So, here are five reasons I've thought of as to why you'd want to use local AI over relying on the online options.

But first, a caveat

Before getting into it, I do need to highlight the elephant in the room: hardware. If you don't have suitable hardware, the unfortunate truth is that you can't just dive in and test drive one of the latest local LLMs, for example.

Copilot+ PCs have a raft of local features you can use, in part, because of the NPU. Not all AI requires an NPU, but the processing power has to come from somewhere. Before attempting to use any local AI tool, be sure to make yourself familiar with the requirements.
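As a rough way to sanity-check those requirements for a local LLM, here is a minimal sketch that estimates how much memory a model's weights need. It assumes the common rule of thumb of parameters multiplied by bits per weight, treats the "20B" and "120B" in model names as round parameter counts, and uses a made-up 1.2x `overhead` factor for context and runtime buffers. Treat the result as a ballpark, not a guarantee.

```python
def estimate_weight_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    # billions of parameters * bits per weight / 8 bits per byte = billions of bytes
    return params_billion * bits_per_weight / 8


def fits_in_vram(params_billion: float, bits_per_weight: float,
                 vram_gb: float, overhead: float = 1.2) -> bool:
    """Rough check. `overhead` is an assumed headroom factor, not a measured value."""
    return estimate_weight_gb(params_billion, bits_per_weight) * overhead <= vram_gb


# Round-number examples at 4-bit quantization against a 16 GB graphics card.
for name, size_b in [("20B model", 20), ("120B model", 120)]:
    need = estimate_weight_gb(size_b, 4)
    verdict = "fits" if fits_in_vram(size_b, 4, vram_gb=16) else "does not fit"
    print(f"{name} at 4-bit: ~{need:.0f} GB of weights, {verdict} in 16 GB of VRAM")
```

A "does not fit" result doesn't always mean a model won't run at all, since some runtimes can spill over into system RAM, but you should expect it to be much slower.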

1. You don't have to be online

This is the obvious one. Local AI runs on your PC. ChatGPT and Copilot require a constant web connection to operate, even though you have the Copilot app built into Windows 11.

Sure, connectivity is better in 2025 than it's ever been (you can even get Wi-Fi on a plane), but it's still not ubiquitous. Without a connection, you cannot use these tools. By contrast, you can use OpenAI's gpt-oss:20b LLM on your local machine completely offline. It's not necessarily as fast or as capable as the newly launched GPT-5, but you can use it any time, any place.
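To make the offline point concrete, here is a minimal sketch of sending a prompt to a model served by Ollama on your own PC. It assumes Ollama is installed and running on its default local port, and that you already downloaded the model while you were online (for example with `ollama pull gpt-oss:20b`). The helper names `build_generate_payload` and `ask_local_model` are my own, for illustration only.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local address


def build_generate_payload(prompt: str, model: str = "gpt-oss:20b") -> dict:
    """Build the JSON body for a single, non-streaming completion."""
    return {"model": model, "prompt": prompt, "stream": False}


def ask_local_model(prompt: str, model: str = "gpt-oss:20b",
                    timeout: float = 300.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    body = json.dumps(build_generate_payload(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    # The request only goes to localhost, so no internet connection is needed.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["response"]
```

With the server running, something like `print(ask_local_model("Explain what an NPU is in two sentences."))` should work with your Wi-Fi switched off, because nothing in the request leaves your machine.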


This also applies to other tools, such as image generation. You can run Stable Diffusion offline on your PC, whereas to get an image from an online tool you need to be, you guessed it, online. The AI tools in the DaVinci Resolve video editor work offline, leveraging your local machine.

Local AI is therefore entirely portable, and ultimately gives you better ownership. You're not at the mercy of server capacity and stability, usage limits, or terms of service. You're also not at the mercy of these companies changing their models and taking away access to older ones you may prefer, which is a current point of contention for many with the switch to GPT-5.

2. Better privacy controls offline

This is an extension of the first point, but important enough to highlight on its own. When you're connecting to an online tool, you're sharing data with a big computer in the cloud. As we've seen recently, albeit now reversed, shared ChatGPT conversations were being indexed in Google Search results under certain conditions.

You simply don't have the same control when you're using an online AI tool versus using one local to your machine. Local AI means your data never leaves your machine, which is particularly important if you handle confidential or sensitive information, where security and privacy are paramount.

While ChatGPT, for example, has a temporary chat mode, the data still leaves your machine. Local AI keeps it all offline. It's also much easier to comply with data sovereignty regulations and regional data protection rules.

It should be remembered, though, that if you happen to push an LLM from your machine back up to somewhere like Ollama, you will be sharing whatever changes you've made. Likewise, if you were to enable web search on a local model, such as gpt-oss:20b or 120b, you will also give up a little privacy, since your search queries leave your machine.

3. Cost and environmental impact

To run massive LLMs, you need a massive amount of energy. That's as true at home as it is using ChatGPT, but it's easier to control both your costs and your environmental impact at home.

ChatGPT has a free tier, but it isn't really free. A massive server somewhere is processing your sessions, using enormous amounts of power, and that has an environmental cost. Energy use for AI and its impact on the environment will continue to be an ongoing issue to solve.

By contrast, when you're running an LLM locally, you're in control. In an ideal world I'd have a home with a roof full of solar panels, filling up a giant battery, that would help supply power for my various PCs and gaming devices. I don't have that, but I could. It's only an example, but it makes the point.

The cost impact is easier to visualize. The free tiers of online AI tools are good, but you never get the best. Why else do OpenAI, Microsoft, and Google all have paid tiers that give you more? ChatGPT Pro is a whopping $200 a month. That's $2,400 a year just to access its best tier. If you're accessing something like the OpenAI API, you're paying for it based on how much you use.

By contrast, you could run an LLM on your existing gaming PC. Like I do. I'm fortunate enough that I have a rig that contains an RTX 5080 with 16GB of VRAM, but that means when I'm not gaming, I can use the same graphics card for AI with a free, open-source LLM. If you have the hardware, why not use it over paying more money?!
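To put rough numbers on that, here is a small sketch comparing the yearly cost of the subscription quoted above with the electricity needed to run a GPU you already own. The $200 monthly fee comes from this article. The wattage, hours per day, and price per kWh are hypothetical placeholders, so swap in your own figures.

```python
def annual_subscription_cost(monthly_fee: float) -> float:
    """Twelve months of a flat monthly fee."""
    return monthly_fee * 12


def annual_electricity_cost(watts: float, hours_per_day: float,
                            price_per_kwh: float) -> float:
    """Yearly electricity cost for hardware drawing `watts` while in use."""
    kwh_per_year = watts / 1000 * hours_per_day * 365
    return kwh_per_year * price_per_kwh


subscription = annual_subscription_cost(200)  # ChatGPT Pro price quoted above

# Hypothetical inputs: 300 W under load, 2 hours a day, $0.15 per kWh.
electricity = annual_electricity_cost(watts=300, hours_per_day=2, price_per_kwh=0.15)

print(f"Subscription: ${subscription:,.0f} per year")
print(f"Local electricity (hypothetical inputs): ${electricity:,.2f} per year")
```

This deliberately leaves out the price of the hardware itself, which only makes sense if, as in my case, you already have the PC for other reasons.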

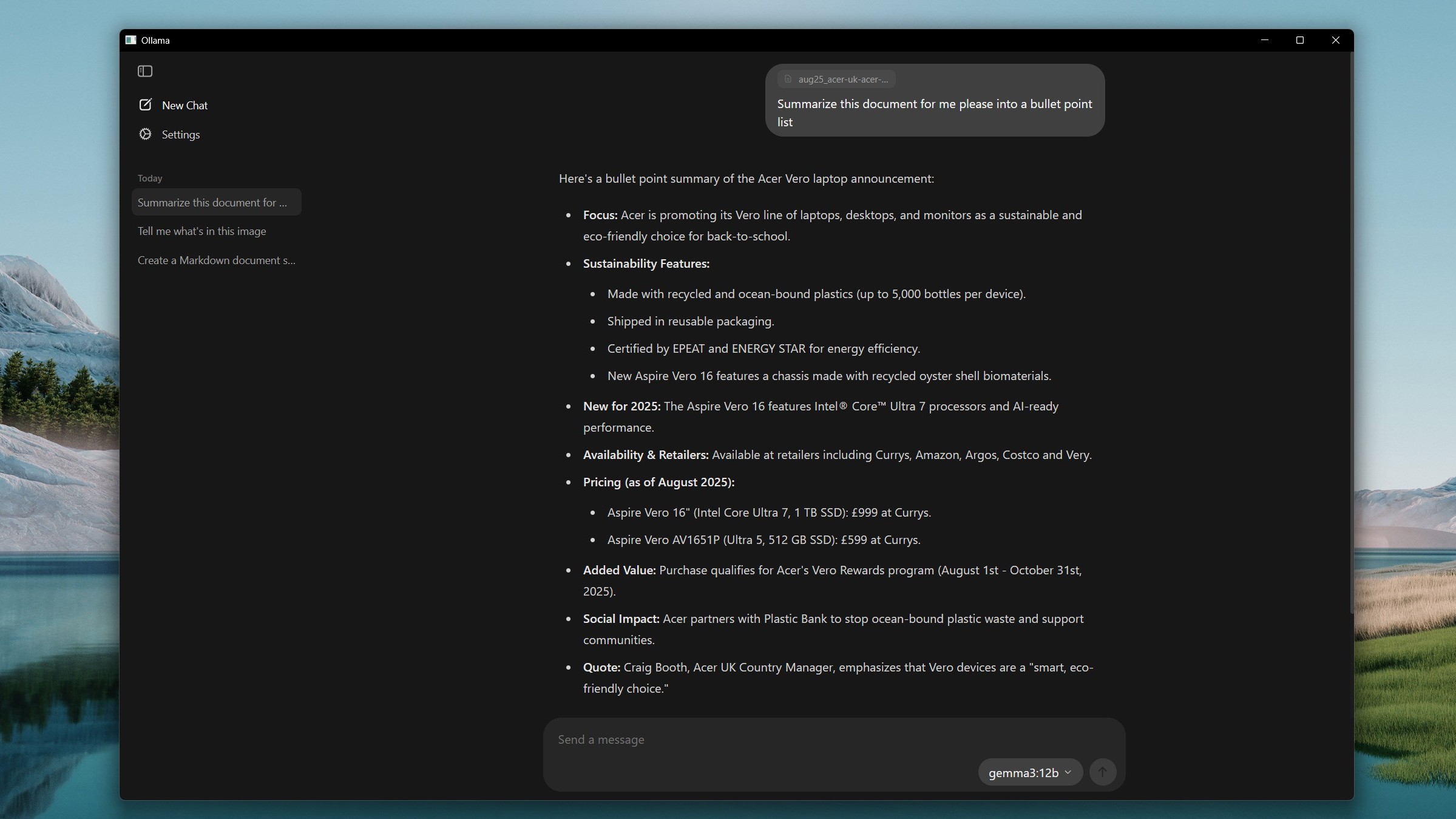

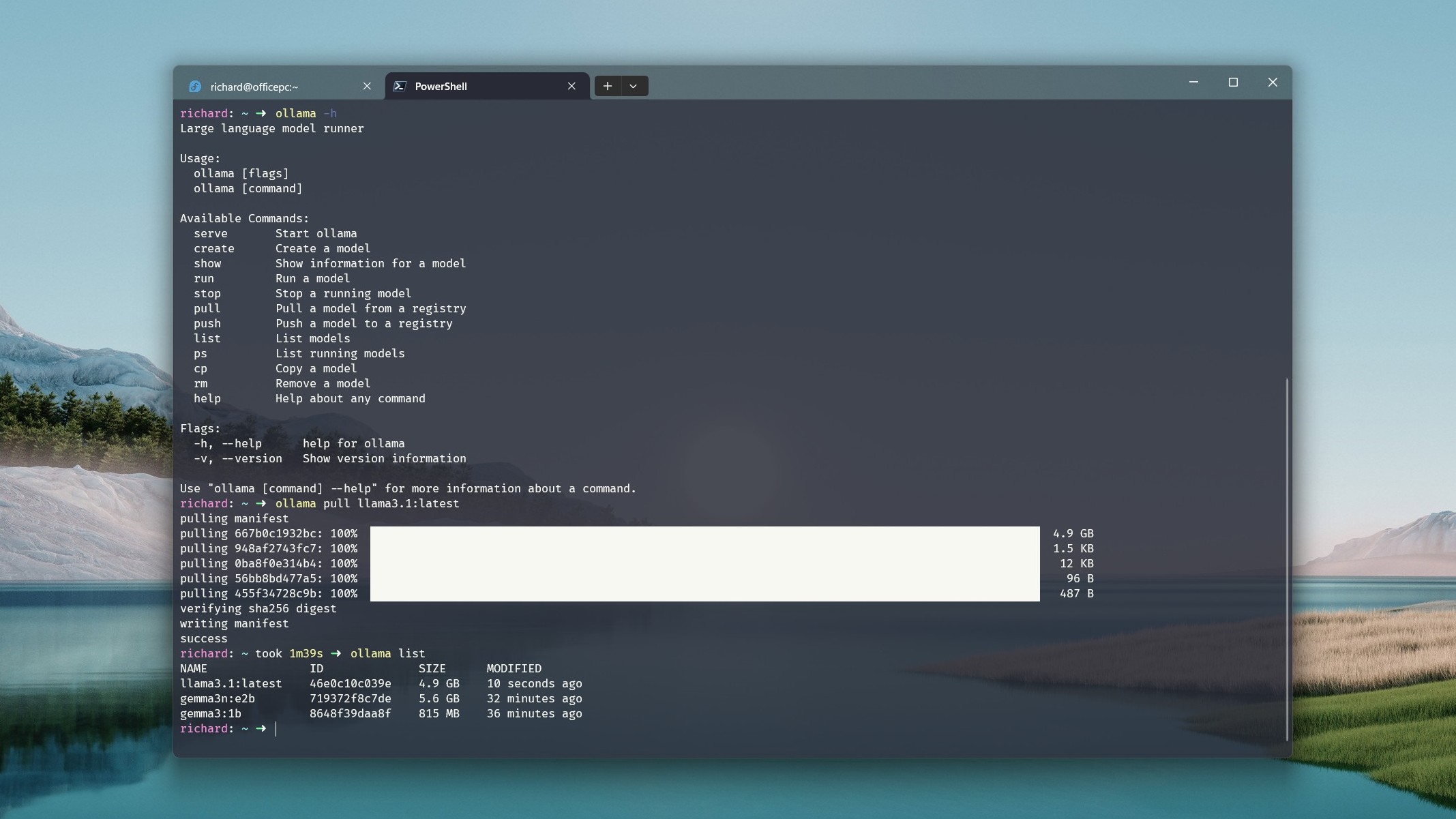

4. Integrating LLMs with your workflow

This is one that I'm still only dabbling with, entirely because I'm a coding noob. But with Ollama on my PC and an open-source LLM, I can integrate these with VS Code on Windows 11 and have my own AI coding assistant. All powered locally.

There's also some crossover with the other points on this list. GitHub Copilot has a free tier, but it's limited. To get the best, you have to pay. And, to use it, or regular Copilot, ChatGPT, or Gemini, you need to be online. Running a local LLM gets around all of this, while opening up the possibility of better implementing it into your workflow.

It's the same with non-chatbot related AI tools, too. While I've been a critic of Copilot+ not really being good enough yet to justify the hype, its whole purpose is leveraging your PC to integrate AI into your daily workflow.

Local AI also gives you more freedom over exactly what you're using for your needs. Some LLMs will be better for coding than others, for example. Using an online chatbot, you're using the models they're presenting to you, not one that has been fine-tuned for a more specific purpose. But with a local LLM, you also have the ability to fine-tune the model yourself.

Ultimately, with local tools you can build your own workflow, tailored to your specific needs.
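As one example of what a home-built workflow step might look like, here is a minimal sketch of a script that asks a local model to review a snippet of code through Ollama's chat endpoint. It assumes Ollama is running on its default port with the model already downloaded. The function names and the wording of the prompts are my own inventions, and changing the `model` argument is how you'd try a model better suited to coding.

```python
import json
import urllib.request

CHAT_URL = "http://localhost:11434/api/chat"  # Ollama's default local chat endpoint


def build_review_messages(code: str, language: str = "Python") -> list:
    """Wrap a code snippet in a system + user message pair for a review request."""
    return [
        {"role": "system", "content": f"You are a concise {language} code reviewer."},
        {"role": "user", "content": f"Review this code and suggest fixes:\n\n{code}"},
    ]


def review_code(code: str, model: str = "gpt-oss:20b",
                language: str = "Python") -> str:
    """Ask the local model for a review and return its reply text."""
    payload = {
        "model": model,  # swap in whichever local model you prefer for coding
        "messages": build_review_messages(code, language),
        "stream": False,
    }
    request = urllib.request.Request(
        CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=300) as response:
        reply = json.loads(response.read().decode("utf-8"))
    return reply["message"]["content"]
```

From there, calling `review_code(open("script.py").read())` on a file of your own is a one-liner, and the code you're reviewing never leaves your PC.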

5. Education

I'm not talking about school-based education here, I'm talking about teaching yourself some new skills. You can learn far more about AI and how it works, as well as how it can work for you, from the comfort of your own hardware.

ChatGPT has that 'magic' about it. It can do all these amazing things just by typing some words into a box inside an app or a web browser. It is fantastic, no doubt, but there's a lot to be said for learning more about how the underlying technology works: the hardware and resources it needs, building your own AI server, fine-tuning an open-source LLM.

AI is very much here to stay, and you could do a lot worse than setting up your own playground in which to learn more about it. Whether you're a hobbyist or a professional, using these tools locally gives you the freedom to experiment, to use your own data, and to do it all without relying on a single model or locking yourself into a single company's cloud or subscription.

There are drawbacks, of course. Unless you have an absolutely monstrous hardware setup, performance will be one. You can easily run smaller LLMs, such as Gemma, Llama, or Mistral, locally and get great performance. But the largest open-weight models, such as OpenAI's new gpt-oss:120b, will not run well even on today's best gaming PCs.

Even gpt-oss:20b will be slower (thanks partly to its reasoning capabilities) than using ChatGPT on OpenAI's mega-servers.
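If you want to measure that speed on your own hardware rather than take my word for it, here is a small sketch. It assumes you're using Ollama, whose non-streaming responses include an `eval_count` field (tokens generated) and an `eval_duration` field (generation time in nanoseconds). The sample numbers are made up for illustration and are not a benchmark.

```python
def tokens_per_second(response: dict) -> float:
    """Generation speed computed from an Ollama response's timing fields."""
    # eval_count: tokens generated; eval_duration: generation time in nanoseconds
    seconds = response["eval_duration"] / 1e9
    return response["eval_count"] / seconds


# Made-up numbers for illustration, not a real benchmark.
sample = {"eval_count": 100, "eval_duration": 2_000_000_000}
print(f"{tokens_per_second(sample):.1f} tokens/s")
```

Running the same prompt against a couple of different models and comparing the results is a quick way to see what your PC can comfortably handle.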

You also don't get all of the latest and greatest models, such as GPT-5, right away to use at home. There are exceptions, such as Llama 4, which you can download yourself, but you'll need a lot of hardware to run it until smaller versions are produced. Older models have older knowledge cutoff dates, too.

But despite all this, there are plenty of compelling reasons to try local AI over relying on the online alternatives. Ultimately, if you have some hardware that can do it, why not give it a try?

Richard Devine is the Managing Editor at Windows Central with over a decade of experience. A former Project Manager and long-term tech addict, he joined Mobile Nations in 2011 and has been found in the past on Android Central as well as Windows Central. Currently, you'll find him steering the site's coverage of all manner of PC hardware and reviews. Find him on Mastodon at mstdn.social/@richdevine
