AMD graphics cards see huge gains with DX12, Nvidia not so much


Upcoming strategy title "Ashes of the Singularity" released its benchmarking software a few days ago, offering one of the first real-game looks at DirectX 12 (DX12) performance as the industry begins its transition to the new API.

DirectX is an application programming interface (API) that allows developers to tap into a device's hardware to produce complex 3D graphics. DX12 is the latest version and promises more efficient CPU utilization, reduced driver overhead, and various other benefits. Microsoft's lead developer Max McMullen said one of DX12's goals was to bring "console-level efficiency" to PCs. Console hardware is focused entirely on games, whereas PCs are often running other tasks even while a game is playing.

In this context, DX12 promises significant gains for PC games across the board. However, the Ashes of the Singularity benchmark is proving controversial for Nvidia, whose high-end cards so far aren't seeing the benefits.

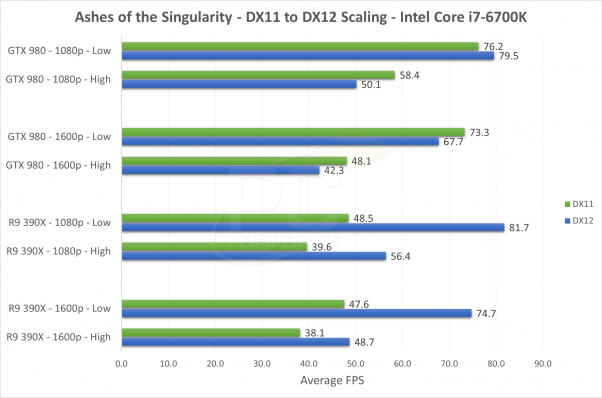

This graph shows DX12's performance (blue) against the older DX11's performance (green) using Nvidia's GTX 980 and AMD's R9 390X.

PC Perspective has tested various hardware configurations with the new benchmark.

Nvidia's GTX 980 is one of the company's most powerful GPUs, yet the benchmark shows its performance dropping slightly under DX12 compared to DX11. AMD's R9 390X, however, shows massive gains, in some cases upwards of 80%, pushing it ahead of the GTX 980. The disparity is problematic for Nvidia, as the R9 390X is usually cheaper than the GTX 980.

Nvidia dismissed the Ashes of the Singularity benchmark, tersely describing it as inaccurate:


"We believe there will be better examples of true DirectX 12 performance and we continue to work with Microsoft on their DX12 API, games and benchmarks. The GeForce architecture and drivers for DX12 performance is second to none - when accurate DX12 metrics arrive, the story will be the same as it was for DX11."

Oxide, the developer of Ashes of the Singularity, defended its code in a detailed blog post:

"It should not be considered that because the game is not yet publicly out, it's not a legitimate test," Oxide's Dan Baker said. "While there are still optimisations to be had, the Ashes of the Singularity in its pre-beta stage is as or more optimised as most released games. What's the point of optimising code six months after a title is released, after all?"

This data could partly reflect AMD's historically weaker DirectX 11 performance compared to Nvidia, which would leave AMD more room to gain under DX12. However, Intel's integrated GPUs have seen similar gains: Intel has published a video demonstrating the benefits of the tech for lower-end GPU setups, such as the Surface Pro 3.

DX12 is proving to be a controversial topic, particularly if the disparity between Nvidia and AMD persists. DX12 is also heading to Xbox One, although it remains unclear whether it will bring significant gains there, since console games are already heavily optimized.

Are you a DirectX buff? How do you feel about the data? Hit the comments!

Source: PC Perspective

Jez Corden is the Executive Editor at Windows Central, focusing primarily on all things Xbox and gaming. Jez is known for breaking exclusive news and analysis as it relates to the Microsoft ecosystem, all while being powered by tea. Follow on X.com/JezCorden and tune in to the XB2 Podcast, all about, you guessed it, Xbox!