Microsoft's moral stance on facial recognition is good for everyone (especially Microsoft)

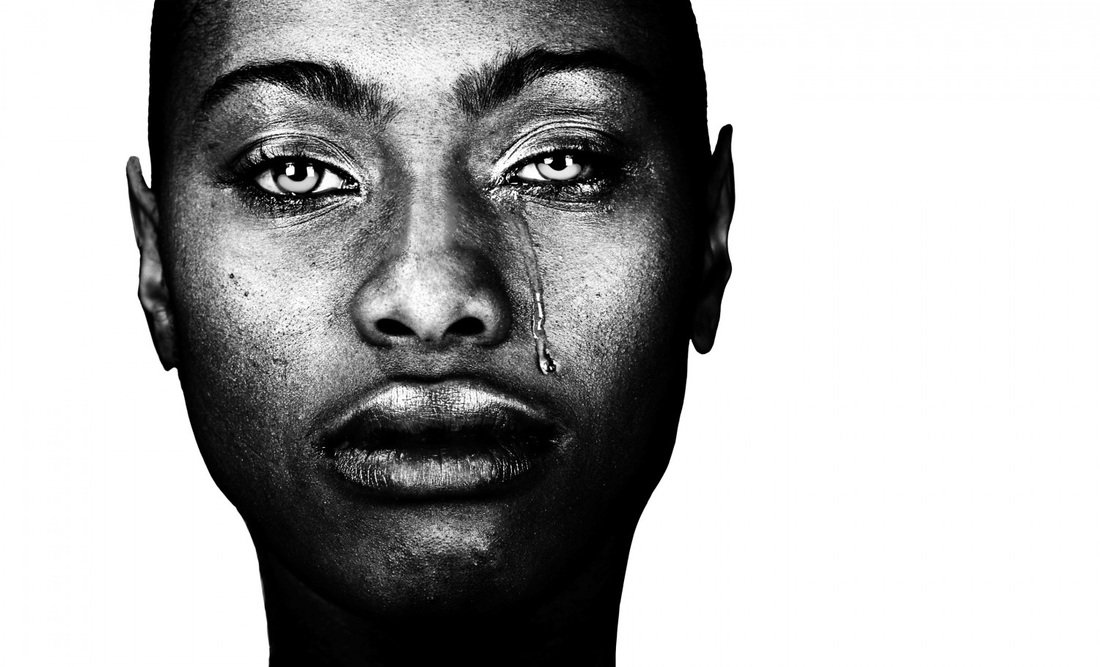

AI-driven facial recognition systems have failed to recognize women and dark-skinned people accurately. Microsoft is doing something about that – for more reasons than one.


In recent years, AI-driven facial recognition systems have made the news for failing to recognize dark-skinned people and women as consistently as they recognize white men. IBM, Microsoft, Google and other companies are building this technology into their products, selling it to other companies and hoping to deploy it across governments, municipalities and the private sector. All of them have felt the impact of the technology's shortcomings.

Google's system identified African Americans as primates, forcing the company to remove certain content from its system to prevent the association from recurring. A recent study showed that IBM's and Microsoft's facial recognition tech was far less accurate at recognizing women and minorities than white men.

To prevent the bias and discrimination these systems could inflict on society if left unchecked, Microsoft is leading a call for government regulation of the technology. This commitment isn't only about doing the right thing, however. Microsoft stands to make a lot of money if governments, the tech industry, the private sector and consumers see it as a trusted leader and provider of AI-driven facial recognition camera technology.

Immature tech is bad for business

During Microsoft's Build 2017 developer conference, the company introduced its AI-driven camera technology as part of its edge computing strategy. The technology can recognize people, activities, objects and more, and can act proactively based on what it sees. In one demo, the system alerted a worker on a job site that a colleague needed a tool the worker was standing near. In a hospital demo, the system, connected to a patient's data, alerted staff to his needs as it "watched" him walking, distressed, down a hallway.

The strength of this system is that it is software-based and can be deployed across camera systems already in use by businesses, schools, governments, municipalities and more.

Microsoft seeks to dominate the industry as a platform company by providing industry-standard software and tools like Office and Azure to businesses and governments to help them "achieve more." Its AI-driven camera tech is simply another platform the company hopes to sell to those customers, so that as they "achieve more," Microsoft gains market dominance and makes more money. Because the technology is still relatively young, however, bad press is bad for business.

Bias in, bias out

The biases currently reflected in AI-driven facial recognition systems are likely the result of "biases," perhaps unintentional, inherent in the machine learning processes used to train them. White males make up the majority of people working in IT, so the teams creating these systems have relatively homogeneous perspectives. As a result, the breadth of input, range of considerations, variety of models and foresight about the technology's impact on particular groups that a more diverse team would have contributed to building and training these systems were lost.


Microsoft is aware of the immediate social implications, and ultimately the long-term financial impact, of deploying its facial recognition tech with its current limitations. It has therefore turned down two potentially lucrative (in the short term) sales. The company declined to sell the technology to a California law enforcement agency for use in cars and body cams because the system, after running face scans, would likely cause women and minorities to be held for questioning more often than white people. Microsoft President Brad Smith acknowledged that because the AI was trained primarily on white men, it has a higher rate of mistaken identity for women and minorities. Microsoft also refused to deploy its facial recognition tech in the camera systems of a country that the nonprofit Freedom House deemed not free.

Microsoft's refusal to make these sales is consistent with its stance articulated by Smith in a blog post:

We don't believe that the world will be best served by a commercial race to the bottom, with tech companies forced to choose between social responsibility and market success.

I'm confident that altruistic considerations are contributing to Microsoft's "high-road" approach to facial recognition tech. I also believe the company realizes that if other industry players take a less socially conscious position, the negative fallout could hurt the tech's acceptance and, ultimately, Microsoft's goal of providing a platform with a wide and lucrative range of potential applications.

One bad Apple ruins facial recognition for the bunch

Microsoft wants high standards of quality and accuracy for facial recognition across the entire industry so that potential customers, including governments, businesses and the public, will have confidence in the tech. The company has made progress: an evaluation by the National Institute of Standards and Technology (NIST) ranked it among the top developers of facial recognition tech. Even so, Microsoft realizes that for the tech to be accepted, there must be uniform standards that preclude the biases observed in early systems. This is why Microsoft is taking the lead in petitioning the government to regulate the tech.

Microsoft has proactively identified three areas it hopes regulation will address:

- Bias and discrimination

- Intrusion into individual privacy

- Government mass surveillance encroaching on democratic freedoms

In 2017 I discussed many of these same concerns. Intelligent cameras deployed in the private and public sectors could enable the tracking of individuals and the logging of their behavior and actions, giving unprecedented power to governments, employers or malicious actors with access to this data.

Reading between the PR lines

Interestingly, despite Microsoft's gung-ho advocacy for user privacy, Smith is somewhat lenient regarding consumer consent and facial recognition tech. He said:

[In Europe], consent to use facial recognition services could be subject to background privacy principles, such as limitations on the use of the data beyond the initially defined purposes and the rights of individuals to access and correct their personal data. But from our [Microsoft's] perspective, this is also an area, perhaps especially in the United States, where this new regulation might take one quick step and then we all can learn from experience before deciding whether additional steps should follow.

Reading between the lines, Smith seems to be saying that Microsoft's privacy position may be less aggressive than Europe's when it comes to using data beyond its initially defined purposes and to users' rights to access and correct their own data. Microsoft stands to gain a lot if its continually evolving products and services can share what they already know, and continuously learn, about users from their activity with products and from what intelligent cameras observe.

Image is everything

Microsoft CEO Satya Nadella has been driving the company forward with a mission of empathy, social and environmental responsibility, and inclusion for people with disabilities. I believe those efforts, along with the company's desire for universal standards for facial recognition tech, are genuine. Still, Microsoft is a business. Parallel to these noble motives is a desire to dominate the industry and shine on Wall Street.

Microsoft is pushing for government regulation of facial recognition tech through laws that require transparency, enable third-party testing and comparisons, ensure meaningful human review, prevent use for unlawful discrimination and protect people's privacy. As noble as this sounds, I believe Microsoft's motives are also rooted in a desire to ensure other companies don't damage public perception of the tech before Microsoft can establish itself as the market leader.

Jason L Ward is a Former Columnist at Windows Central. He provided a unique big picture analysis of the complex world of Microsoft. Jason takes the small clues and gives you an insightful big picture perspective through storytelling that you won't find *anywhere* else. Seriously, this dude thinks outside the box. Follow him on Twitter at @JLTechWord. He's doing the "write" thing!