Fake Gemini hands-on video shows Google's sleight of hand, not the future of AI

The Gemini hands-on video was about as real as a cinematic game trailer.

Google announced Gemini this week. Gemini will compete with ChatGPT and is a multimodal AI, meaning it can interact with text, images, audio, video, and code. Hype around Gemini was high after Google I/O, but we're now starting to get a glimpse behind the AI model's curtain, and it's not pretty.

While Gemini shows promise, we all need to temper expectations for the new tool because the hands-on video Google shared is fake. That may seem like a strong word, but Parmy Olson at Bloomberg showed how Google's video does not represent how Gemini will work in the real world.

Before I get into how Google made the video, I want to clarify that the clip is not entirely a fabrication. Google did use Gemini to identify objects and figure out what was going on in images. What Google didn't do, however, was create a genuine hands-on video that shows the actual experience you'll have when using Gemini.

When you see a hands-on video for a product, you expect content that reflects real-world usage. For example, if a YouTube reviewer did a hands-on with a new VR headset, you would want that video to show actual gameplay, what the field of vision looks like, and how well controls work. Similarly, a hands-on with a phone should show how the phone actually works, not a sped-up, clipped-together sequence.

You could argue that most, if not all, product demos are clipped together and don't show any flaws of the products they highlight. But as they say, two wrongs don't make a right.

How Google faked its Gemini hands-on

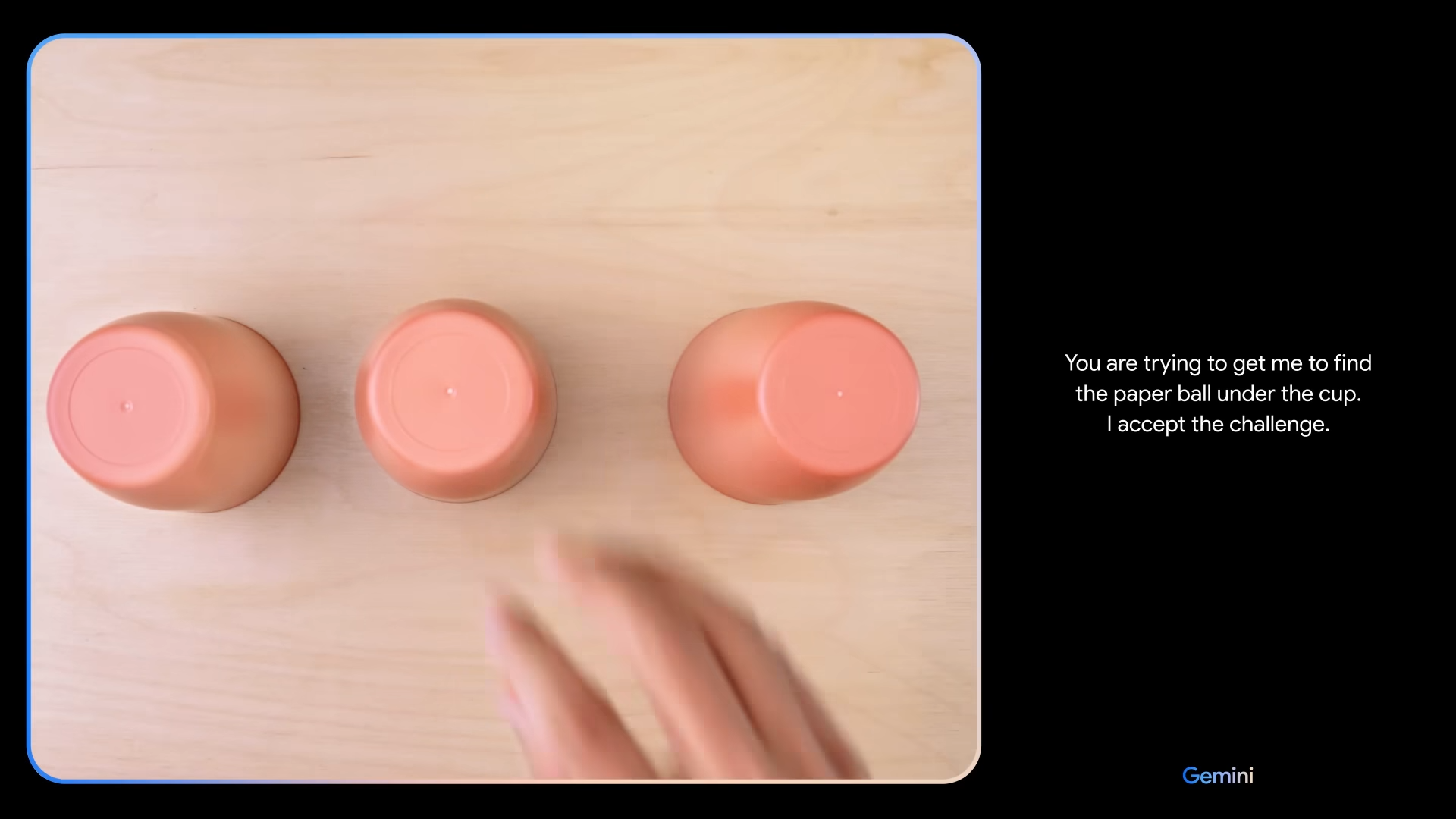

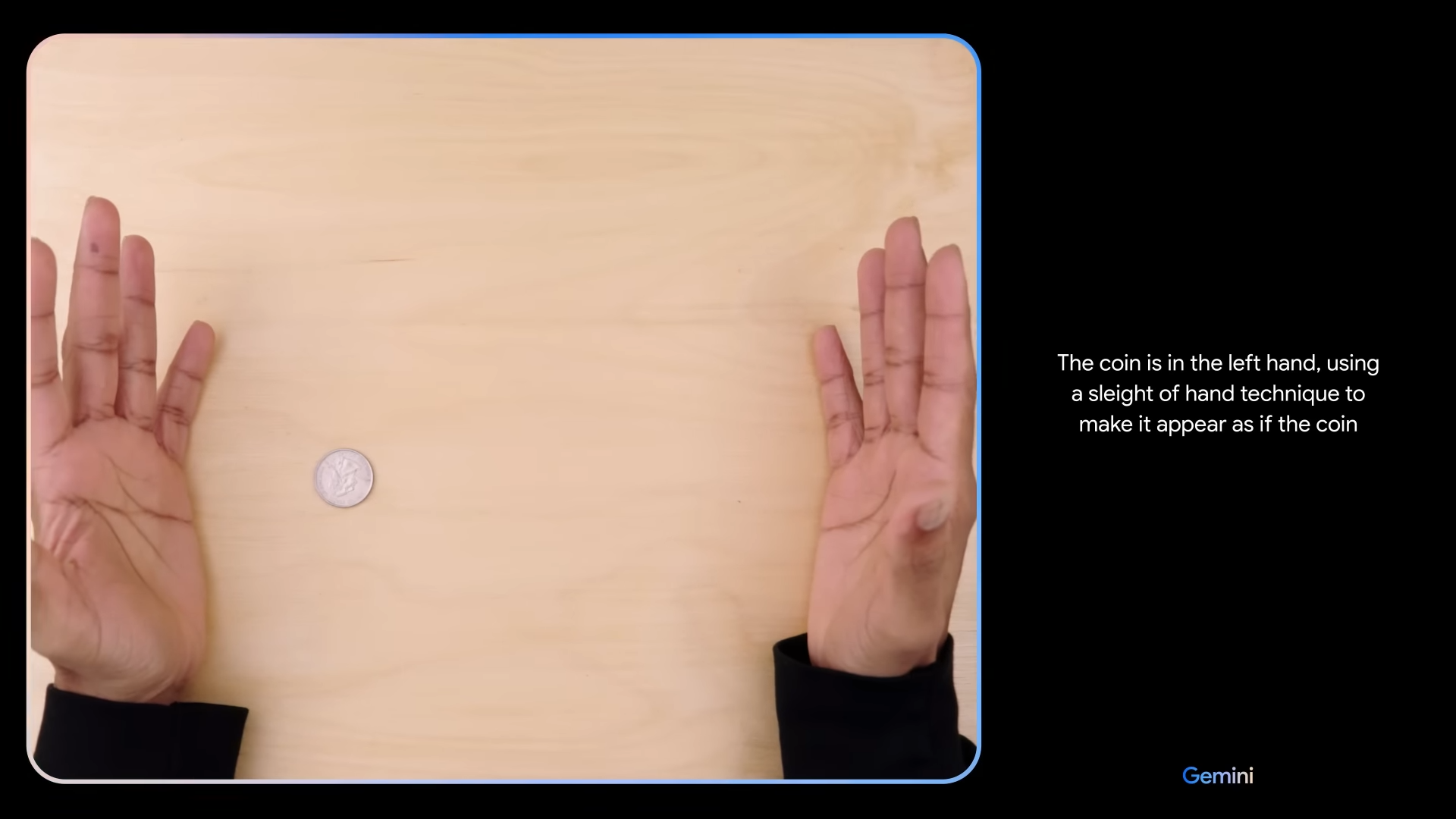

In the video, a user performs a variety of tasks, such as trying to hide a ball in a cup, drawing a duck, and playing a game with a map. Throughout the clip, Gemini appears to narrate what's going on in real time, figuring everything out on the fly. What you don't see in the video, however, is that Google used text prompts and provided context to make the Gemini demo.

Google gave prompts to Gemini based on still image frames from the captured content. The company then prompted the AI model with text. Narration was then added afterward.

In fact, the prompts shown in the video aren't even the ones given to Gemini. The video makes it seem like Gemini sees three cups placed on a table and immediately determines the user is trying to play a game. In actuality, Google trained Gemini how to play the game. It then tested Gemini on its ability to follow very specific instructions. Even in those circumstances, Gemini isn't perfect at the task.

"Of course, it won’t always get this challenge right. Sometimes the fake out move (where you swap two empty cups) seems to trip it up, but sometimes it gets that too. But simple prompts like this make it really fun to rapidly test Gemini," explained Google.

It's rather fitting that Google's hands-on video for Gemini used a trick known for sleight of hand.

Google defends its fake Gemini video

Really happy to see the interest around our “Hands-on with Gemini” video. In our developer blog yesterday, we broke down how Gemini was used to create it. https://t.co/50gjMkaVc0 We gave Gemini sequences of different modalities — image and text in this case — and had it respond… pic.twitter.com/Beba5M5dHP (December 7, 2023)

Google VP of Research and Deep Learning Lead Oriol Vinyals defended the video on X.

"All the user prompts and outputs in the video are real, shortened for brevity. The video illustrates what the multimodal user experiences built with Gemini **could look like**. We made it to inspire developers," said Vinyals (emphasis added).

I'm surprised I have to say this. What something "could look like" is not a hands-on video.

Google did link to a blog post in its video describing how the company created the content. That blog post doesn't hide the fact that Google used several prompts and cues to get Gemini to react the way it did. But a blog post link below the "...more" section of a video description is not the same as explaining what's going on in the video. It certainly doesn't correct the incorrect use of the phrase "hands-on."

We just need a bit more transparency

I understand why Google did what it did. Amazon tried to show off the Echo Show 8 earlier this year with a true live demo, and it did not go well. Calling the device by saying "hey Alexa" resulted in slow responses. Performance wasn't great either, putting the device in a poor light.

But I'd argue that if a true live demo of a product makes that product look bad, people should know that. If a game trailer looks amazing and the game is terrible, people would be upset about being misled. I don't see how Google's hands-on video is different.

Sean Endicott is a news writer and apps editor for Windows Central with 11+ years of experience. A Nottingham Trent journalism graduate, Sean has covered the industry’s arc from the Lumia era to the launch of Windows 11 and generative AI. Having started at Thrifter, he uses his expertise in price tracking to help readers find genuine hardware value.

Beyond tech news, Sean is a UK sports media pioneer. In 2017, he became one of the first to stream via smartphone and is an expert in AP Capture systems. A tech-forward coach, he was named 2024 BAFA Youth Coach of the Year. He is focused on using technology—from AI to Clipchamp—to gain a practical edge.