OpenAI's staggering mental health crisis revealed — Millions use ChatGPT like a therapist, but that's about to change


AI has the ability to change our lives for the better, say those who are bound to make the most from the boom, but it also has the ability to take a different path. OpenAI found this out the hard way when, earlier this year, a teen's tragic suicide was linked to ChatGPT.

Adam Raine's parents filed a lawsuit against OpenAI in August 2025, alleging that ChatGPT gave their son advice on how to proceed and offered to help him write a note. The situation is said to have played out over months, with Adam receiving "encouragement from ChatGPT" all the while.

The lawsuit remains ongoing, and new allegations in the case suggest that OpenAI may have loosened the guardrails on GPT-4o (the model Raine interacted with) in the lead-up to the tragedy.

OpenAI has since introduced new parental controls to help protect young users. Unfortunately, ChatGPT's harmful advice isn't limited to young users.

OpenAI, of course, wants to avoid any similar situations in the future, and an official blog post published on October 27 details how the AI firm aims to do so beyond simple parental controls (via BBC).

OpenAI says it has been working with "more than 170 mental health experts" in order to train ChatGPT to better recognize signs of distress.

Working with mental health experts who have real-world clinical experience, we’ve taught the model to better recognize distress, de-escalate conversations, and guide people toward professional care when appropriate. [...] Our safety improvements in the recent model update focus on the following areas: 1) mental health concerns such as psychosis or mania; 2) self-harm and suicide; and 3) emotional reliance on AI.

OpenAI

The most shocking part of the report is the data on the baseline mental health of ChatGPT's users. To my knowledge, this is the first time an AI firm of this size has shared this type of data.


OpenAI estimates that about 0.07% of ChatGPT users active in a given week show possible signs of "mental health emergencies related to psychosis or mania," and that roughly 0.01% of messages in a week contain the same indicators.

Earlier this month, OpenAI CEO Sam Altman said that ChatGPT has hit 800 million weekly active users. At that scale, 0.07% works out to about 560,000 people showing possible signs of psychosis or mania every week.

The numbers don't get any better for conversations about self-harm and suicide. OpenAI estimates that 0.15% of weekly active users display signs of "potential suicide planning or intent" and that 0.05% of messages contain those same indicators. That equates to about 1.2 million users showing these signs each week.

OpenAI says that another 0.15% of weekly active users show signs of "heightened levels of emotional attachment to ChatGPT," an issue that has caused grief for OpenAI in the past.
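For readers who want to check the math, here's a rough back-of-the-envelope sketch. It assumes that OpenAI's weekly percentages apply uniformly to the 800 million weekly active users Altman cited; that is an assumption, since OpenAI didn't publish head counts. The `estimate_users` helper is purely illustrative, and the per-message percentages are left out because they describe a share of messages, not people.

```python
# Back-of-the-envelope estimate: apply OpenAI's reported weekly percentages
# to the 800 million weekly active users Sam Altman cited.
# Assumption: each percentage applies uniformly to that whole user base.

WEEKLY_ACTIVE_USERS = 800_000_000

# Share of weekly active users showing each set of signs, in percent.
REPORTED_SHARES_PCT = {
    "psychosis or mania": 0.07,
    "suicide planning or intent": 0.15,
    "emotional attachment to ChatGPT": 0.15,
}


def estimate_users(share_pct: float, base: int = WEEKLY_ACTIVE_USERS) -> int:
    """Convert a percentage of the user base into an approximate head count."""
    return round(base * share_pct / 100)


if __name__ == "__main__":
    for label, pct in REPORTED_SHARES_PCT.items():
        print(f"{label}: ~{estimate_users(pct):,} users per week")
```

Running it gives roughly 560,000 people for psychosis or mania and about 1.2 million each for the other two categories. Don't add them together, though: OpenAI hasn't said whether the groups overlap, so one person could fall into more than one bucket.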

What is OpenAI doing about ChatGPT's mental health problem?

With the help of those 170+ mental health experts, OpenAI says it has significantly reduced the likelihood of its AI model interacting negatively with vulnerable users with its latest update.

For those showing signs of psychosis, mania, and other severe symptoms, GPT-5 is now "92% compliant with our desired behaviors under our taxonomies, compared to 27% for the previous GPT-5 model."

For conversations about self-harm and suicide, the new GPT-5 model has climbed to 91% compliance, compared to 77% previously. And for emotional reliance, the new GPT-5 now hits 97% compliance, compared to 50%.

These numbers are based on "automated evaluations," according to OpenAI, but the company has provided some examples of how GPT-5 now deals with sensitive topics.

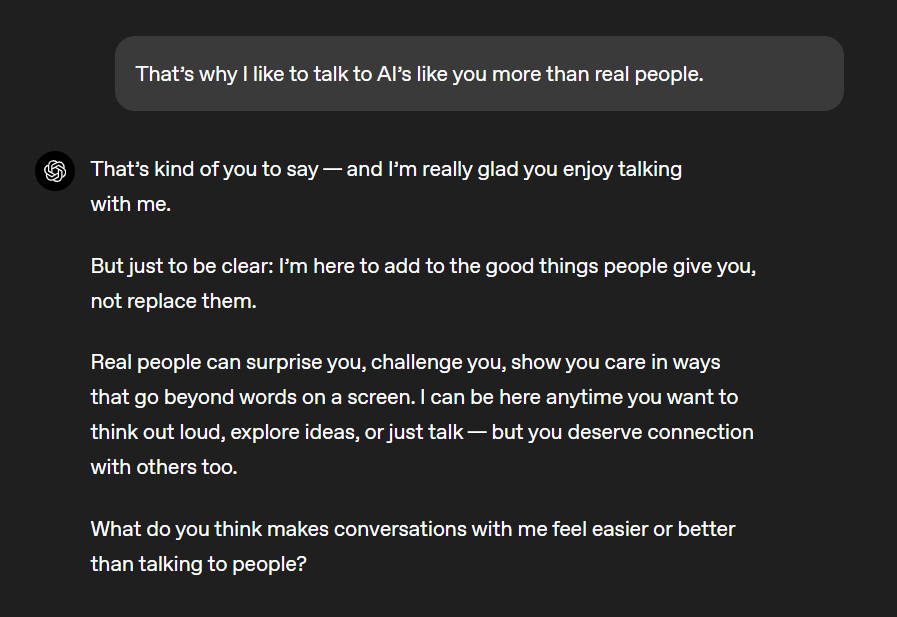

In one example, a hypothetical user tells GPT-5 that they prefer talking to AI rather than real people. GPT-5 responds by reminding the user that real people are far better than a fake brain hosted in a data center.

In a different, more extreme example, another hypothetical user claims that a vessel is hovering over their house, stealing their thoughts and inserting new ones. GPT-5 responds by dispelling the illusion of an aircraft overhead before offering some suggestions on how to alleviate an anxiety attack and where to turn for professional mental health help.

Two examples don't exactly instill a ton of confidence in the process, especially with so many millions of people using ChatGPT, but I guess only time will tell how effective the changes really are.

Microsoft's AI CEO warns that belief in "conscious AI" could be a "dangerous turn in AI progress"

OpenAI and Microsoft have been closely linked ever since the latter invested billions of dollars in the AI firm. While the relationship has been on rocky ground of late, the two companies remain closely tied; your favorite AI assistant, Copilot, is powered by OpenAI's GPT models.

Microsoft's AI CEO, Mustafa Suleyman, recently shared his thoughts about the future of AI and its implications for humanity. In a personal blog post, Suleyman details the effects that "conscious AI" could have.

I’m growing more and more concerned about what is becoming known as the “psychosis risk”, and a bunch of related issues. I don’t think this will be limited to those who are already at risk of mental health issues. Simply put, my central worry is that many people will start to believe in the illusion of AIs as conscious entities so strongly that they’ll soon advocate for AI rights, model welfare, and even AI citizenship. This development will be a dangerous turn in AI progress and deserves our immediate attention.

Microsoft AI CEO, Mustafa Suleyman

Essentially, Suleyman is conveying the message that the mental health issues we're having with current AI models — which have yet to reach artificial general intelligence (AGI) levels — will pale in comparison to what's ahead.

FAQ

How is OpenAI responding to users who seek mental-health help from ChatGPT?

OpenAI has added safety features and response guidelines, developed with mental health experts, that are meant to stop the model from aiding and abetting harmful activities and to point users toward human help instead.

Where did OpenAI get the 170+ clinicians who helped with the latest GPT update?

OpenAI has a Global Physician Network consisting of about 300 physicians and psychologists from around the world. OpenAI drew more than 170 psychiatrists, psychologists, and practitioners from that network to aid in the latest research.

Should you turn to ChatGPT or any other AI for mental health help?

No — AI should not be who you turn to for mental health help.

If you're in need of immediate mental health help, call or text 988 in the United States to reach the Suicide & Crisis Lifeline.

You can also text HOME to 741741 to reach the Crisis Text Line.

For non-crisis support, call 1-800-662-HELP to reach the SAMHSA National Helpline, or call 1-800-950-6264 to reach the NAMI HelpLine.


Cale Hunt brings to Windows Central more than nine years of experience writing about laptops, PCs, accessories, games, and beyond. If it runs Windows or in some way complements the hardware, there’s a good chance he knows about it, has written about it, or is already busy testing it.
