Everything you need to know about the GPU

Ever wondered just what makes up the graphics processing unit (GPU)? Be it an integrated or dedicated card, we run through exactly what it does for your PC.

All the latest news, reviews, and guides for Windows and Xbox diehards.

The graphics processing unit (or GPU for short) is responsible for handling everything that gets transferred from the PC internals to the connected display. Whether you happen to be gaming, editing video or simply staring at your desktop wallpaper, everything is being rendered by the GPU. In this handy guide we'll be looking at exactly what the GPU is, how it works and why you may want to purchase one of our picks for the best graphics card for gaming and intensive applications.

You don't actually need a dedicated card to supply content to a monitor. If you have a laptop, odds are good it has an integrated GPU, one that's built into the processor or its chipset. These smaller and less powerful solutions are perfectly adequate for the desktop environment, low-power devices and instances where it's simply not worth investing in a graphics card.

Unfortunately for laptop, tablet and certain PC owners, the option to upgrade their graphics processor may not be on the table. This can result in poor performance in games (and video editing, etc.) and require owners to turn down graphics quality settings to the absolute minimum in many cases. If you have a PC case, access to the insides and funds for a new card, you'll be able to take the gaming experience to the next level.

CPU vs GPU?

So why do we need a GPU if we already have a powerful central processing unit? Simply put, the GPU can handle far more calculations at once. This is why it's relied upon to power through a game engine and everything that comes with it, or an intensive application like a video editing suite. The massive number of cores on the GPU can work on many operations at the same time.

It's why Bitcoin miners rely on their trusty GPU for raw power (this is known as GPGPU, or general-purpose computing on graphics processing units). Both the CPU and GPU are silicon-based microprocessors, but they're fundamentally different and are deployed for different roles. But let's not shoot the CPU down too much. You won't be running Windows on a GPU any time soon. The CPU is the brains of any PC and handles a variety of complex tasks, something the GPU cannot perform as efficiently.

Think of the CPU and GPU as brain and brawn: the former can work on a multitude of different calculations, while the GPU is tasked by software to render graphics and focus all available cores on the specific task at hand. The graphics card is brought into play when you need a massive amount of power on a single (yet seriously complex, because graphics and geometry be complicated) task. All the polygons!
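To make the "brawn" idea a little more concrete, here's a rough sketch of why graphics work splits so well across many cores: each pixel can be processed without knowing anything about its neighbours. The `brighten` function, the chunk count and the fake pixel data are all made up for illustration, and Python threads are only standing in for GPU cores here (they won't actually make this faster).

```python
from concurrent.futures import ThreadPoolExecutor


def brighten(pixel, amount=40):
    """Brighten one 8-bit grayscale pixel, clamping at 255 (hypothetical example op)."""
    return min(pixel + amount, 255)


def brighten_chunk(chunk):
    """Apply the same operation to every pixel in one slice of the image."""
    return [brighten(p) for p in chunk]


def split(pixels, parts):
    """Cut the pixel list into roughly equal slices, one per worker."""
    size = (len(pixels) + parts - 1) // parts
    return [pixels[i:i + size] for i in range(0, len(pixels), size)]


# Made-up image data: 1,000 grayscale values between 0 and 255.
pixels = [(i * 7) % 256 for i in range(1000)]

# "CPU style": one worker walks through every pixel in order.
sequential = [brighten(p) for p in pixels]

# "GPU style": eight workers each take a slice; no slice depends on another.
with ThreadPoolExecutor(max_workers=8) as pool:
    chunks = list(pool.map(brighten_chunk, split(pixels, 8)))
parallel = [p for chunk in chunks for p in chunk]

print(sequential == parallel)
```

Both approaches produce the same image. The difference on real hardware is that a GPU has enough cores to work on thousands of those independent pixels in the same instant.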

The Players

Two big names dominate the GPU market: AMD and NVIDIA. AMD's graphics business was previously ATI, a company founded back in 1985 that launched the Radeon brand in 2000. NVIDIA released the GeForce 256, which it marketed as the first GPU, in 1999. AMD swooped in and bought ATI back in 2006 and now competes against NVIDIA and Intel on two different fronts. There's actually not that much that separates AMD and NVIDIA when it comes to the GPU, and the choice has mainly been down to personal preference.

NVIDIA has kicked things up a gear with the newly released GTX 10 series, but AMD offers affordable competitors and is expected to roll out its own high-end graphics solution sometime in the near future. The companies generally run on a parallel highway, both releasing their own solutions to tackle GPU and monitor synchronization, for example. Other parties are in play too, like Intel, which implements its own graphics solution on-chip, but you'll likely be purchasing an AMD or NVIDIA card.

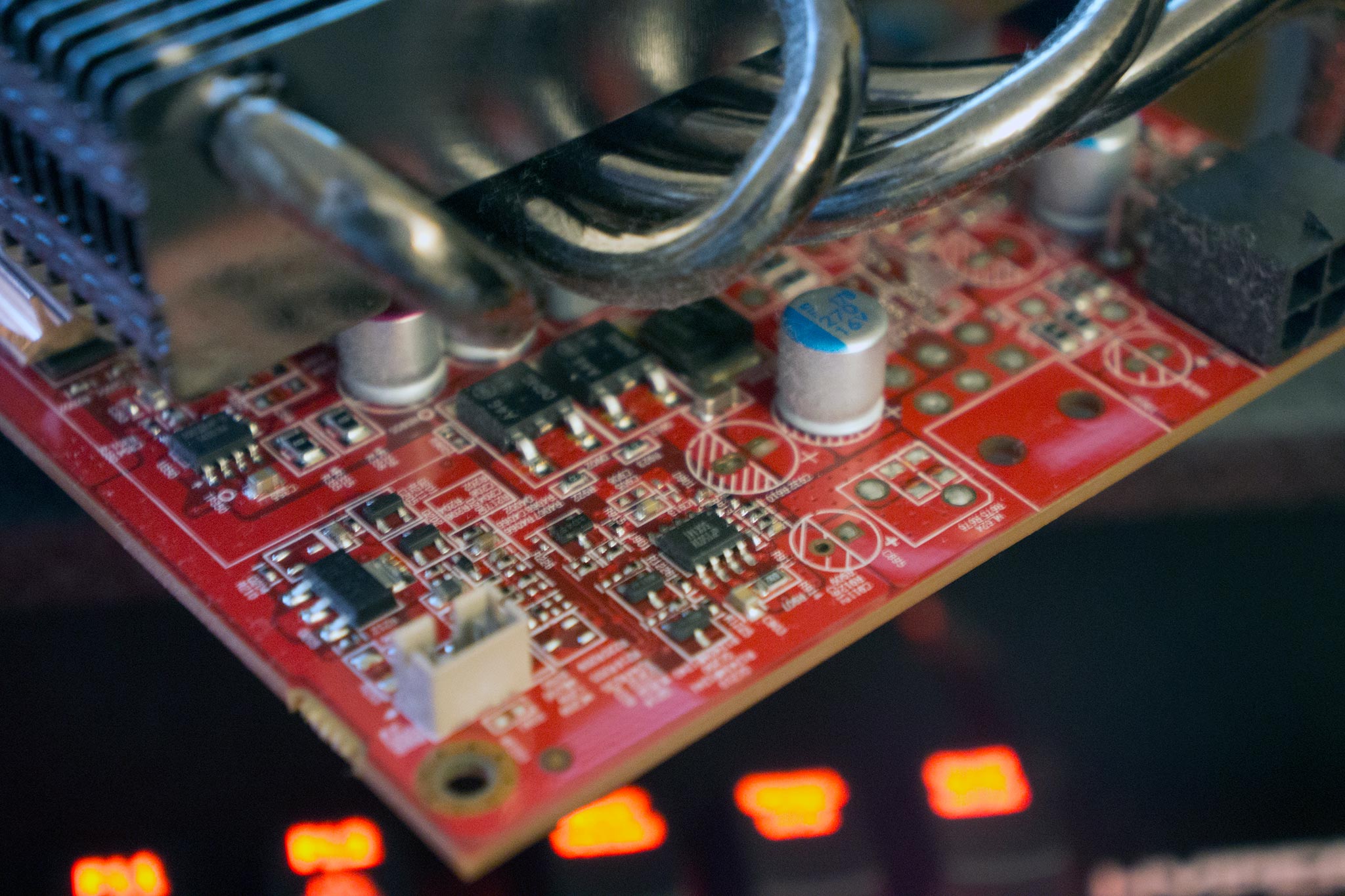

Inside the GPU

We've established the GPU as pretty much the most powerful component inside the PC, and a big part of its capability comes from the VRAM. These memory modules allow the unit to quickly store and retrieve data without having to route through the CPU to the RAM attached to the motherboard. The video RAM your graphics card utilizes is separate from the RAM your PC relies on.

They're related, but different beasts, and one doesn't depend on the other: a system that uses DDR4 memory will happily run a graphics card with GDDR5 VRAM. The VRAM on a graphics card is used for storing and accessing data quickly on the card, as well as buffering rendered frames for the monitor to display. The memory also helps with anti-aliasing, which reduces the effect of "jagged edges" on-screen by approximating data to make images appear smoother.

Bumping up the resolution of a display combats jagged edges by making the pixels more numerous and smaller, and thus harder for the human eye to tell apart (think Apple's "Retina") unless you look really closely. Displaying content like games at a higher resolution requires more power from the GPU since you're essentially asking the unit to pump out more data. And all this data requires cores or processors, and lots of them.
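For a rough feel of how quickly that data grows, the sketch below counts the pixels in one frame at a few common resolutions and estimates how much VRAM a single frame buffer would occupy. It assumes 4 bytes per pixel (32-bit colour), which is a simplification, and the function names are made up for this example. Real games hold far more than one frame's worth of data in VRAM.

```python
# Common display resolutions as (width, height) in pixels.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}

BYTES_PER_PIXEL = 4  # assumption: 32-bit colour, one byte each for R, G, B and alpha


def pixels_per_frame(width, height):
    """Number of pixels the GPU has to produce for a single frame."""
    return width * height


def framebuffer_mib(width, height, bytes_per_pixel=BYTES_PER_PIXEL):
    """Approximate size of one uncompressed frame buffer in MiB."""
    return width * height * bytes_per_pixel / (1024 * 1024)


base = pixels_per_frame(*RESOLUTIONS["1080p"])
for name, (w, h) in RESOLUTIONS.items():
    count = pixels_per_frame(w, h)
    print(f"{name}: {count:,} pixels, "
          f"{framebuffer_mib(w, h):.1f} MiB per frame, "
          f"{count / base:.2f}x the pixels of 1080p")
```

Moving from 1080p to 4K quadruples the number of pixels in every frame, which is why a card that's comfortable at 1080p can struggle at 4K.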

This is why modern graphics cards have hundreds and hundreds of these cores. The enormous core count is the main reason why the GPU is substantially more powerful than the CPU, with its limited number of cores, when it comes to texture mapping and pixel output. While the cores themselves aren't able to power through the variety of calculations a CPU has to perform every second, they're leaders in their graphics trade.

Things get hot!

Leveraging all of this raw computational power means there's a lot of electricity running through the GPU, and lots of juice means lots of heat. The amount of heat a graphics card (or processor for that matter) is designed to shed is expressed as its Thermal Design Power (or TDP for short), measured in watts. This isn't a direct measure of power consumption, so if you're looking at that shiny new GTX 1080 and spot its 180W TDP rating, that doesn't mean it will draw exactly 180W from your power supply.

You should care about this value simply because you need to know just how much cooling you're going to need in and around the card. Throwing a GPU with a higher TDP into a tight case with limited air flow may cause issues, especially if you're already rocking a powerful CPU and cooler that have pushed the case to its maximum. This is why you see massive fans on some GPUs, especially those that happen to be overclocked.

Speaking of which, yes, your GPU can even be overclocked, so long as it supports this feature, has enough cooling to handle the increased heat production and you have a stable system.

Some Jargon

Architecture: The platform (or technology) that the GPU is based upon. This is generally improved by companies over card generations. An example would be AMD's Polaris architecture.

Memory Bandwidth: This determines just how quickly the GPU can move data to and from the available VRAM. You could have all the GDDR5 memory in the world, but if the card doesn't have the bandwidth to effectively use it all, you'll have a bottleneck. It's calculated from the memory's data rate and the width of the memory interface (bus).

Texture Fillrate: This is determined by the core clock multiplied by the number of available texture mapping units (TMUs). It's the number of textured pixels (texels) the card can process per second.

Cores/Processors: The number of parallel cores (or processors) available on a card.

Core Clock: The GPU's equivalent of the CPU's clock speed. Generally, the higher this value, the faster a GPU will be able to operate. It's by no means a definitive comparison between cards, but is a solid indicator.

SLI/CrossFire: Need more power? Why not throw in two compatible GPUs and bridge them to render even more pixels? SLI and CrossFire are NVIDIA and AMD technologies that allow you to install more than one GPU card and have them work in tandem.
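Two of the terms above boil down to simple arithmetic, so here's a small sketch of how they're commonly worked out. The function names and the spec figures are made up for illustration (they don't describe any particular card), and the bandwidth formula assumes the memory speed is quoted as an effective data rate per pin.

```python
def memory_bandwidth_gb_s(data_rate_gbps, bus_width_bits):
    """Peak memory bandwidth in GB/s.

    data_rate_gbps: effective data rate per pin, in gigabits per second
    bus_width_bits: width of the memory interface, in bits
    Dividing by 8 converts bits to bytes.
    """
    return data_rate_gbps * bus_width_bits / 8


def texture_fillrate_gtexels(core_clock_mhz, tmus):
    """Peak texture fillrate in gigatexels per second: core clock x TMU count."""
    return core_clock_mhz * tmus / 1000


# Hypothetical card: 8 Gbps memory on a 256-bit bus, 1500 MHz core, 160 TMUs.
print(memory_bandwidth_gb_s(8, 256))        # 256.0 GB/s
print(texture_fillrate_gtexels(1500, 160))  # 240.0 GTexels/s
```

These are theoretical peaks, so treat them like the core clock: handy for comparing spec sheets, but no substitute for real benchmarks.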

That's the GPU in a nutshell, and we hope this small guide introduces you to the world of graphics processing. It's an important component that focuses on one kind of task and handles it at superb levels of efficiency to produce some wondrous views on-screen.

tl;dr

Graphics cards are best at solving graphics problems and other tasks the numerous cores are specifically designed for. This is why they're required for gaming and why more powerful cards let you game at higher fidelity and resolutions. They're more powerful than a CPU at this kind of parallel work, but can only really be used for specific applications.

Rich Edmonds was formerly a Senior Editor of PC hardware at Windows Central, covering everything related to PC components and NAS. He's been involved in technology for more than a decade and knows a thing or two about the magic inside a PC chassis. You can follow him on Twitter at @RichEdmonds.