ChatGPT-generated text may have met its match with a new tool that offers 99% accuracy in detection

A breakthrough ChatGPT AI Detector tool may be able to tell human text and AI-generated content apart.

All the latest news, reviews, and guides for Windows and Xbox diehards.

What you need to know

- A research team is on the verge of a breakthrough with an AI Detector tool that can identify ChatGPT-generated text, and it promises 99% accuracy.

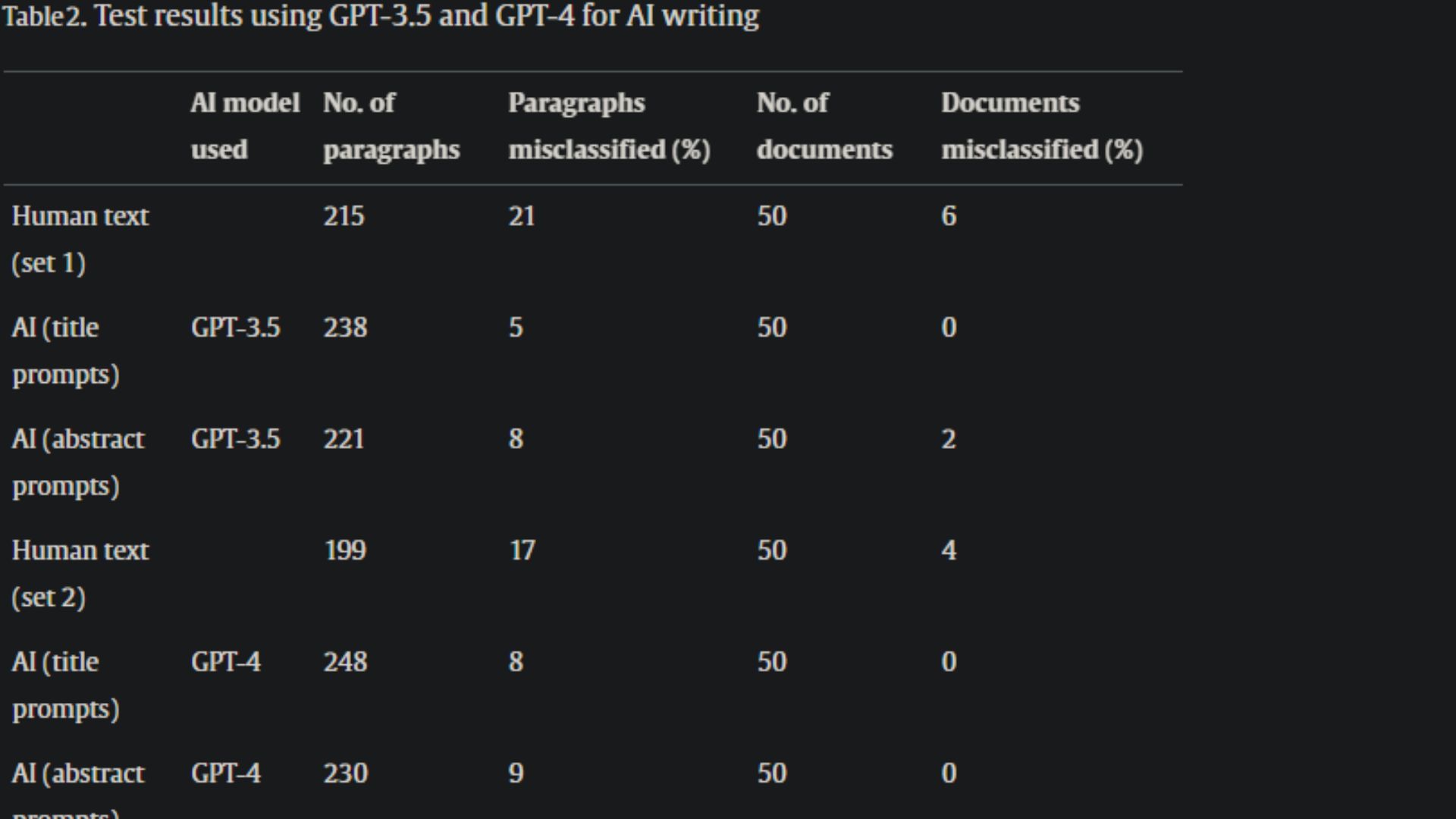

- The researchers put the tool through various tests, including using it to differentiate AI-generated text from human content.

- The results were impressive, but the tool misclassified a couple of documents as AI-generated when, in reality, they were written by humans.

- The researchers used XGBoost for all the experiments and tests, via the R package xgboost, and tuned the model using cross-validation on the training set.

The emergence of generative AI and, notably, ChatGPT has brought forth incredible opportunities, allowing users to explore untapped potential, but it also has its setbacks in equal measure. AI safety and privacy are some of the top concerns among users, preventing the technology from realizing its full potential. However, President Biden recently issued an Executive Order addressing some of these concerns.

Authenticity and accuracy are also part of these concerns. If the recent reports citing that ChatGPT is getting dumber and losing accuracy are anything to go by, then these concerns are valid. Up until now, there has been no foolproof way to detect AI-generated text with high accuracy.

Luckily, a group of researchers is on the verge of a breakthrough, seemingly making it easier to identify AI-generated text. With significant updates shipping to AI-powered chatbots like Bing Chat and ChatGPT every so often, it's getting harder to distinguish text generated using these tools from human text.

Per the report, the AI detector is designed to detect AI-generated text in scientific journals. Running tests on these kinds of journals is a serious undertaking, depending on the complexity of the topic and the availability of the information on the web.

According to the researchers, the AI detector was used to help distinguish human writers from ChatGPT (GPT-3.5). They further disclosed that the methodology depended on 20 features and a machine learning algorithm, which didn't feature a "perplexity" measure. This is because they classified it as a "problematic metric," which would introduce bias toward non-native English speakers in other tools.

Furthermore, the model demonstrates 99% accuracy on differentiating human writing from texts produced by ChatGPT, and it significantly outperforms the GPT-2 Output Detector, AI detection software that was offered by OpenAI, makers of ChatGPT.

AI Detector Researchers

We recently learned that OpenAI was working on a new tool to help users identify AI-generated images. The company promised 99 percent accuracy, though it's not yet clear when it will ship to broad availability.

The researchers admit that the scope of the original work was limited, as they only tested one type of prompt from one journal. Moreover, it wasn't a Chemistry journal exclusively, not to mention that only one language model was tested.

According to the researchers:

"...we radically expand the applicable scope of our recently described AI detector by applying it to new circumstances with variability in human writing, including from 13 different journals and 3 different publishers, variability in AI prompts, and variability in the AI text generating model used. Using the same 20 features as described previously, we train an XGBoost classifier with example human texts and comparator AI text. Then we assess the model by using new examples of human writing, multiple challenging AI prompts, and both GPT-3.5 and GPT-4 to produce the AI text."

The AI Detector promises 99% accuracy

The researchers indicated that the tests demonstrated that their methodology was straightforward and effective. They highlighted that the tool showcased 98%–100% accuracy when identifying AI-generated text, but this depended on the prompt and model.

The researchers indicated that the tool is miles ahead of OpenAI's updated classifier, whose accuracy ranges between 10% and 56%. The researchers further disclosed that the goal behind this study and invention is to provide the scientific community with an avenue that allows them to assess "the infiltration of ChatGPT into chemistry journals, identify the consequences of its use, and rapidly introduce mitigation strategies when problems arise."

What did the researchers use as the classifier for tests?

The researchers used XGBoost for all the experiments and tests, via the R package xgboost. To optimize the model, they measured paragraph-level accuracy using leave-one-introduction-out cross-validation on the training set.

The research team stated that:

"In this paradigm, all of the training data, except for that originating from the paragraphs of the introduction to be classified, would be leveraged to build a classification model. This model would then be used to classify all of the paragraphs from the left-out introduction."

The study highlights that these conditions "produced the best overall paragraph-level accuracy," which was then used across all tests.
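To make the leave-one-introduction-out idea concrete, here is a minimal sketch in plain Python. It is not the researchers' code: they used XGBoost through the R package xgboost with 20 features, while this sketch swaps in a simple nearest-centroid classifier and two made-up numeric features so that it runs with the standard library alone. The function names, feature values, and introduction IDs are all hypothetical.

```python
from collections import defaultdict

def centroid(rows):
    """Mean feature vector of a list of equally sized feature rows."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]

def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def fit(X, y):
    """Stand-in classifier (NOT XGBoost): one centroid per label."""
    by_label = defaultdict(list)
    for features, label in zip(X, y):
        by_label[label].append(features)
    return {label: centroid(rows) for label, rows in by_label.items()}

def predict(model, features):
    """Return the label whose centroid is closest to the paragraph."""
    return min(model, key=lambda label: sq_dist(model[label], features))

def leave_one_group_out_accuracy(X, y, groups):
    """Paragraph-level accuracy where each introduction is held out in turn."""
    correct = 0
    for held_out in sorted(set(groups)):
        train_idx = [i for i, g in enumerate(groups) if g != held_out]
        test_idx = [i for i, g in enumerate(groups) if g == held_out]
        # Train on every paragraph except those from the held-out introduction.
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        # Classify all paragraphs of the held-out introduction.
        correct += sum(predict(model, X[i]) == y[i] for i in test_idx)
    return correct / len(X)

# Hypothetical toy data: two invented features per paragraph.
X = [[12.0, 0.9], [11.0, 0.8], [13.0, 1.0], [12.5, 0.7],
     [5.0, 0.2], [6.0, 0.1], [5.5, 0.3], [4.5, 0.2]]
y = ["human"] * 4 + ["ai"] * 4
# Each paragraph is tagged with the introduction it came from.
groups = ["intro_a", "intro_a", "intro_b", "intro_b",
          "intro_c", "intro_c", "intro_d", "intro_d"]

accuracy = leave_one_group_out_accuracy(X, y, groups)
print(f"paragraph-level accuracy: {accuracy:.2f}")
```

The point of grouping by introduction is that paragraphs from the same document never appear in both the training and test sets, so the score is less likely to be inflated by the model recognizing a single document's style.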

Are the results promising?

Admittedly, the AI Detector posted impressive results, but there's room for improvement. The technology is relatively new, so some margin of error was expected. But still, misclassifying 6% of the human text tested raises eyebrows.

Reddit users in the r/science subreddit raise valid concerns about this issue. A concerned user presented a hypothetical situation where this tool was used in school, stating that the results indicate that out of every 100 students, 6 of them would be falsely accused of leveraging AI-powered tools to do their coursework. And we all know the significant setbacks that come with submitting work that you lifted from somewhere else.
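The Reddit user's arithmetic can be sketched in a few lines of Python. The 6% figure is the misclassification rate for human text quoted above. The class mix in the second calculation (90 human-written and 10 AI-written submissions) and the 99% detection rate applied to it are assumptions chosen purely for illustration, and the function names are hypothetical.

```python
def expected_false_flags(human_documents, false_positive_rate):
    """Expected number of human-written documents wrongly flagged as AI."""
    return human_documents * false_positive_rate

def share_of_flags_that_are_wrong(n_human, n_ai, false_positive_rate,
                                  true_positive_rate):
    """Fraction of all flagged documents that were actually human-written."""
    false_flags = n_human * false_positive_rate
    true_flags = n_ai * true_positive_rate
    return false_flags / (false_flags + true_flags)

# The scenario from the Reddit thread: 100 students who all wrote their own work.
false_flags = expected_false_flags(100, 0.06)
print(f"expected false accusations: {false_flags:.0f}")

# Hypothetical class mix: 90 honest students, 10 who used AI,
# with an assumed 99% of the AI-written work being caught.
wrong_share = share_of_flags_that_are_wrong(90, 10, 0.06, 0.99)
print(f"share of flags that are wrong: {wrong_share:.0%}")
```

Under these assumed numbers, a sizable share of flagged work would belong to students who did nothing wrong, which is why the false positive rate matters more in a classroom than the headline accuracy figure.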

This shows the importance of having dependable and accurate tools such as AI Detector, but at the same time, it's nearly impossible to verify the accuracy of these tools. Another user presented a case where a course facilitator had flagged an assignment as AI-generated. The student shared this study with the teacher and even ran the course syllabus through the tool, which flagged the syllabus as AI-generated content too.

While this was a really close shave, what happens when you can't back up your claims? Do you think it will get to a point where AI detectors can promise absolute accuracy? Please share your thoughts with us in the comments.

Kevin Okemwa is a seasoned tech journalist based in Nairobi, Kenya with lots of experience covering the latest trends and developments in the industry at Windows Central. With a passion for innovation and a keen eye for detail, he has written for leading publications such as OnMSFT, MakeUseOf, and Windows Report, providing insightful analysis and breaking news on everything revolving around the Microsoft ecosystem. While AFK and not busy following the ever-emerging trends in tech, you can find him exploring the world or listening to music.