How to overclock your PC monitor — why and what that means

Did you know you can overclock your PC monitor? No? Well, you can! Here's how — and why.

All the latest news, reviews, and guides for Windows and Xbox diehards.

Overclocking your monitor serves essentially the same purpose as overclocking your processor: getting a little more performance. It involves increasing the refresh rate beyond the stock rating, so the monitor can draw more frames on the screen per second.

For most people most of the time it's not exactly an essential thing to do, but that doesn't mean it's not a neat trick or that you shouldn't do it. Here's what you need to know.

What is refresh rate?

By definition, just this:

The number of times per second that an image displayed on a screen needs to be regenerated to prevent flicker when viewed by the human eye.

So simply put, a 60Hz monitor will refresh the image 60 times every second, a 75Hz monitor will do it 75 times a second and a 144Hz monitor will refresh 144 times per second. Generally speaking, especially in computing and PC gaming, higher is better. Motion starts to look continuous at around 24fps, the traditional frame rate for film, but we're capable of perceiving far greater refresh rates than that.
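To put numbers on that, here's a minimal Python sketch that turns a refresh rate into the time each refresh stays on screen. The function name `frame_time_ms` is made up for illustration; the arithmetic is simply one second divided by the refresh rate.

```python
def frame_time_ms(refresh_hz: float) -> float:
    """Return how long one refresh lasts, in milliseconds."""
    return 1000.0 / refresh_hz


# The three refresh rates mentioned in the text.
for hz in (60, 75, 144):
    print(f"{hz}Hz -> {frame_time_ms(hz):.2f} ms per refresh")
```

Going from 60Hz to 75Hz shaves roughly 3.3 ms off each refresh, which is the "little more performance" an overclock is chasing.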

Can any monitor do it?

Potentially. Whether you can increase the refresh rate or not depends on your specific panel. Even in identical monitors, the display panels within them are not all created equal. You may read stories of other people getting significant increases on the same model you own, but your own unit might not be so lucky.

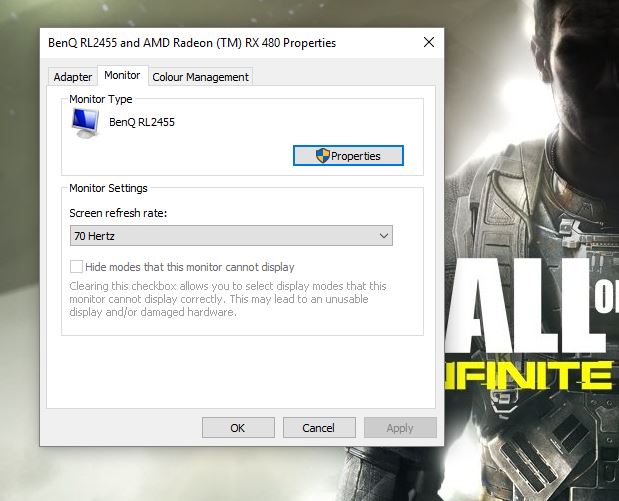

For example, I use a BenQ RL2455HM and there are many successful reports of folks overclocking from 60Hz to 75Hz. My own monitor can only go up to 70Hz.

Update: As pointed out in the comments, take every step to check your panel specs first. Not all panels are created equal, and some manufacturers may already have applied a factory overclock. In that case, the risks are much higher if you attempt to push the limits even further.

It's very much one of those "your mileage may vary" situations.

How to overclock your monitor

It's actually a very straightforward process. You can either use a third-party tool called CRU, or attempt to use software from AMD, NVIDIA or Intel. All are free, so we'll look at each.

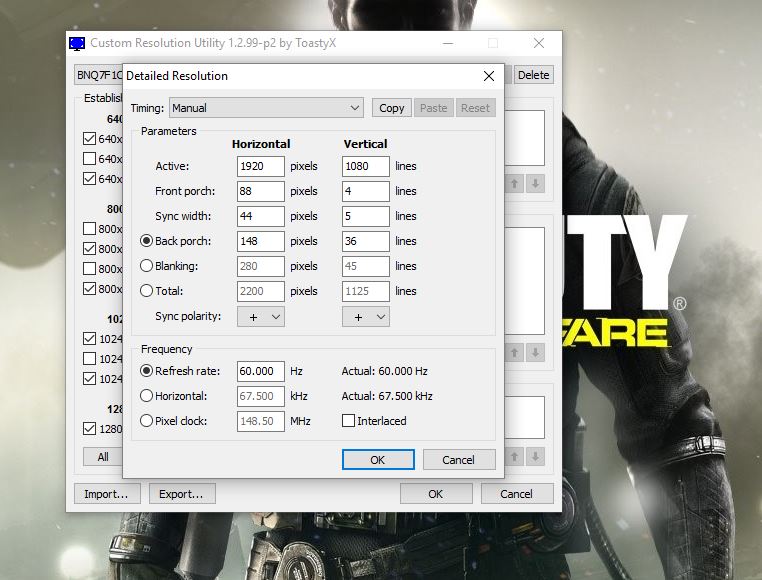

CRU - Custom Resolution Utility

This is one of the older methods, and may not be compatible with all GPUs or integrated Intel graphics. It does, however, seem to work very well with AMD graphics. You can download it here and once installed it's a straightforward process to change your refresh rate.

- Open CRU

- You'll see two boxes, one for detailed resolutions and one for standard resolutions.

- Under detailed resolutions click add.

- Click on timing and change it to LCD standard.

- Change the refresh rate to something above the standard value. A good start is an increment of 5Hz.

- Click OK.

- Reboot your PC.

Next you'll need to change the refresh rate in Windows 10, steps which apply to any other method of overclocking.

- Right click on the desktop and select display settings.

- Click on advanced display settings.

- Scroll down and select display adapter properties.

- On the monitor tab select the desired refresh rate from the drop down box.

If it worked, the monitor will keep displaying an image at the new rate. If you went too high, the screen will go black and revert to the old settings after 15 seconds.
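The trial-and-error routine here (go up 5Hz, see if the screen stays on, repeat) can be sketched in a few lines of Python. Everything in this block is hypothetical: `find_highest_stable`, the `works` callback and the `pretend_panel` stand-in are illustrative names, and in real life "works" is you looking at the monitor, not a function call.

```python
from typing import Callable


def find_highest_stable(
    stock_hz: int,
    works: Callable[[int], bool],
    step_hz: int = 5,
    ceiling_hz: int = 120,
) -> int:
    """Step the refresh rate up and return the last value that worked."""
    best = stock_hz
    candidate = stock_hz + step_hz
    # Stop at the first rate that fails, or at the self-imposed ceiling.
    while candidate <= ceiling_hz and works(candidate):
        best = candidate
        candidate += step_hz
    return best


def pretend_panel(hz: int) -> bool:
    """Stand-in for 'did the screen stay on?'; this made-up panel tops out at 70Hz."""
    return hz <= 70


print(find_highest_stable(60, pretend_panel))  # prints 70
```

The ceiling is an assumption added for safety so the loop never runs away. Once you've found the first failing rate, you could drop to a smaller step to close in on the exact limit.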

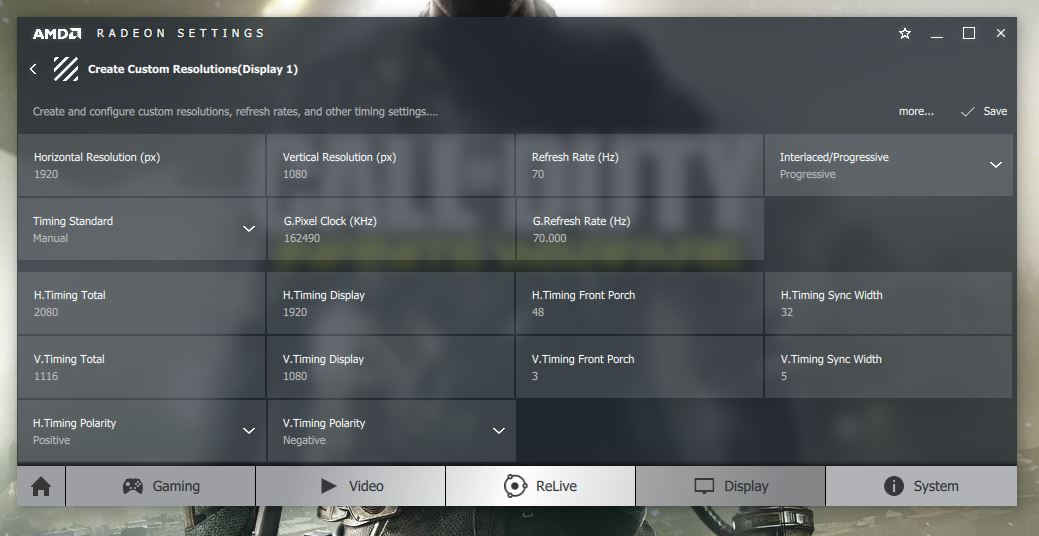

Using AMD Radeon settings

If you're using an AMD GPU you can achieve similar results using the AMD Radeon Settings application. Right click its icon in your taskbar and follow these steps.

- Right-click on the desktop and select AMD Radeon settings.

- Click on the display tab.

- Next to custom resolutions, click create.

- Change the refresh rate to your desired level.

- Click save.

Reboot, then use the Windows 10 steps above to switch to your custom refresh rate.

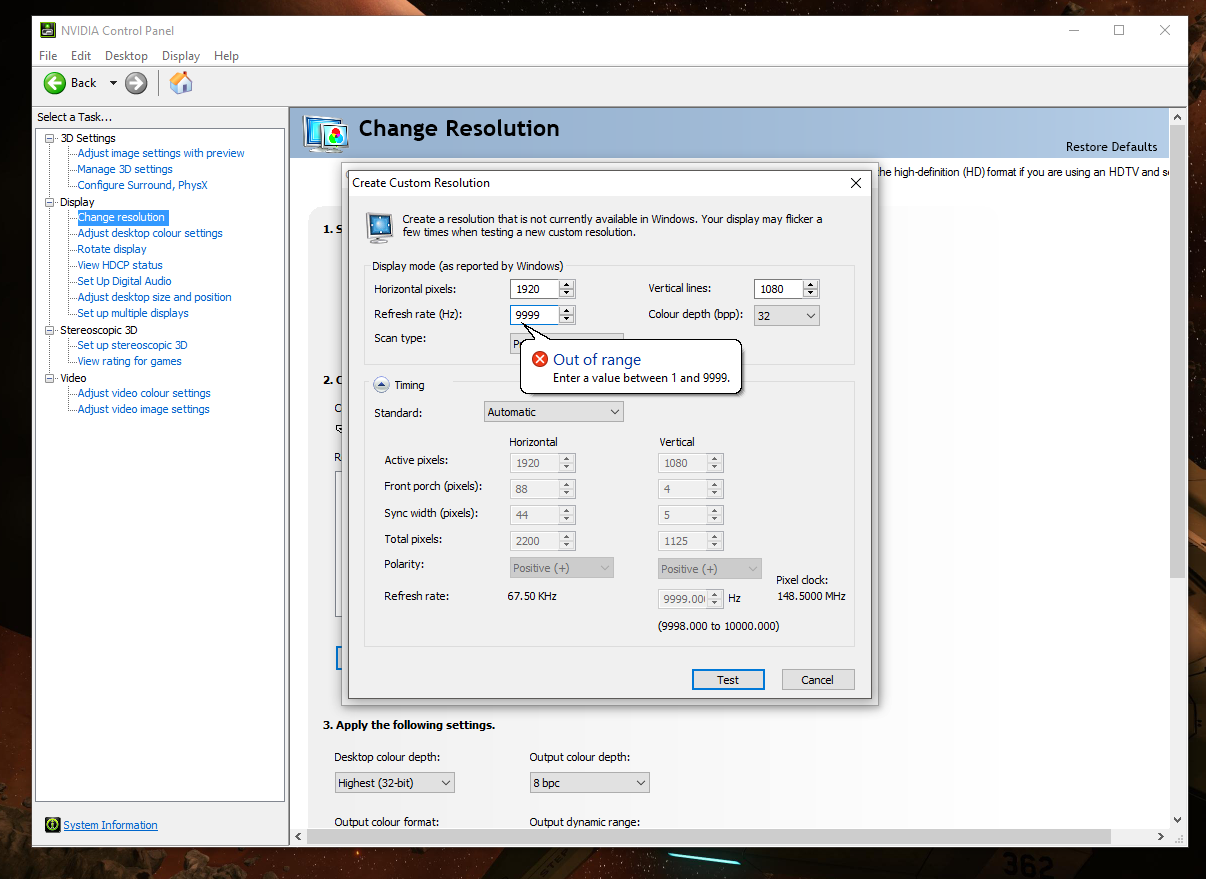

Using NVIDIA control panel

The steps for NVIDIA users are much the same as for AMD, with the main difference being that NVIDIA's controls look a bit more utilitarian!

- Right-click on the desktop and select NVIDIA control panel.

- Expand the display menu.

- Click change resolution and then create custom resolution.

With the NVIDIA control panel you can test your created settings before applying them. Once you're happy, reboot and follow the Windows 10 steps above to select the new refresh rate.

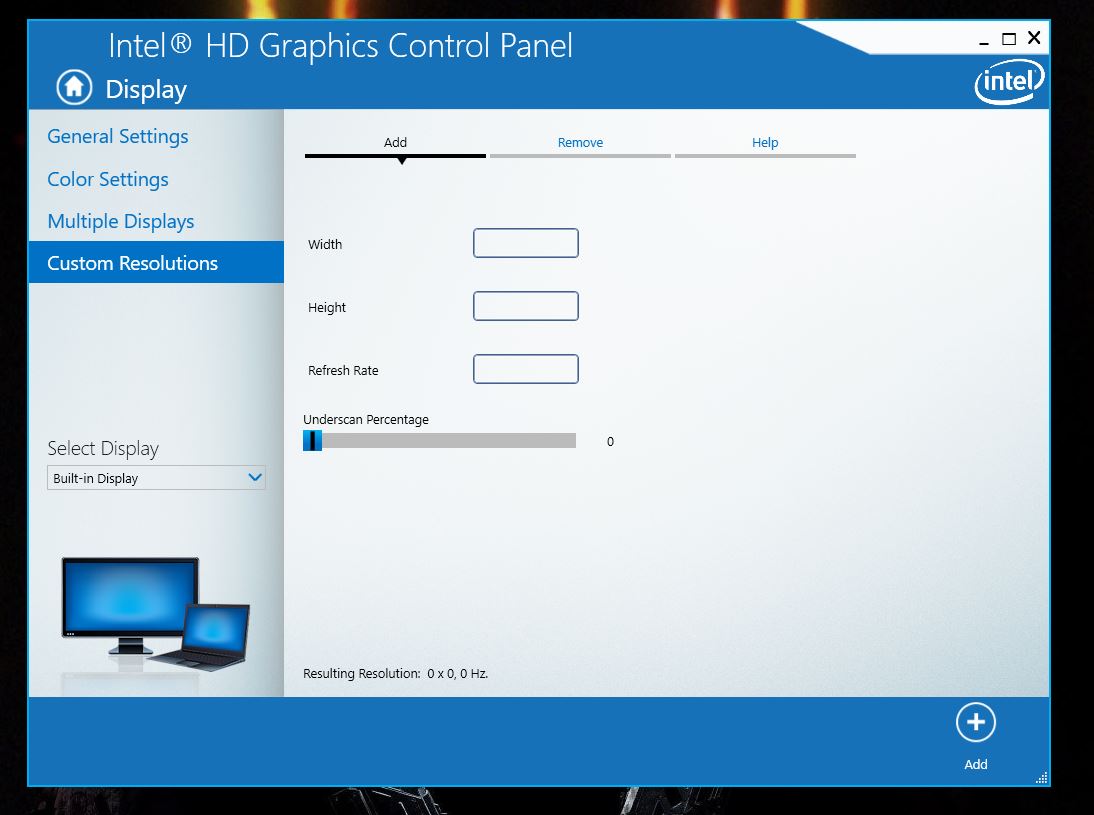

Using Intel graphics

Intel's own graphics control panel will also let you create custom resolutions and refresh rates fairly easily.

- Open Intel HD graphics control panel.

- Select display.

- On the left click on custom resolutions.

- Enter your width, height and desired refresh rate.

- Click add.

If your display can't go any higher, you'll be prompted and will have to either quit or try again. If you get a successful lock-in, reboot and hit the steps above to make sure you've got the new one selected.

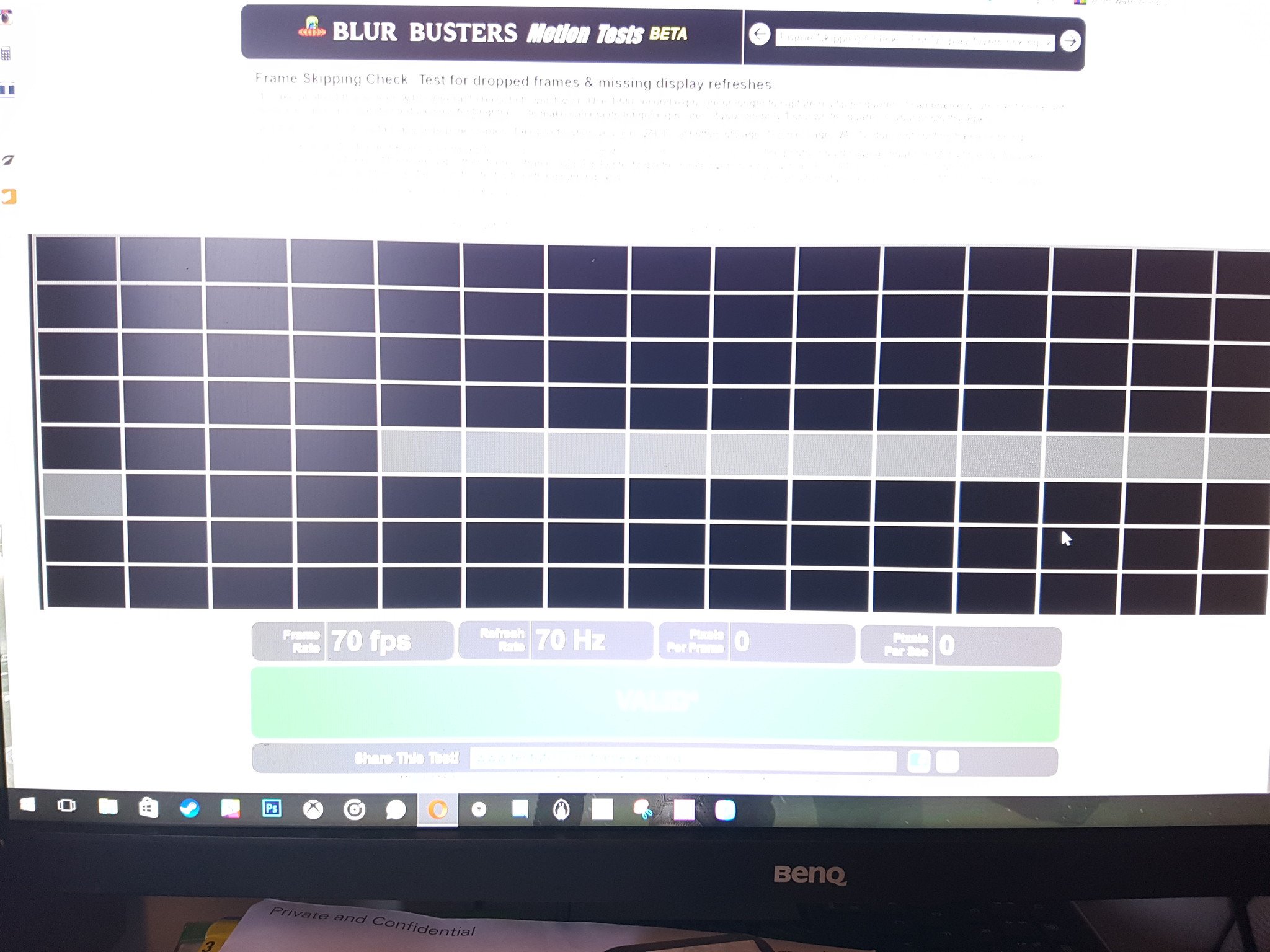

How to verify your overclock

To test and make sure that your new refresh rate is working as it's supposed to, there's a great online test that you can run. Visit http://www.testufo.com/#test=frameskipping in your browser and follow the steps on screen. It will recognize the refresh rate you've got selected for your monitor at that time.

What you basically do is take a photo of the moving graphic with a slower shutter speed, and if everything is working as it should you'll get a (poor quality) photo like the one above. If the shaded boxes are in a line and unbroken, then you've been successful. If the boxes are separated, then you're getting skipped frames.
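As a rough guide to what the photo should contain, and assuming the test lights up one box per refresh, the number of shaded boxes you capture is about the refresh rate multiplied by the exposure time. A small Python sketch of that arithmetic follows; `boxes_in_photo` is a hypothetical helper, not part of the test site.

```python
def boxes_in_photo(refresh_hz: float, exposure_s: float) -> float:
    """Estimate how many boxes one exposure captures (one box per refresh assumed)."""
    return refresh_hz * exposure_s


# Example: a 1/10 second exposure at stock and overclocked rates.
for hz in (60, 75):
    print(f"{hz}Hz, 1/10s exposure -> about {boxes_in_photo(hz, 1 / 10):.1f} boxes")
```

If your photo shows clearly fewer boxes than this estimate, or gaps between them, that points to skipped frames rather than a genuine overclock.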

Not bulletproof and at your own risk

Just like overclocking a processor, all of this is done at your own risk. You should be able to experiment without blowing up your PC monitor, but there are never any guarantees, so be careful with your gear. Also bear in mind that the connection between your PC and your monitor can have an effect, as can the resolution. You might be able to overclock at 720p, for example, but not at 1080p.
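One way to see why the connection and resolution matter is the pixel clock, which is the total number of pixels per frame (including blanking) multiplied by the refresh rate. The Python sketch below is illustrative only and rests on a few assumptions: it uses the common 60Hz timing totals for 720p (1650 x 750) and 1080p (2200 x 1125), keeps those totals unchanged when the refresh rate goes up, and compares against the nominal 165 MHz ceiling of single-link DVI. Your monitor, cable and driver may use different timings and limits.

```python
def pixel_clock_mhz(h_total: int, v_total: int, refresh_hz: float) -> float:
    """Pixel clock in MHz for a given total frame size and refresh rate."""
    return h_total * v_total * refresh_hz / 1_000_000


# Assumed frame totals (visible area plus blanking) for two common modes.
TIMINGS = {"720p": (1650, 750), "1080p": (2200, 1125)}

# Assumed link limit: the nominal single-link DVI pixel clock ceiling.
LINK_LIMIT_MHZ = 165.0

for name, (h_total, v_total) in TIMINGS.items():
    for hz in (60, 75):
        clock = pixel_clock_mhz(h_total, v_total, hz)
        verdict = "within" if clock <= LINK_LIMIT_MHZ else "over"
        print(f"{name} at {hz}Hz: {clock:.2f} MHz ({verdict} the assumed limit)")
```

Under these assumptions 720p at 75Hz stays comfortably under the limit while 1080p at 75Hz goes over it, which lines up with the 720p versus 1080p example in the text. Timings with less blanking would bring the 1080p figure down.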

And as already mentioned, none of this is an exact science and your mileage may vary. It's hard to say we'd recommend this as an essential thing to try, but if you like to tinker, there's nothing stopping you.

If you're an old hand at doing this, be sure to drop any tips or tricks into the comments below!

Richard Devine is the Managing Editor at Windows Central with over a decade of experience. A former Project Manager and long-term tech addict, he joined Mobile Nations in 2011 and has been found in the past on Android Central as well as Windows Central. Currently, you'll find him steering the site's coverage of all manner of PC hardware and reviews. Find him on Mastodon at mstdn.social/@richdevine