What is TDP, and how does it affect your PC's performance?

TDP has everything to do with heat and little to do with power.

If you're shopping for parts to build a PC, or looking to upgrade a specific component, you have probably come across a term on your travels: TDP.

But what exactly is TDP, and why should you even care about the value provided by a manufacturer? We break everything down for you.

So, what is TDP?

TDP stands for Thermal Design Power and describes the amount of heat a component is expected to output when under load. For example, a CPU with a TDP of 90W is expected to output 90W worth of heat when in use. This can cause confusion when shopping around for new hardware, as some buyers read the TDP value as the component's power consumption and design a PC build around that figure. That isn't entirely accurate, but it isn't completely wrong either.

Our 90W TDP CPU example doesn't mean the processor will need 90W of power from the power supply, even though thermal design power is actually measured in watts. Instead of showcasing what the component will require as raw input, manufacturers use TDP as a nominal value for cooling systems to be designed around. It's also extremely rare you will ever hit the TDP of a CPU or GPU unless you rely on intensive applications and processes.

The higher the TDP, the more cooling will be required, be it in passive technologies, fan-based coolers, or liquid platforms. You'll not be able to keep a 220W AMD FX-9590 chilled with a laptop CPU cooler, for example.
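
The cooler-matching idea above can be sketched as a simple comparison. This is only an illustration: the function name `cooler_is_adequate`, the 20% headroom default, and the 65W laptop-cooler rating are assumptions for the example, not figures from any manufacturer.

```python
def cooler_is_adequate(component_tdp_w, cooler_rating_w, headroom=0.2):
    """Return True if the cooler's rated capacity covers the TDP plus headroom.

    headroom is an assumed safety margin (0.2 means 20% above the TDP).
    """
    if component_tdp_w <= 0 or cooler_rating_w <= 0:
        raise ValueError("wattages must be positive")
    required_w = component_tdp_w * (1 + headroom)
    return cooler_rating_w >= required_w


# A 220W AMD FX-9590 against a hypothetical 65W-rated laptop cooler
print(cooler_is_adequate(220, 65))

# A 90W CPU against a hypothetical 150W-rated tower cooler
print(cooler_is_adequate(90, 150))
```

The first check fails and the second passes, which mirrors the point that a higher TDP calls for a cooler rated to handle more heat.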

Is TDP the same as power draw?

Not quite, no. TDP doesn't tell you exactly how much power a component will draw, but that doesn't mean you can't use the value as an estimate. The figure is expressed in watts, so it can prove useful when working out how much power your system will need. Generally, a component with a lower TDP will draw less electricity from your power supply.

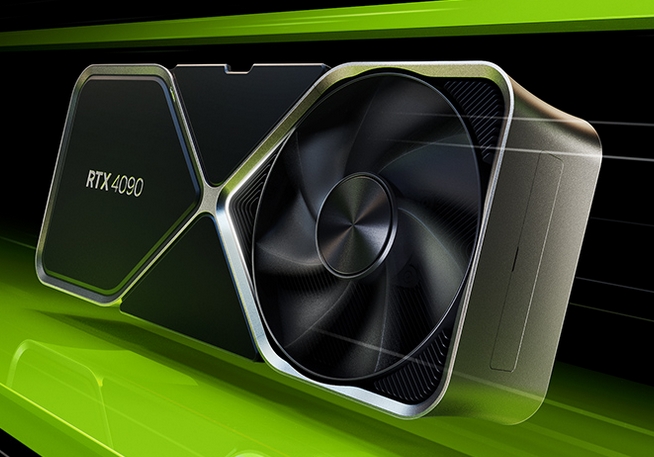

The TDP values listed by manufacturers can vary as well, since each one arrives at the figure through its own testing. So while TDP may not exactly reflect how much power a part will draw in a system, it does provide solid grounds for designing a cooling system, as well as a rough idea of how much output a power supply (PSU) will need to have. To be safe, we usually recommend a quality brand PSU of 500W for a PC with a single GPU.
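
As a rough illustration of using TDP to size a PSU, the sketch below adds up component TDPs, adds an allowance for the rest of the system, and rounds up so the PSU is not running at its limit. The function name `estimate_psu_watts`, the 100W allowance for other parts, and the 80% target load are all assumptions made for this example, not a manufacturer formula.

```python
def estimate_psu_watts(component_tdps_w, other_parts_w=100,
                       target_load_pct=80, step_w=50):
    """Estimate a PSU size in watts from whole-number component TDPs.

    other_parts_w: assumed allowance for motherboard, drives, fans, and RAM.
    target_load_pct: assumed share of the PSU's capacity to use under load.
    step_w: round the result up to the next multiple of this value.
    """
    total_w = sum(component_tdps_w) + other_parts_w
    # Integer ceiling division avoids floating-point rounding surprises
    needed_w = -(-total_w * 100 // target_load_pct)
    return -(-needed_w // step_w) * step_w


# A 90W CPU paired with a 200W GPU
print(estimate_psu_watts([90, 200]))  # 500
```

With these assumed margins, a 90W CPU and a 200W GPU come out at 500W. Treat the result as a starting point only, since actual power draw can differ from TDP.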


| GPU | TDP | Suggested PSU |
|---|---|---|
| RTX 4090 | 450W | 850W |
| RTX 4080 | 320W | 750W |
| RTX 4070 Ti | 285W | 700W |
| RTX 4070 | 200W | 650W |
| RTX 3090 | 350W | 850W |
| RTX 3080 | 320W | 750W |
| RTX 3070 | 220W | 650W |
| Radeon RX 7900 XTX | 355W | 800W |
| Radeon RX 7900 XT | 300W | 700W |
| Radeon RX 7800 XT | 300W | 600W |
| Radeon RX 7700 XT | 245W | 550W |
| Radeon RX 7600 | 165W | 450W |
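
To see how much room each suggested PSU leaves for the CPU and the rest of the system, the table can be turned into a small lookup. This is a minimal sketch: the names `GPU_TABLE` and `psu_headroom_w` are hypothetical, and the figures are copied from the table above.

```python
# (TDP in watts, suggested PSU in watts), copied from the table above
GPU_TABLE = {
    "RTX 4090": (450, 850),
    "RTX 4080": (320, 750),
    "RTX 4070 Ti": (285, 700),
    "RTX 4070": (200, 650),
    "RTX 3090": (350, 850),
    "RTX 3080": (320, 750),
    "RTX 3070": (220, 650),
    "Radeon RX 7900 XTX": (355, 800),
    "Radeon RX 7900 XT": (300, 700),
    "Radeon RX 7800 XT": (300, 600),
    "Radeon RX 7700 XT": (245, 550),
    "Radeon RX 7600": (165, 450),
}


def psu_headroom_w(gpu_name):
    """Return the watts left over after subtracting the GPU's TDP from the suggested PSU."""
    tdp_w, psu_w = GPU_TABLE[gpu_name]
    return psu_w - tdp_w


for name in GPU_TABLE:
    print(f"{name}: {psu_headroom_w(name)}W left for the rest of the system")
```

Every entry leaves a few hundred watts beyond the GPU's own TDP, which is the margin that covers the CPU, drives, fans, and momentary spikes in demand.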

Why is TDP important when picking PC parts?

If TDP still has you flabbergasted, think of it as a rating that gives you a rough sense of a component's heat output, power draw, and performance class. Using a CPU as an example, one with a higher TDP will usually offer more performance but will draw more electricity from the PSU. TDP is not, however, a direct measure of how much power a component will draw, but it can be a good indicator.

As you can see in our Intel Core i7-14700K review and NVIDIA RTX 4090 review, these powerhouses give off a lot of heat. Make sure the cooling you have on hand is more than enough to keep your components cool, especially the GPU and CPU.

Rich Edmonds was formerly a Senior Editor of PC hardware at Windows Central, covering everything related to PC components and NAS. He's been involved in technology for more than a decade and knows a thing or two about the magic inside a PC chassis. You can follow him on Twitter at @RichEdmonds.

- Colton Stradling, Contributor