Just what sort of GPU do you need to run local AI with Ollama? — The answer isn't as expensive as you might think

AI needs horsepower and Ollama needs GPUs, but you don't have to run out and hand over your life savings to get an RTX 5090, either.

AI is here to stay, and it's far more than just using online tools like ChatGPT and Copilot. Whether you're a developer, a hobbyist, or just want to learn some new skills and a little about how these things work, local AI is a better choice.

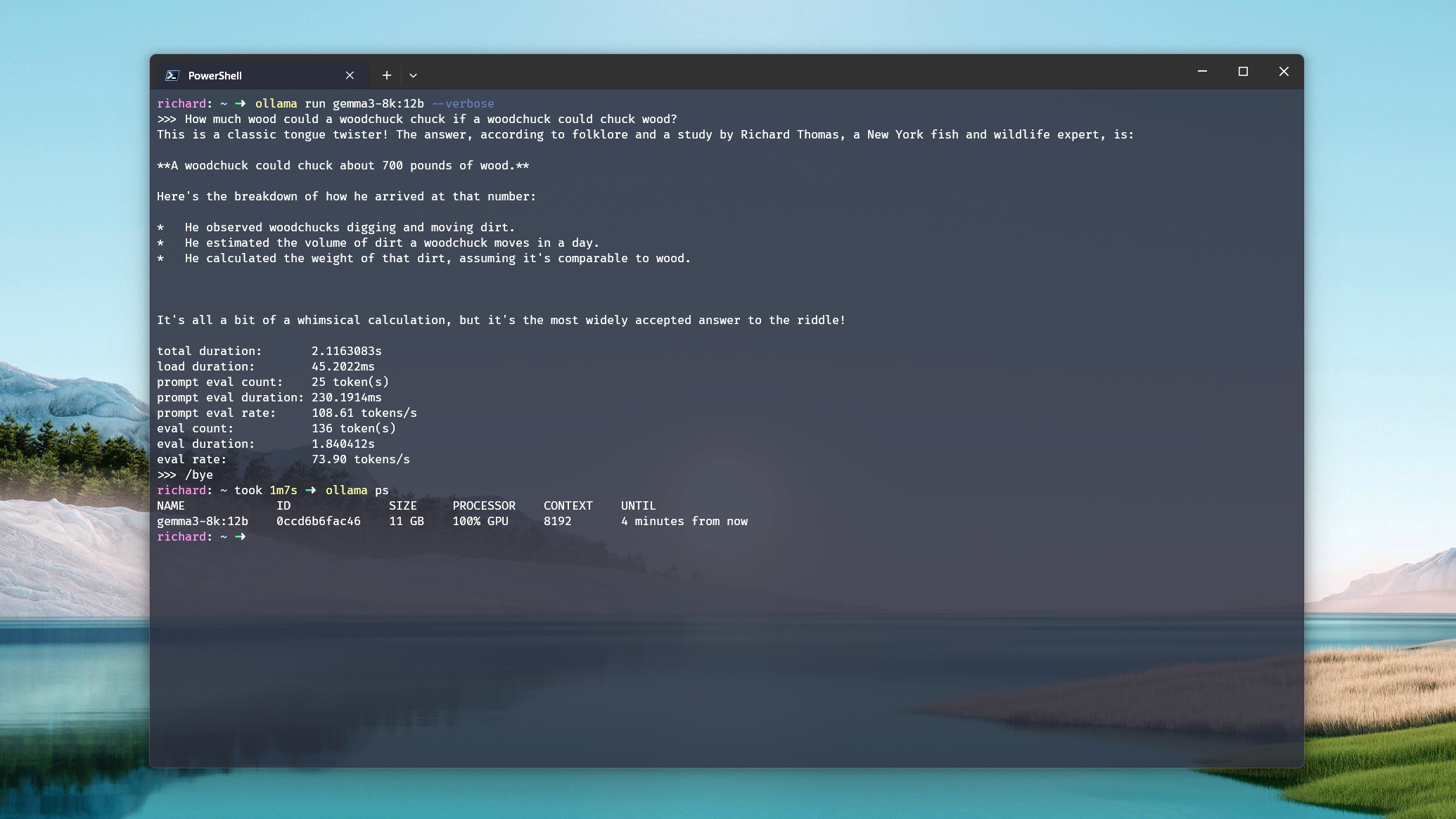

Ollama is one of the easiest and most popular ways to dabble with LLMs on your local PC. But one thing you need that you don't when using ChatGPT is decently powerful local hardware. That's especially true of Ollama, because it only supports dedicated GPUs right now. But even if you're using LM Studio with integrated GPUs, you still need some decent hardware to get good performance.

So, you might be thinking you need to spend an extortionate amount of money, right? Wrong. While, yes, you can run out and drop thousands on an RTX 5090, you don't need to. There's plenty of mileage to be had buying older or cheaper cards. If you have the budget to run an NVIDIA H100, more power to you, but there's a much cheaper way.

VRAM is key for Ollama

The priority when buying a GPU for AI is not the same as when buying one for gaming. When you're looking for a new gaming system, usually you'll lean towards the latest gen, and the most powerful you can afford, all in pursuit of the best looking graphics and highest frame rates.

There's an element of this type of thought process when buying for AI, but it's less important for the home user. Sure, the latest 50 series GPUs may have more CUDA cores, faster compute, and better memory bandwidth. But memory capacity is king.

If you don't have a mega budget to buy the latest, most powerful AI GPUs out there, your priority should be VRAM. Get as much of it as you possibly can.

To leverage your GPU to its fullest using local LLMs, you want the model you're using to be loaded entirely into the GPU's VRAM. For one, VRAM is faster than system memory. For another, once the model spills out into system RAM, the CPU has to pick up some of the slack, which is what Ollama does when this happens, and you're going to hemorrhage performance.

As a brief example, let's look at Deepseek-r1:14b running on my own PC with an NVIDIA RTX 5080 with 16GB VRAM, backed by an Intel Core i7-14700K and 32GB DDR5 RAM. Using a simple prompt of "tell me a story," it generates about 70 tokens per second with a context window of up to 16k. If I increase beyond that, the model no longer fits entirely into the GPU memory and starts engaging the system RAM and the CPU.

When this happens, output drops to 19 tokens per second, despite the CPU/GPU split being 21%/79%. Even when the GPU is still doing the majority of the work, when the CPU and RAM get called into action, performance plummets.
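If you want to take the same kind of measurement yourself, here is a minimal sketch. It assumes you already have the JSON body returned by Ollama's `/api/generate` endpoint, which reports `eval_count` (tokens generated) and `eval_duration` (generation time in nanoseconds). The sample values below are made up purely to illustrate the arithmetic, and the helper name is hypothetical.

```python
def tokens_per_second(response: dict) -> float:
    """Generation speed from an Ollama /api/generate response body.

    eval_count is the number of generated tokens and eval_duration is
    the time spent generating them, reported in nanoseconds.
    """
    seconds = response["eval_duration"] / 1e9
    return response["eval_count"] / seconds


# Hypothetical values, not a real measurement: 700 tokens in 10 seconds.
sample = {"eval_count": 700, "eval_duration": 10_000_000_000}
print(f"{tokens_per_second(sample):.1f} tokens/s")
```

Run the same prompt at two different context sizes and compare the results. Going from 70 to 19 tokens per second, as in the example above, is roughly a 3.7x slowdown.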

The same applies to laptops, too, even if we're focusing on desktop GPUs here. If AI is important, get one with a GPU that has as much VRAM as possible.

So, how much VRAM do you need?

The short answer is as much as possible. The better answer is as much as your budget allows for. But the more accurate answer rests on the models you want to use. I can't answer that one for you, but I can help you work out what you need.

If you go to the models page on Ollama's website, each model is listed with details on how big it is. At the bare minimum, this figure is the amount of VRAM you'll need to load the model onto your GPU entirely. But you'll need more than this to actually get anything done. The larger the context window, and the more information you're feeding into the LLM, the more memory you'll use for what's known as the KV cache.
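To get a feel for why context length eats memory, here is a rough sketch of the usual back-of-the-envelope KV cache calculation for a transformer: one key and one value vector per layer, per KV head, per token. The model shape used here (48 layers, 8 KV heads, head dimension 128, 16-bit cache values) is a hypothetical example and not the spec of any model mentioned in this article. Real runtimes may quantize or allocate the cache differently, so treat the output as a ballpark only.

```python
def kv_cache_gib(n_layers: int, n_kv_heads: int, head_dim: int,
                 context_tokens: int, bytes_per_value: int = 2) -> float:
    """Approximate KV cache size in GiB for a given context length."""
    # The leading 2 covers one key tensor plus one value tensor per layer.
    total_bytes = (2 * n_layers * n_kv_heads * head_dim
                   * context_tokens * bytes_per_value)
    return total_bytes / 1024**3


# Hypothetical model shape, for illustration only.
for ctx in (8_192, 16_384, 32_768):
    size = kv_cache_gib(n_layers=48, n_kv_heads=8, head_dim=128,
                        context_tokens=ctx)
    print(f"{ctx:>6} tokens -> {size:.1f} GiB of KV cache")
```

The point to take away is that the cache grows linearly with the context window: double the context and you double the extra memory needed on top of the model itself.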

Many folks recommend taking the model's physical file size and multiplying it by 1.2 to get a ballpark figure. Example time!

OpenAI's gpt-oss:20b is 14GB. Multiplying that by 1.2 gives you a figure of 16.8GB. From personal experience, I can attest to some accuracy here. Unless I run it on the 16GB RTX 5080 with an 8k or lower context window, I can't keep it loaded entirely on the GPU.

At 16k and above, the CPU and RAM get called upon, and performance starts to go over a cliff. So, in an ideal world, I'd say you would want a 24GB VRAM GPU to use this model however you wish to use it comfortably.
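The 1.2x rule of thumb is easy to turn into a quick check. This is only a sketch of that ballpark estimate, using the 14GB file size from the example above. The function names are made up for illustration, and the 1.2 multiplier is the community heuristic, not a guarantee.

```python
def estimated_vram_gb(model_file_gb: float, overhead: float = 1.2) -> float:
    """Ballpark VRAM needed: model file size times a rule-of-thumb factor."""
    return model_file_gb * overhead


def fits_in_vram(model_file_gb: float, vram_gb: float,
                 overhead: float = 1.2) -> bool:
    """True if the ballpark estimate fits within the card's VRAM."""
    return estimated_vram_gb(model_file_gb, overhead) <= vram_gb


model_gb = 14  # gpt-oss:20b, per the figure quoted above
print(f"Estimated need: {estimated_vram_gb(model_gb):.1f}GB")
for vram in (16, 24):
    verdict = "fits" if fits_in_vram(model_gb, vram) else "spills to system RAM"
    print(f"{vram}GB card: {verdict}")
```

By this estimate, a 16GB card comes up just short while a 24GB card has room to spare, which lines up with the experience described above.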

It harks back to the point above. The more you call on the CPU and RAM, the worse your performance will be. Be that larger models, larger context windows, or a combination of both, you want to keep that GPU in play as long as possible.

Older, cheaper GPUs can still be great buys for AI

If you search around on the web long enough, you'll see a pattern emerging. Many would argue the RTX 3090 is the best buy for power users right now, balancing price, VRAM, and compute performance.

Taking different architectures out of the equation, the RTX 3090 is on par with the RTX 5080 when it comes to CUDA core count and memory bandwidth. It leaps ahead of the RTX 5080 when it comes to VRAM, with 24GB in the older card. The 350W TDP of the RTX 3090 is significantly lower than that of the 24GB RTX 4090 and 32GB RTX 5090, too. Obviously, the RTX 5090 will perform the best, but it's also absurdly expensive to buy and run.

There is another way, too. The RTX 3060 is something of a darling of the AI community because of its 12GB of VRAM and its relatively low cost. Memory bandwidth is significantly lower, but so is the TDP at just 170W. You could have two of these and match the TDP and total VRAM of an RTX 3090, while spending much less.
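The two-card comparison is simple arithmetic, sketched below using the VRAM and TDP figures quoted in this article. The `Gpu` class and `totals` helper are hypothetical names for illustration. Bear in mind that VRAM pooled across two cards is not identical to a single 24GB pool, since the model has to be split between the cards, so treat this as a rough comparison.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Gpu:
    name: str
    vram_gb: int
    tdp_watts: int


def totals(gpus: list[Gpu]) -> tuple[int, int]:
    """Return (total VRAM in GB, total TDP in watts) for a set of cards."""
    return (sum(g.vram_gb for g in gpus), sum(g.tdp_watts for g in gpus))


# Figures as quoted in the article.
rtx_3060 = Gpu("RTX 3060", vram_gb=12, tdp_watts=170)
rtx_3090 = Gpu("RTX 3090", vram_gb=24, tdp_watts=350)

pair_vram, pair_tdp = totals([rtx_3060, rtx_3060])
single_vram, single_tdp = totals([rtx_3090])
print(f"2x RTX 3060: {pair_vram}GB VRAM, {pair_tdp}W")
print(f"1x RTX 3090: {single_vram}GB VRAM, {single_tdp}W")
```

On paper, the pair gives you the same 24GB at 340W against the single card's 350W, so the deciding factors become price and memory bandwidth.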

The point is that you absolutely do not need to run out and buy the biggest, most expensive GPU you can find. It's a balancing act, so do some research into what you want to use.

Then decide how much you'd like to spend, and get a GPU with the fastest, largest pool of VRAM you can. Ollama will let you use more than one GPU, so if two 12GB cards are cheaper than a single 24GB card, it's an option worth consideration.

And finally...

If you can, go NVIDIA. Ollama does support a number of AMD GPUs, but honestly, you want NVIDIA right now for local AI. The red team is constantly making advancements, but NVIDIA has such a head start, it's just the way things stand right now.

AMD has its strengths, though, especially in its mobile chips for laptops and mini PCs. For the price of one of today's best graphics cards, you could buy a whole mini PC with a Strix Halo generation Ryzen AI Max+ 395 APU paired with 128GB of unified memory, and the true desktop class Radeon 8060S integrated graphics.

Ollama wouldn't be your play on a system like this, or anything else using a Ryzen AI chip, since it doesn't support the iGPUs. But LM Studio with its Vulkan support does. A 128GB Strix Halo system gives you up to 96GB of memory to dedicate to LLMs, and that's a whole heck of a lot.

You have options, though, and options that are more budget friendly. If you're looking at an older generation card, check it against Ollama's list of supported GPUs. Then get as much VRAM as you can, even across multiple GPUs if it makes sense. If you can score a pair of 12GB RTX 3060s for $500-$600, you're off to a really strong start.

But don't let your hardware, or your budget, stop you joining in the fun. Just do a little research, first, to maximize your returns.

Richard Devine is the Managing Editor at Windows Central with over a decade of experience. A former Project Manager and long-term tech addict, he joined Mobile Nations in 2011 and has been found in the past on Android Central as well as Windows Central. Currently, you'll find him steering the site's coverage of all manner of PC hardware and reviews. Find him on Mastodon at mstdn.social/@richdevine
