Microsoft’s new AI silicon is here — is this what will stop OpenAI's $14 billion bonfire?

Microsoft's new custom chip targets the AI efficiency gap as the tech giant moves to slash the cost of ChatGPT and Copilot responses.



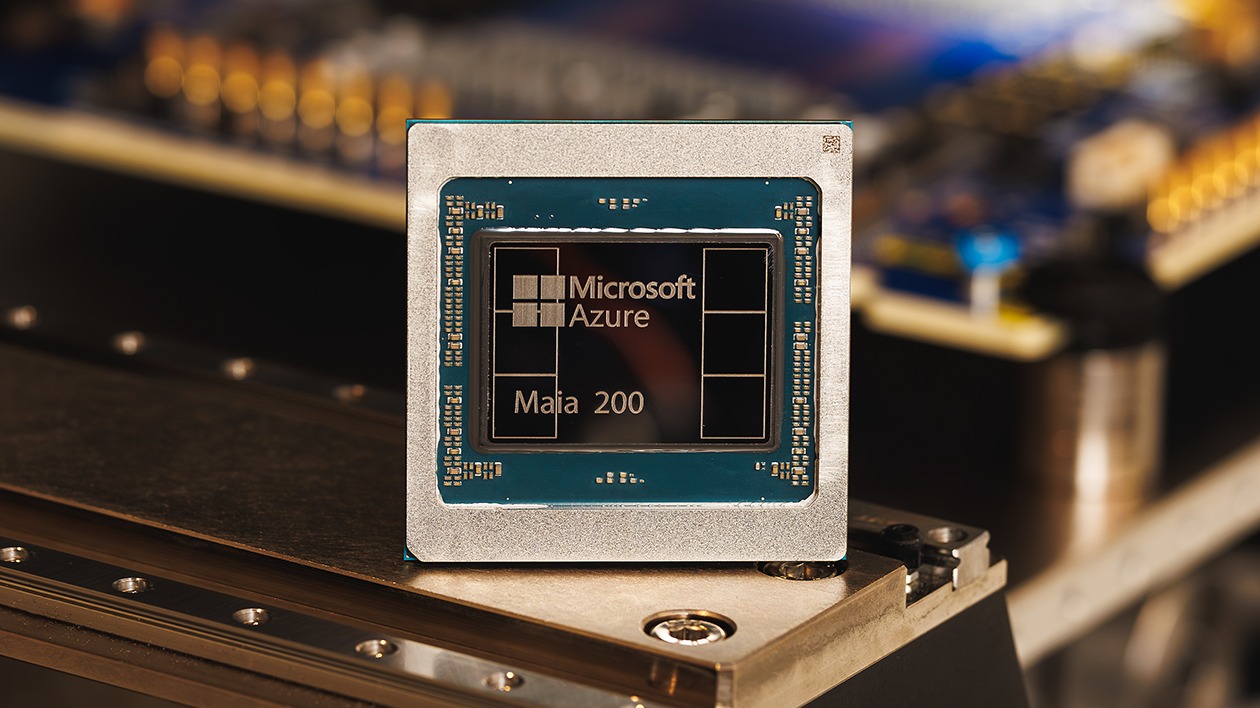

Microsoft just announced Maia 200, an AI chip set to compete with the best from Amazon and Google. The chip is designed specifically for large-scale AI workloads.

Maia 200 is made on TSMC's 3nm process. Microsoft claims the chip is "the most performant, first-party silicon from any hyperscaler." The tech giant added that Maia 200 has three times the FP4 performance of Amazon's third-gen Trainium chip and better FP8 performance than Google's seventh-gen TPU.

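FP4 and FP8 refer to 4-bit and 8-bit floating-point formats. Low-precision formats like these are common in AI work because they give up some numerical accuracy in exchange for higher speed and lower memory use, and FP8 is generally seen as a good balance between the two. NVIDIA has a full explainer on floating point and how it relates to AI.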
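To make the idea of a 4-bit float concrete, here is a minimal Python sketch. It assumes the E2M1 layout (1 sign bit, 2 exponent bits, 1 mantissa bit, exponent bias of 1) that is commonly used for FP4. Microsoft's announcement, as reported here, does not say which FP4 encoding Maia 200 uses, so treat the layout and the function names `decode_e2m1` and `quantize_fp4` as illustrative assumptions rather than a description of the chip.

```python
def decode_e2m1(bits: int) -> float:
    """Decode a 4-bit pattern as an E2M1 float (assumed layout, bias 1)."""
    sign = -1.0 if (bits >> 3) & 1 else 1.0
    exponent = (bits >> 1) & 0b11
    mantissa = bits & 0b1
    if exponent == 0:
        # Subnormal case: no implicit leading 1, so only 0 and 0.5 exist here.
        return sign * (mantissa * 0.5)
    # Normal case: implicit leading 1, scaled by 2^(exponent - bias).
    return sign * (1.0 + mantissa * 0.5) * 2.0 ** (exponent - 1)


# Every value a 4-bit E2M1 number can represent (+0 and -0 collapse to one entry).
ALL_VALUES = sorted({decode_e2m1(b) for b in range(16)})
POSITIVE = [v for v in ALL_VALUES if v >= 0]


def quantize_fp4(x: float) -> float:
    """Round x to the nearest representable value (simple nearest-match sketch)."""
    return min(ALL_VALUES, key=lambda v: abs(v - x))


if __name__ == "__main__":
    print(POSITIVE)
    print(quantize_fp4(2.4))
```

With only 15 distinct values available under this layout, rounding error is large, which is why 4-bit formats are typically paired with scaling factors and reserved for workloads such as inference that tolerate the loss.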

Microsoft states that in addition to having better performance in certain areas, Maia 200 is more efficient than competing chips. According to the company, Maia 200 delivers 30% better performance per dollar than the latest generation of hardware it currently uses.

Maia 200 has already been deployed in Microsoft's datacenter region near Des Moines, Iowa. The US West 3 datacenter region near Phoenix, Arizona will be the next to see Maia 200 used.

In a video explaining Maia 200, Microsoft Executive Vice President Scott Guthrie noted that the chip is designed to be cooled with water, which is more efficient than air cooling. Guthrie also said the chip's cooling produces "zero water waste" and that Maia 200 can be used in a way that "protects the environment and the communities that [Microsoft runs] in."

Microsoft has been criticized for how much electricity and water its data centers use. The company announced plans to build "Community-First" AI infrastructure, though the tech giant was mocked for the announcement.


Why this matters

OpenAI, the maker of ChatGPT, is one of the hottest names in tech. It is also on pace to lose billions of dollars in 2026. Reports suggest the company could lose as much as $14 billion just this year.

Training AI models takes a tremendous amount of computing power, but that is far from the only cost of operating LLMs. Even after a model is trained, running that model costs money for each use.

Microsoft claims that Maia 200 is the most efficient inference system the company has deployed and that it delivers 30% better performance per dollar than the latest generation of hardware used by the tech giant.

If those figures hold up, they could substantially reduce operating costs for OpenAI and other companies whose models run on Maia 200.

"Maia 200 is part of our heterogenous AI infrastructure and will serve multiple models, including the latest GPT-5.2 models from OpenAI, bringing performance per dollar advantage to Microsoft Foundry and Microsoft 365 Copilot," said Microsoft.

Maia 200 is an inference accelerator, engineered to make AI token generation more affordable. Training a model is a massive, largely up-front expense. Inference, the process of the AI actually answering a user, is a continuous cost that grows with every request. Microsoft optimized this chip specifically for that task, with the goal of slashing the "per-token" cost of running services like ChatGPT and Copilot.
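It helps to translate "30% better performance per dollar" into a per-token saving. The sketch below assumes that "performance" means tokens served and that the gain applies to the entire workload, neither of which Microsoft's statement confirms. The baseline price of $10 per million tokens is purely hypothetical and is there only to make the arithmetic visible.

```python
def cost_ratio(perf_per_dollar_gain: float) -> float:
    """New cost for the same work, as a fraction of the old cost.

    If each dollar buys (1 + gain) times as much work, the same work
    costs 1 / (1 + gain) of what it did before.
    """
    return 1.0 / (1.0 + perf_per_dollar_gain)


def cost_reduction(perf_per_dollar_gain: float) -> float:
    """Fractional saving on the same workload."""
    return 1.0 - cost_ratio(perf_per_dollar_gain)


if __name__ == "__main__":
    gain = 0.30                 # Microsoft's claimed performance-per-dollar gain
    baseline_per_million = 10.0  # hypothetical cost in dollars, not a real price
    new_cost = baseline_per_million * cost_ratio(gain)
    print(f"Cost reduction: {cost_reduction(gain):.1%}")
    print(f"Hypothetical cost per million tokens: ${new_cost:.2f}")
```

Under these assumptions, a 30% gain in performance per dollar works out to roughly a 23% cut in cost for the same number of tokens, not a 30% cut, because the gain sits in the denominator.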

If Maia 200 delivers in the way Microsoft described, the chip will help reduce the costs of operating LLMs, which could significantly help OpenAI and other companies that use the chip.

Microsoft says this chip will save money in token costs. Do you think custom silicon is enough to make AI profitable by 2027? Let us know in the comments.


Sean Endicott is a news writer and apps editor for Windows Central with 11+ years of experience. A Nottingham Trent journalism graduate, Sean has covered the industry’s arc from the Lumia era to the launch of Windows 11 and generative AI. Having started at Thrifter, he uses his expertise in price tracking to help readers find genuine hardware value.

Beyond tech news, Sean is a UK sports media pioneer. In 2017, he became one of the first to stream via smartphone and is an expert in AP Capture systems. A tech-forward coach, he was named 2024 BAFA Youth Coach of the Year. He is focused on using technology—from AI to Clipchamp—to gain a practical edge.
