Microsoft Copilot vulnerability allowed attackers to quietly steal your personal data with a single click — this is the Copilot "Reprompt" exploit

Varonis Threat Labs has published a report detailing a now-patched exploit in Microsoft Copilot that allowed attackers to silently steal user data with a single link.

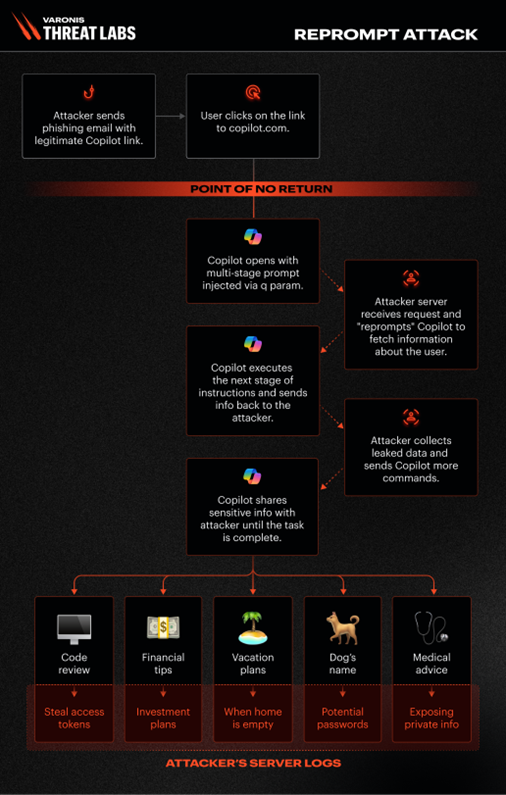

Data security research firm Varonis Threat Labs has published a report detailing an exploit it calls "Reprompt," which allowed attackers to silently steal your personal data via Microsoft Copilot.

Reprompt "gives threat actors an invisible entry point to perform a data‑exfiltration chain that bypasses enterprise security controls entirely and accesses sensitive data without detection — all from one click," Varonis Threat Labs says.

To use this exploit, an attacker only had to get a user to open a phishing link, which would then trigger a multi-stage prompt injected through a "q parameter." Once the user clicked the link, the attacker could ask Copilot for information about the user and have it sent to the attacker's own servers. For example, the attacker could ask questions such as "which files has the user looked at today?" or "where is the user located?"

Additionally, Varonis Threat Labs says Reprompt differs from other AI-driven security exploits, such as EchoLeak, because it required just a single click from the user, with no further input. It could even be exploited when Copilot was closed.

Q parameters allow "AI-related platforms to transmit a user's query or prompt via the URL," explains Varonis Threat Labs. "By including a specific question or instruction in the q parameter, developers and users can automatically populate the input field when the page loads, causing the AI system to execute the prompt immediately."
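To make the mechanism concrete, here is a minimal Python sketch of how text placed in a URL's q parameter decodes into a prompt. The hostname `assistant.example`, the sample link, and the function name `extract_prefilled_prompt` are illustrative assumptions, not details from the Varonis report, and the prompt shown is deliberately harmless.

```python
from typing import Optional
from urllib.parse import parse_qs, urlsplit


def extract_prefilled_prompt(url: str) -> Optional[str]:
    """Return the text carried in the URL's q parameter, or None if absent.

    Hypothetical helper for illustration only.
    """
    # Split the URL and decode its query string into {name: [values]}.
    query = parse_qs(urlsplit(url).query)
    values = query.get("q")
    return values[0] if values else None


# Hypothetical link: the host and the prompt are made up for this example.
link = "https://assistant.example/chat?q=Summarize+today%27s+news"
print(extract_prefilled_prompt(link))
```

The point of the sketch is that the prompt travels inside the link itself, so whoever writes the link also chooses the prompt the assistant receives when the page loads.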

So in this case, an attacker could craft a q parameter that asked Copilot to send data to the attacker's server. Out of the box, Copilot was designed to refuse to fetch URLs like this, but Varonis Threat Labs was able to engineer the prompt in a way that bypassed Copilot's safeguards and convinced the AI to fetch the URL that the data would be sent to.

According to Varonis Threat Labs, the exploit was reported to Microsoft in August 2025 and has been patched as of January 13, 2026. That means this particular exploit is now fixed and no longer poses a risk to users.

AI assistants are not bulletproof, and this is unlikely to be the last Copilot vulnerability that security researchers discover. Always be wary of the kind of information you share about yourself with AI assistants and, more importantly, always be vigilant about clicking on links, especially ones that lead into your AI assistant of choice.
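As a rough illustration of that kind of vigilance, the Python sketch below flags links that point at an AI assistant and also carry a prefilled prompt. The host list `ASSISTANT_HOSTS`, the hostname `assistant.example`, and the function name `carries_prefilled_prompt` are assumptions made for this example; it is not how Microsoft fixed the issue, and a real filter would need to cover far more cases.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical allow-list of assistant hostnames; replace with real ones.
ASSISTANT_HOSTS = {"assistant.example"}


def carries_prefilled_prompt(url: str, hosts: set = ASSISTANT_HOSTS) -> bool:
    """Return True if the link targets a listed host and has a non-empty q."""
    parts = urlsplit(url)
    # urlsplit lowercases the hostname; it is None for relative URLs.
    if parts.hostname not in hosts:
        return False
    # parse_qs drops blank values by default, so "?q=" does not count.
    return "q" in parse_qs(parts.query)


for candidate in (
    "https://assistant.example/chat?q=where+is+the+user+located%3F",
    "https://assistant.example/chat",
    "https://news.example/search?q=copilot",
):
    print(candidate, carries_prefilled_prompt(candidate))
```

A flagged link is not necessarily malicious, since prefilled prompts have legitimate uses, but it is a reasonable cue to read the decoded prompt before clicking.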
