Using 'local AI' — how my 7-year-old laptop still punches above its weight

Is it fast? Not at all. Does it work, though? You bet. I'm running local AI on minimal hardware.

We're led to believe that running AI locally on a PC needs some kind of beefed-up hardware. That's partly true, but as with gaming, it's a sliding scale. You can play many of the same games on a Steam Deck as you can on a PC with an RTX 5090 inside. The experience is not the same, but what matters is that you can play.

That's also true of dabbling with local AI tools, such as running LLMs using something like Ollama. While a beefcake GPU with lashings of VRAM is ideal, it's not absolutely essential.

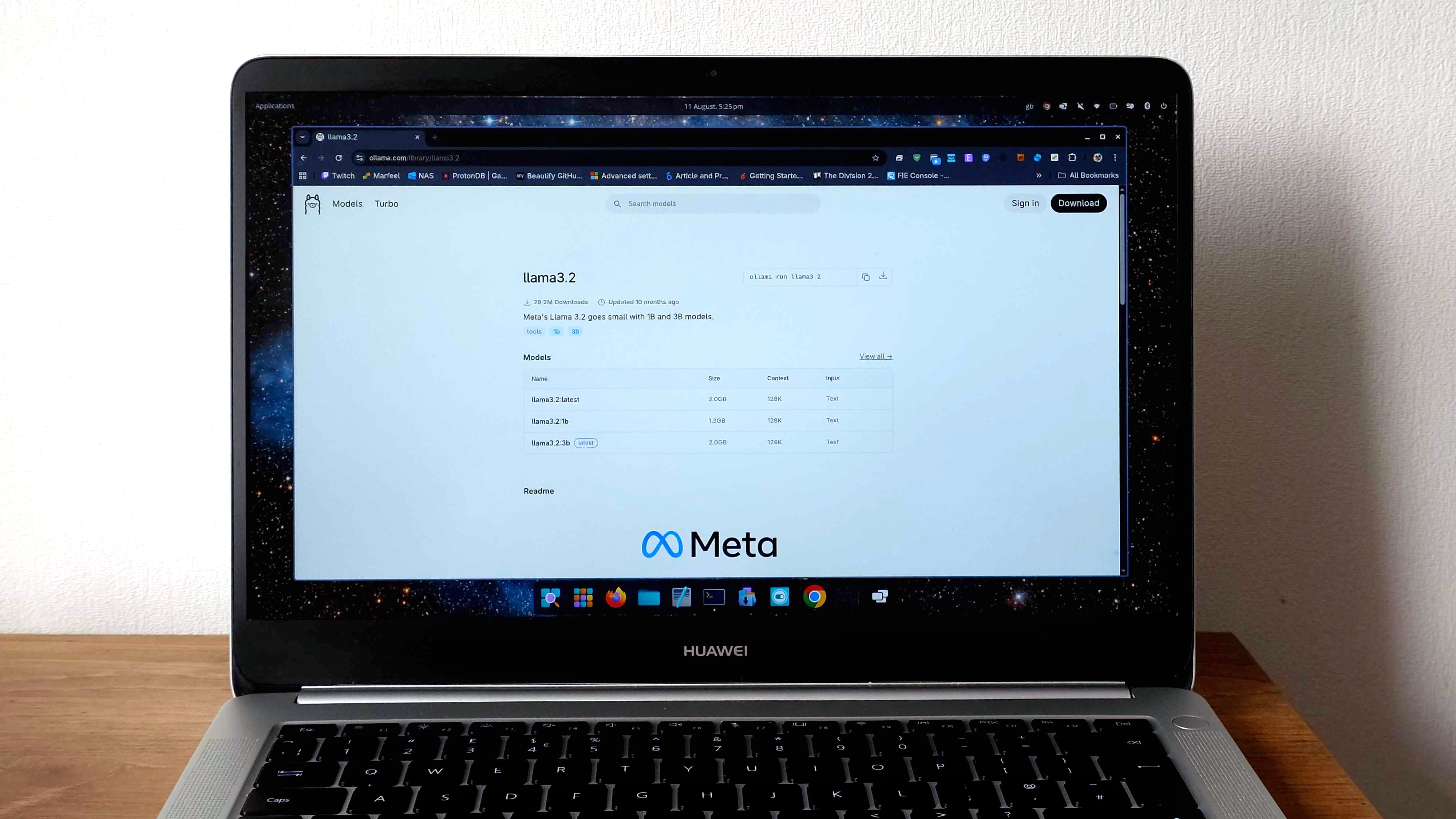

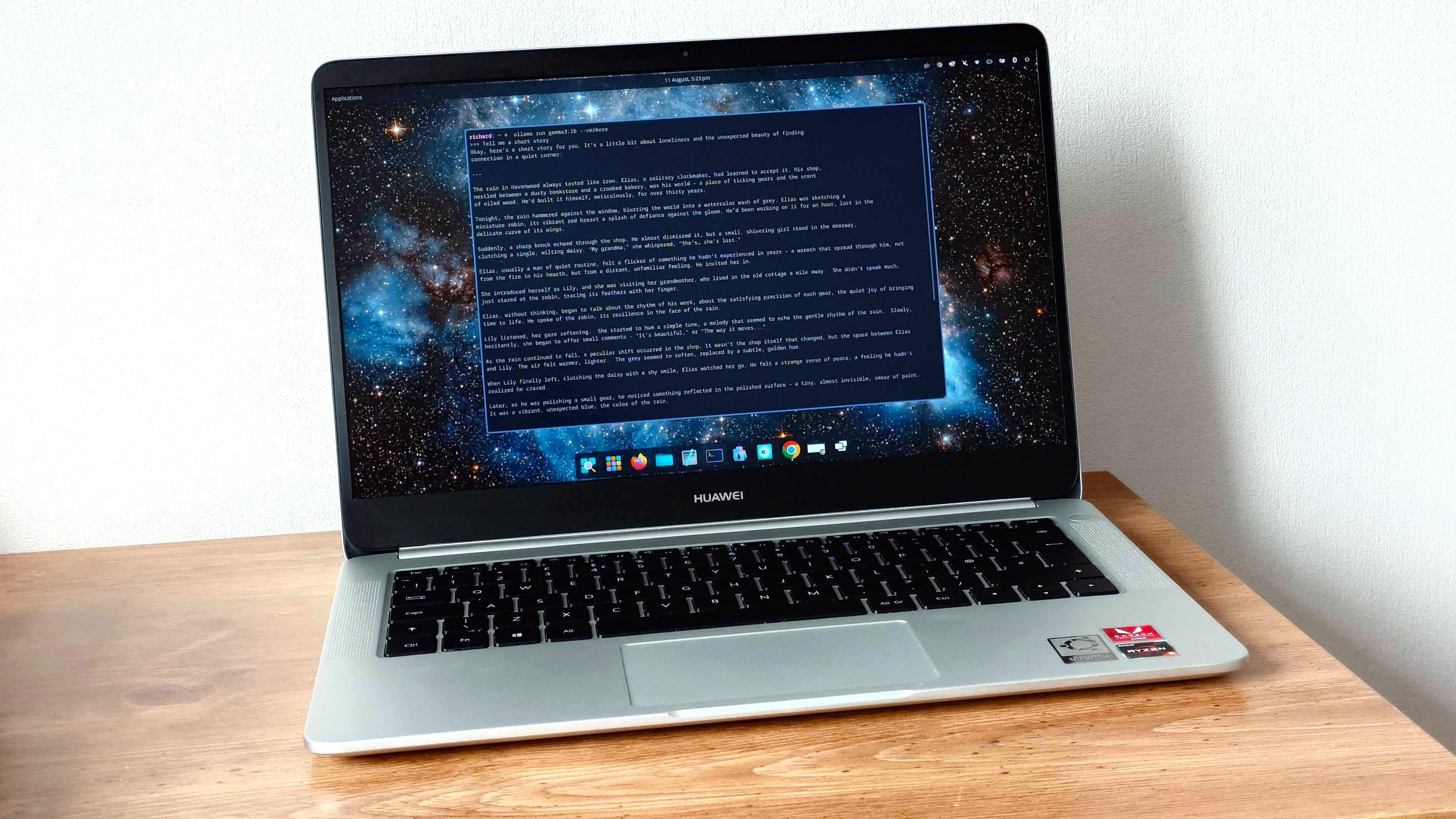

Case in point: my seven-year-old Huawei MateBook D has a now fairly underpowered AMD Ryzen 5 2500U, 8GB of RAM, and no dedicated graphics. But it can still run Ollama, it can still load up some LLMs, and I would say it's usable.

There are caveats to running AI on older hardware

Gaming is the best comparison I can think of for AI right now. To get the most from the latest, most demanding content, you need some serious hardware. But you can also enjoy many of the latest titles on older and lower-powered machines, even those that rely only on integrated graphics.

The caveat is that older, less powerful hardware simply won't perform as well. You're probably looking to hit 30 FPS instead of 144 FPS or more, and you'll have to sacrifice graphics settings, ray tracing, and resolution to get there. But you can do it.

The same is true of AI. You're not going to be churning out hundreds of tokens per second, nor will you be loading up the latest, biggest models.

But there are plenty of smaller models you can absolutely try out successfully on older hardware, as I have. If you have a compatible GPU, great, Ollama will use it. I don't, however, and I've still had some success.

Specifically, I've loaded some '1b' models, that is, models with around 1 billion parameters, into Ollama on my old laptop, which is currently running Fedora 42. The APU isn't officially supported by Windows 11 anyway, but I usually run Linux on older hardware regardless.
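If you'd rather script your prompts than type them into a chat window, Ollama also serves a local HTTP API. Below is a minimal sketch using only Python's standard library. It assumes a default install listening on localhost:11434 and a model already fetched with ollama pull. The helper names build_request and generate are hypothetical, made up for this example; the /api/generate endpoint, the stream flag, and the num_ctx option come from Ollama's API, so check them against the docs for your version.

```python
import json
import urllib.request

# Default address of a local Ollama server (assumed; change it if yours differs).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str, num_ctx: int = 4096) -> dict:
    """Build a non-streaming /api/generate payload with a fixed context length."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,                  # one JSON reply instead of a token stream
        "options": {"num_ctx": num_ctx},  # context window in tokens (4096 = "4k")
    }


def generate(model: str, prompt: str, num_ctx: int = 4096) -> dict:
    """POST the payload to a running Ollama server and return the parsed JSON reply."""
    body = json.dumps(build_request(model, prompt, num_ctx)).encode("utf-8")
    req = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)
```

With the server running, generate("gemma3:1b", "How much wood would a woodchuck chuck if a woodchuck could chuck wood?")["response"] should hold the model's answer as a string. Setting num_ctx per request keeps the context length explicit, rather than relying on whatever default your install uses.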

Ollama is platform-agnostic, though, with versions for Mac and Windows alongside Linux. So it doesn't matter what you're using; even an older Mac may get some mileage with this.

So, just how 'usable' is it?

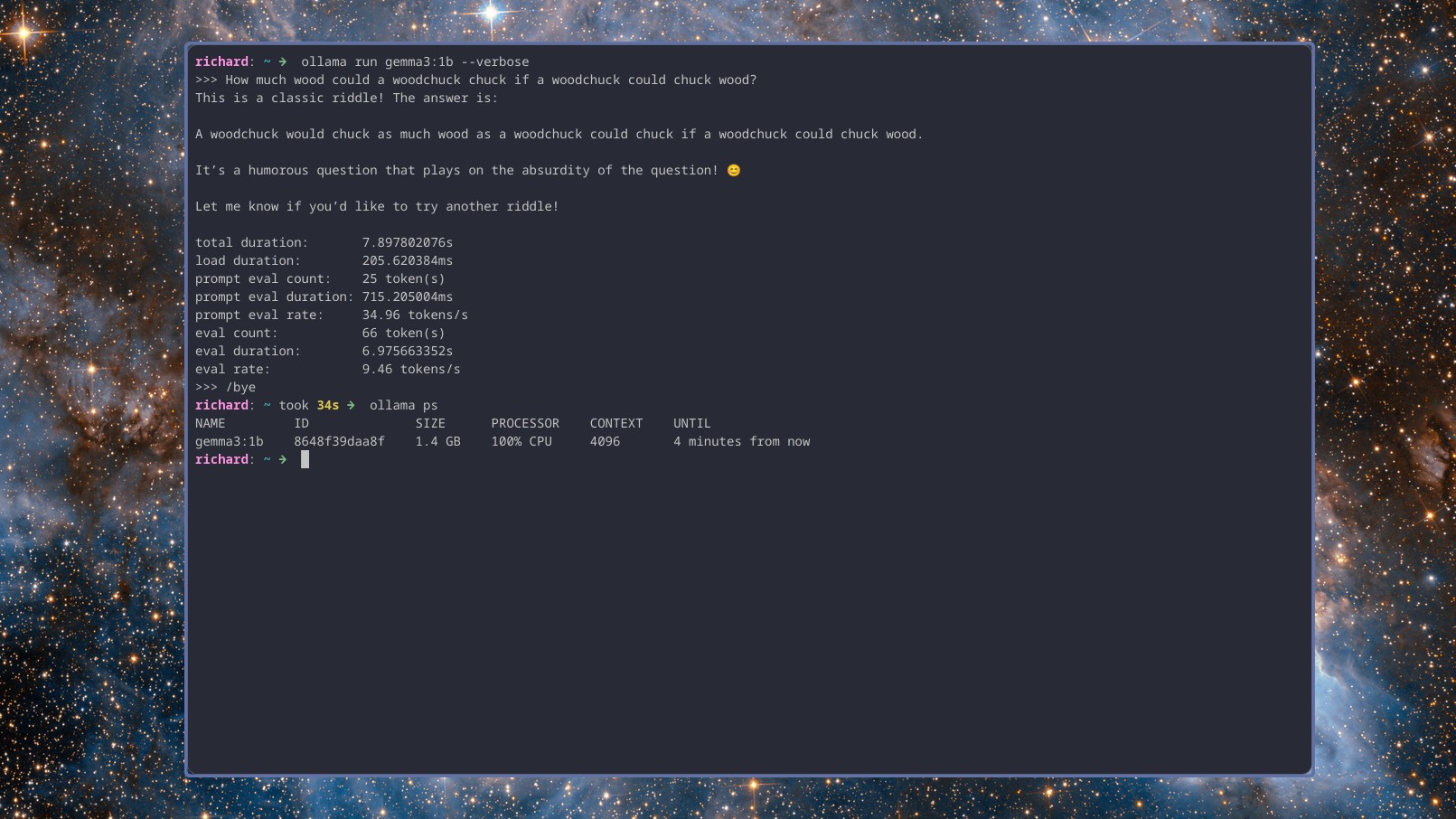

I haven't tried anything larger than these roughly 1b models on this laptop, and I don't think it's worth the time. I tested three such models, gemma3:1b, llama3.2:1b, and deepseek-r1:1.5b, and all delivered similar performance. In each case, the LLMs used a 4k context length, and I don't think I'd risk trying anything higher.
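One likely reason a bigger context is risky on an 8GB machine: on top of the weights, a model keeps a key/value (KV) cache that grows linearly with context length. The back-of-the-envelope sketch below makes that concrete. It assumes 16-bit cache entries and uses a hypothetical layer and head layout for a small 1B-class model. These are not the published specs of gemma3:1b, llama3.2:1b, or deepseek-r1:1.5b. It also ignores runtime overhead, so treat the results as orders of magnitude only.

```python
def weights_gb(params: float, bits_per_weight: float) -> float:
    """Rough size of a model's weights in GB (10**9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


def kv_cache_bytes(layers: int, ctx: int, kv_heads: int, head_dim: int,
                   bytes_per_value: int = 2) -> int:
    """KV cache size: a key and a value vector (x2) per layer, per token, per KV head."""
    return 2 * layers * ctx * kv_heads * head_dim * bytes_per_value


# Hypothetical shape for a small 1B-class model; check the real model card.
HYPOTHETICAL_1B = {"layers": 16, "kv_heads": 8, "head_dim": 64}

print(f"1B weights at 4 bits: ~{weights_gb(1e9, 4):.1f} GB")
for ctx in (4096, 8192, 32768):
    mib = kv_cache_bytes(ctx=ctx, **HYPOTHETICAL_1B) / 2**20
    print(f"{ctx:>6} tokens of context -> {mib:.0f} MiB of KV cache")
```

Under these assumptions, the cache is 128 MiB at 4,096 tokens and 1,024 MiB at 32,768. That 1 GiB would compete with the OS, the browser, and the weights for the same 8GB of shared RAM.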

First up is my old favorite:

"How much wood would a woodchuck chuck if a woodchuck could chuck wood?"

Both Gemma 3 and Llama 3.2 churned out a short, fairly quick response at just under 10 tokens per second. DeepSeek R1, by comparison, is a reasoning model, so it runs through its thought process before giving an answer, and it was a little behind at just under 8 tokens per second.
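Figures like "just under 10 tokens per second" are a generation rate. If you call Ollama's API directly, you can work out a comparable number from two fields in a non-streaming /api/generate reply: eval_count (tokens generated) and eval_duration (time spent generating, in nanoseconds). This is a minimal sketch of one way to measure it yourself, not necessarily how the figures above were captured. The sample values are made up for illustration.

```python
def tokens_per_second(reply: dict) -> float:
    """Generation speed from a non-streaming Ollama /api/generate reply.

    eval_count is the number of tokens generated; eval_duration is the time
    spent generating them, in nanoseconds, hence the 1e9 scaling.
    """
    return reply["eval_count"] * 1e9 / reply["eval_duration"]


# Made-up numbers for illustration, not measurements from this laptop.
sample = {"eval_count": 95, "eval_duration": 10_000_000_000}
print(f"{tokens_per_second(sample):.1f} tokens/s")  # prints "9.5 tokens/s"
```

The rate covers generation only. Prompt processing and model loading are timed separately in the same reply, so a reasoning model's long thought process shows up here as extra tokens, not as a lower rate per se.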

But while it's not what you'd call fast, it's usable. All three are still churning out responses significantly faster than I could type (and definitely faster than I can think).

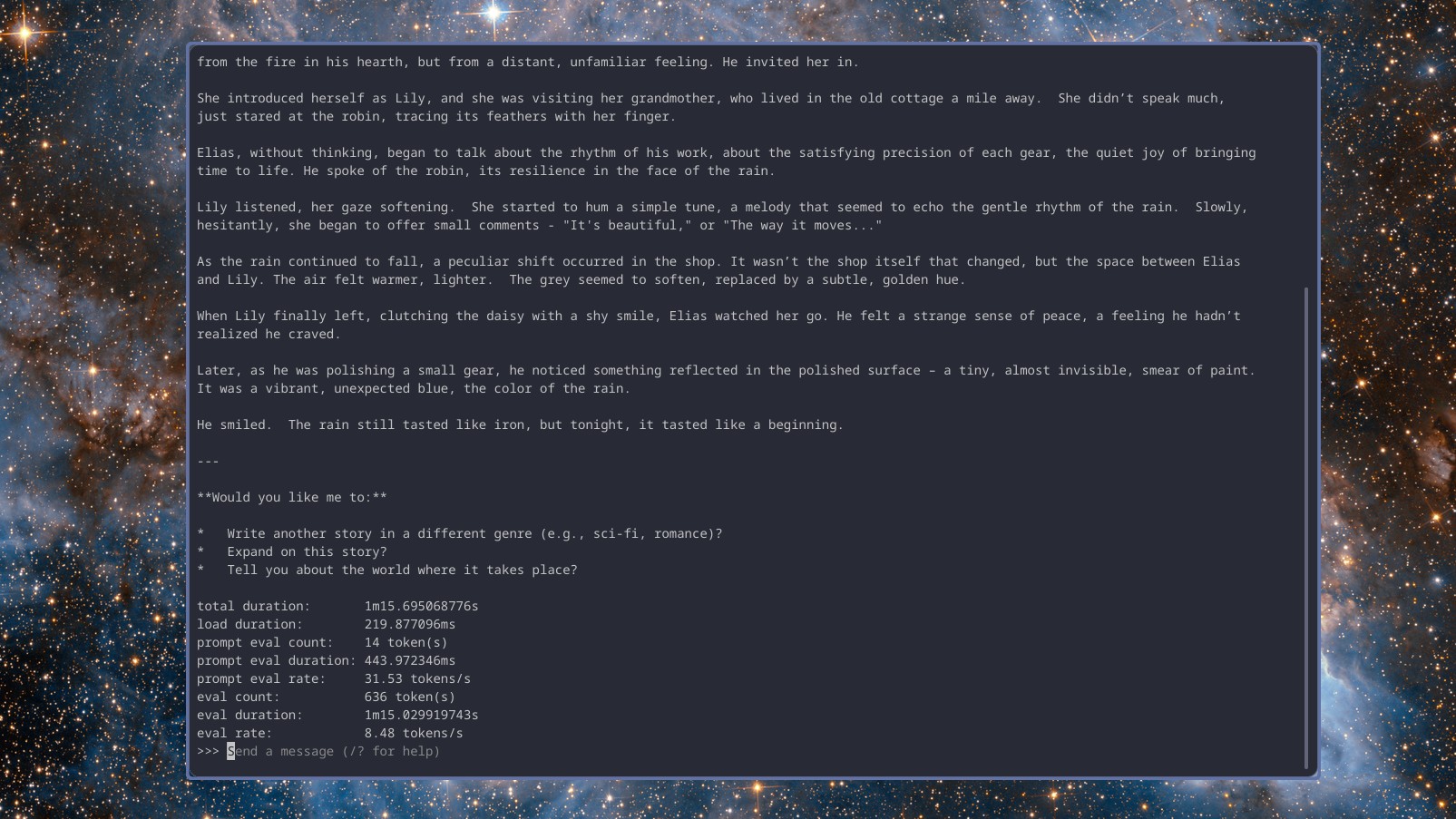

The second test was a bit meatier. I asked all three models to generate a simple PowerShell script that fetches the raw content of text files from a GitHub repository, and to ask me questions along the way so the final script was the best possible fit for what I wanted.

Note that I haven't actually validated whether the output worked. In this instance, I'm purely interested in how well (and how fast) the models are able to work the problem.

Gemma 3 gave an extremely detailed output explaining each part of the script, asked questions as directed to tailor it, and did it all at just under 9 tokens per second. DeepSeek R1, with its reasoning, was a little slower again at 7.5 tokens per second, and it did not ask any questions. Llama 3.2 gave output of similar quality to Gemma 3, also at just under 9 tokens per second.

Oh, and I haven't mentioned yet that this was all on battery power with a balanced power plan. When connected to external power, all three models essentially doubled their tokens per second and took about half as long to complete the task.
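The "double the speed, half the time" relationship is simple arithmetic. At a steady rate, generation time is just tokens divided by tokens per second. Here is a tiny sketch using a hypothetical 900-token response, with the battery rate rounded to 9 tokens per second and the plugged-in rate assumed to be double that.

```python
def generation_seconds(tokens: int, tokens_per_second: float) -> float:
    """Time spent producing a response of a given length at a steady rate."""
    return tokens / tokens_per_second


# Hypothetical 900-token answer; rates approximate "just under 9" on battery
# and roughly double that on mains power.
RESPONSE_TOKENS = 900
for label, rate in (("battery", 9.0), ("plugged in", 18.0)):
    print(f"{label}: {generation_seconds(RESPONSE_TOKENS, rate):.0f} s")
```

This prints 100 s on battery and 50 s plugged in. The sketch ignores model loading and prompt processing, which don't necessarily scale the same way, which is one reason real runs only take "about" half as long.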

I think that's the more interesting point here. You could be out and about with a laptop on battery and still get stuff done. At home or in the office, hooked up to power, even older hardware can be fairly capable.

This was all done purely on the CPU and RAM, too. The laptop reserves a couple of GB of RAM for the iGPU, but even so, that iGPU isn't supported by Ollama. A quick ollama ps shows the models running 100% on the CPU.
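The same check can be made from a script. Ollama's API has a /api/ps endpoint that lists loaded models, each with a total size and a size_vram field. A model with nothing in VRAM is running entirely on the CPU. The labelling logic below is an approximation of what the ollama ps column shows, not a copy of Ollama's own code. It assumes the default localhost:11434 address, and the function names are hypothetical.

```python
import json
import urllib.request

# Default address of a local Ollama server (assumed; adjust if yours differs).
PS_URL = "http://localhost:11434/api/ps"


def processor_split(size: int, size_vram: int) -> str:
    """Label where a loaded model lives, from its total size and the bytes held in VRAM."""
    if size_vram == 0:
        return "100% CPU"  # nothing offloaded to a GPU, as on this laptop
    if size_vram == size:
        return "100% GPU"
    if size == 0 or size_vram > size:
        return "unknown"
    cpu = round((size - size_vram) / size * 100)
    return f"{cpu}%/{100 - cpu}% CPU/GPU"


def loaded_models() -> list:
    """Return the models currently loaded by the local Ollama server."""
    with urllib.request.urlopen(PS_URL) as resp:
        return json.load(resp).get("models", [])


def report() -> list:
    """One line per loaded model, e.g. 'gemma3:1b: 100% CPU'."""
    return [
        f'{m.get("name", "?")}: {processor_split(m["size"], m["size_vram"])}'
        for m in loaded_models()
    ]
```

Call report() while a model is loaded (for example, right after a prompt). On a machine like this one, with an unsupported iGPU, every line should end in "100% CPU".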

These are small LLMs, but the truth is that you can play about with them, integrate them into your workflow, learn some skills, all without breaking the bank on new, crazy powerful hardware.

You don't have to look too far on YouTube to find creators running AI on a Raspberry Pi and home servers made up of older and (now) cheaper hardware. Even with an old, mid-tier laptop, you can probably at least get started.

It's not ChatGPT, but it's something. Even an old PC can be an AI PC.

Richard Devine is the Managing Editor at Windows Central with over a decade of experience. A former Project Manager and long-term tech addict, he joined Mobile Nations in 2011 and has been found in the past on Android Central as well as Windows Central. Currently, you'll find him steering the site's coverage of all manner of PC hardware and reviews. Find him on Mastodon at mstdn.social/@richdevine
