NVIDIA Chat with RTX hands-on: This local AI chatbot already shows plenty of promise

NVIDIA's AI chatbot runs locally on your PC, and it works with the data you provide.

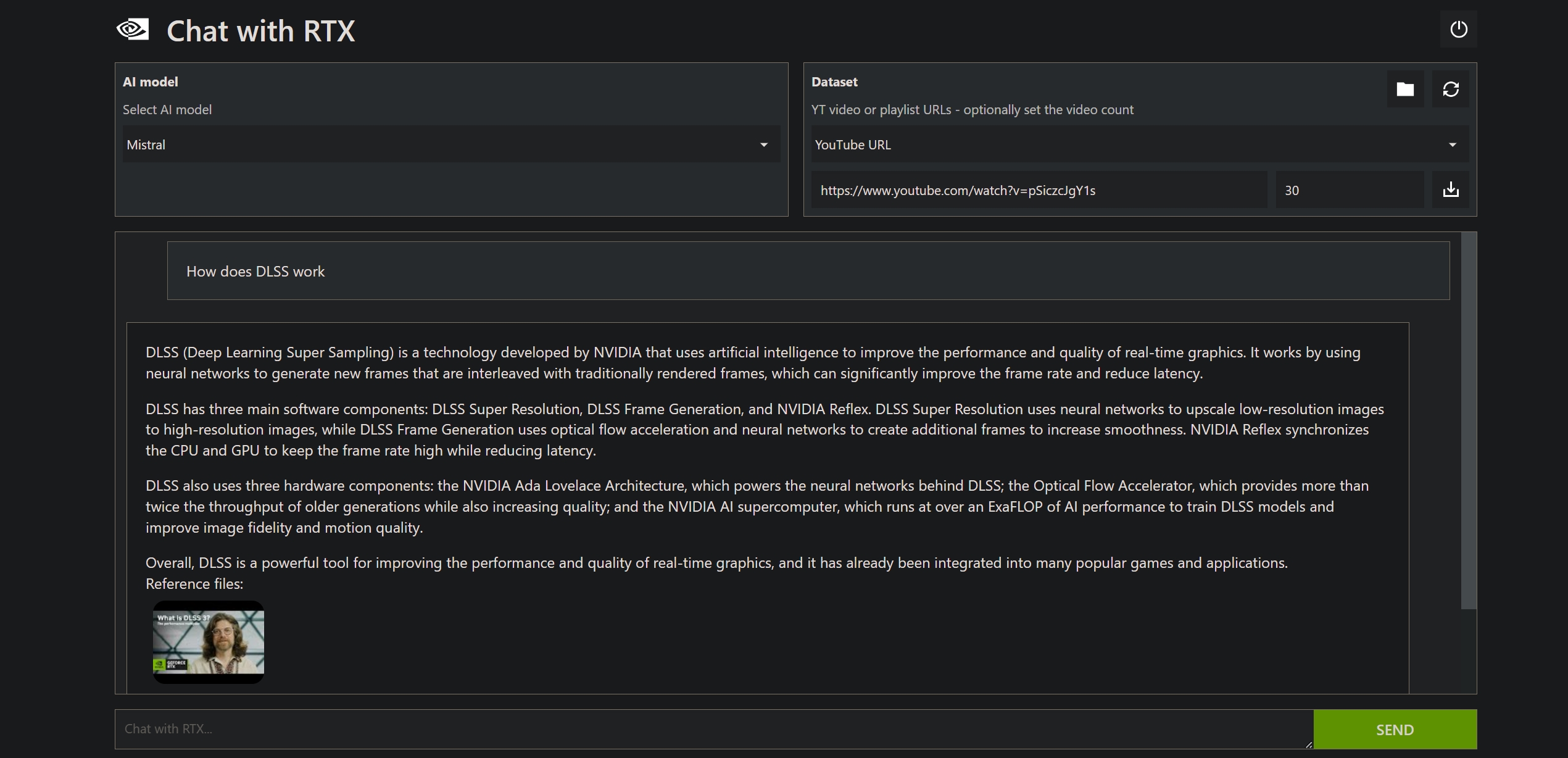

NVIDIA does a lot of interesting things around AI, but its consumer-facing business is still predominantly focused on gaming. It's now aiming to bring both categories together with Chat with RTX, an AI chatbot that runs locally on your PC. The software leverages the Tensor Cores built into NVIDIA's gaming GPUs (you'll need an RTX 30 or 40 series card to use it) and uses large language models (LLMs) to provide useful insights into your own data.

The key difference is that unlike ChatGPT and Copilot, Chat with RTX runs entirely on your PC and doesn't send any data to a cloud server. You feed it the relevant dataset, and it offers answers based on the information contained within. Another cool feature is that you can share YouTube links, and Chat with RTX interprets the content in the video and answers questions about it; it does this by pulling data from the video's closed captions file.

Chat with RTX is available as a free download, and the installer is 35GB. There are a few prerequisites: you'll need an RTX 30 or 40 series card with at least 8GB of VRAM, and a machine with at least 16GB of RAM. While NVIDIA recommends Windows 11, I had no issues running the utility on my Windows 10 machine. Right now, Chat with RTX is only available on Windows, with no word on when it will be coming to Linux.
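
If you want to sanity-check a machine against those prerequisites before committing to a 35GB download, the check can be sketched in a few lines of Python. This is a minimal illustration, not anything NVIDIA ships: the function name `meets_requirements` is hypothetical, the thresholds are simply the figures quoted above, and you supply the GPU name, VRAM, and RAM values yourself.

```python
import re

# Minimums quoted in the article; everything else here is a hypothetical helper.
MIN_VRAM_GB = 8
MIN_RAM_GB = 16
SUPPORTED_SERIES = ("30", "40")


def meets_requirements(gpu_name: str, vram_gb: float, ram_gb: float) -> list[str]:
    """Return a list of unmet requirements; an empty list means the PC qualifies."""
    problems = []
    # Match names such as "GeForce RTX 3060" or "RTX 4070 SUPER" and capture
    # the first two digits of the model number as the series.
    match = re.search(r"RTX\s*(\d{2})\d{2}", gpu_name, re.IGNORECASE)
    if match is None or match.group(1) not in SUPPORTED_SERIES:
        problems.append("GPU is not an RTX 30 or 40 series card")
    if vram_gb < MIN_VRAM_GB:
        problems.append(f"VRAM below {MIN_VRAM_GB}GB")
    if ram_gb < MIN_RAM_GB:
        problems.append(f"system RAM below {MIN_RAM_GB}GB")
    return problems


if __name__ == "__main__":
    # Example values only; substitute the specs of your own machine.
    print(meets_requirements("NVIDIA GeForce RTX 4070 SUPER", 12, 32))
```

Returning a list of problems rather than a single yes/no makes it obvious which part of the system falls short.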

It takes an hour to install the two language models, Mistral 7B and Llama 2, and together they take up just under 70GB. Once installation is complete, a command prompt window launches with an active session, and you can submit queries via a browser-based interface.

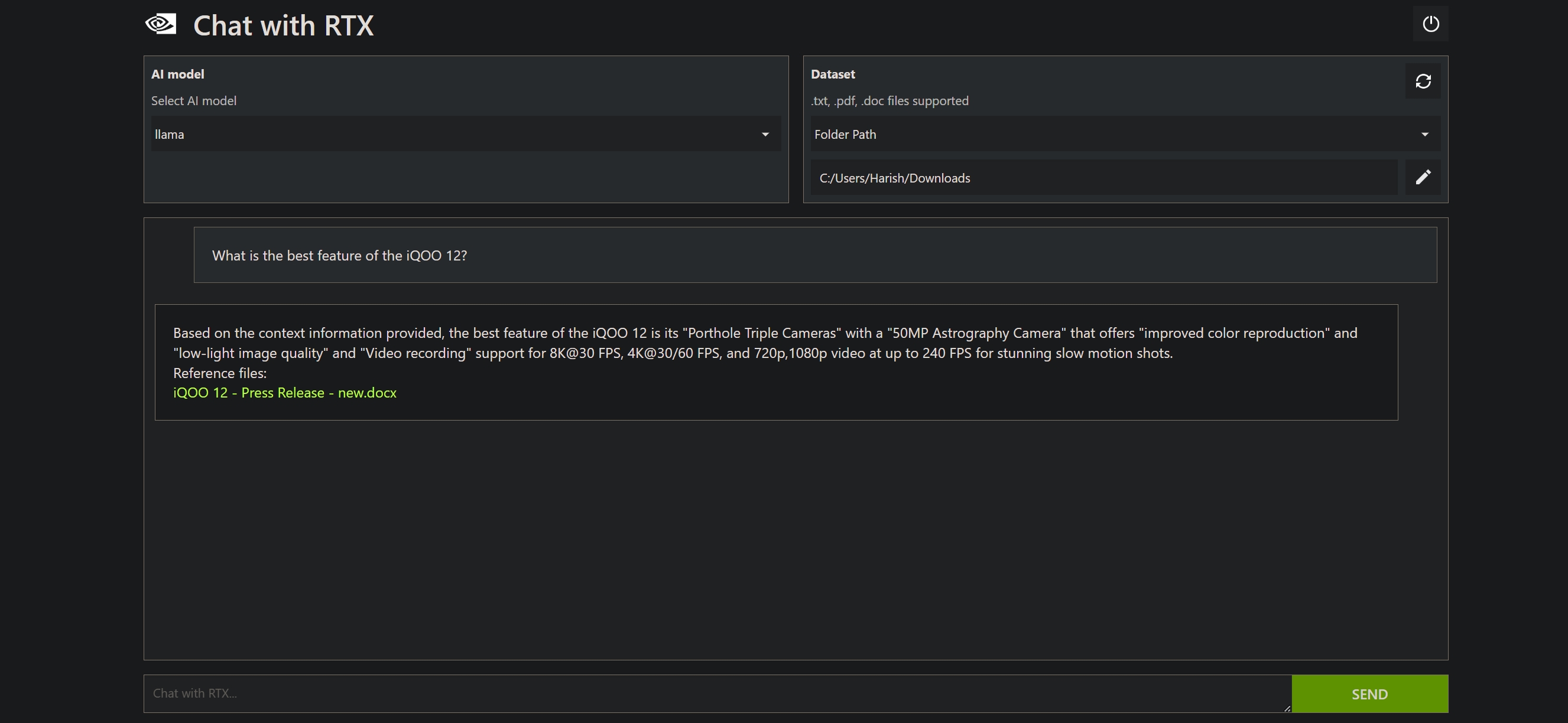

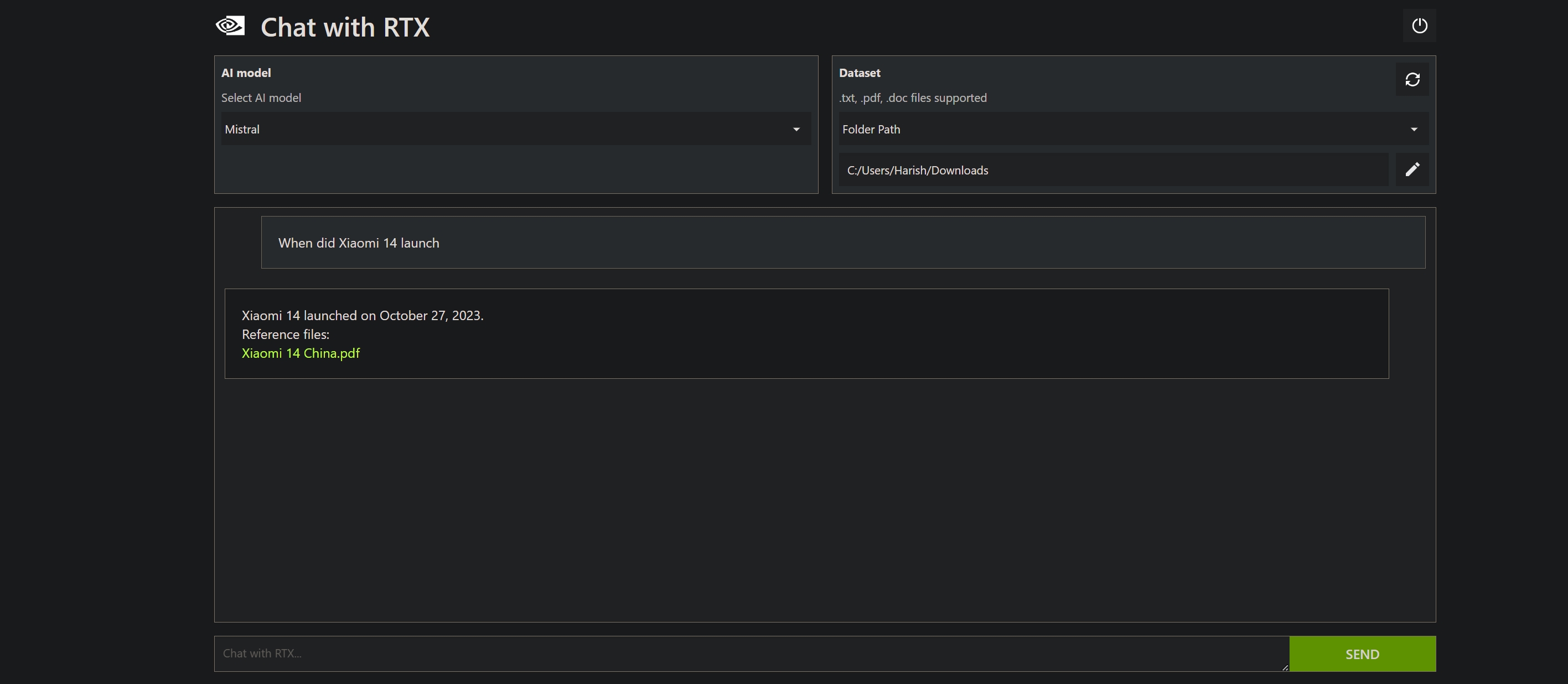

NVIDIA provides a default dataset to test Chat with RTX, but I pointed the utility at my Downloads folder, which has a few hundred press releases, review guides, and all my articles in text (.txt) format. The chatbot is able to parse PDFs, Word documents, and plain text, and as already mentioned, you can link YouTube videos and ask questions about them.
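
Before pointing the chatbot at a cluttered folder like Downloads, it can help to see how many files it could actually read. The sketch below is a rough illustration under stated assumptions: the extension list is my guess at what "PDFs, Word documents, and plain text" covers, the recursive search is a choice of this example rather than documented Chat with RTX behavior, and `find_supported_files` is a hypothetical helper.

```python
from pathlib import Path

# Assumed extensions for the formats named above (PDF, Word, plain text).
SUPPORTED_EXTENSIONS = {".pdf", ".doc", ".docx", ".txt"}


def find_supported_files(folder: str) -> list[Path]:
    """Return files under `folder` with a supported extension, sorted by path."""
    root = Path(folder)
    # rglob("*") walks subfolders too; extension matching is case-insensitive.
    return sorted(
        path
        for path in root.rglob("*")
        if path.is_file() and path.suffix.lower() in SUPPORTED_EXTENSIONS
    )


if __name__ == "__main__":
    # Example path only; replace it with the folder you plan to use.
    files = find_supported_files(str(Path.home() / "Downloads"))
    print(f"{len(files)} readable documents found")
```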

Chat with RTX does a great job summarizing details, and it works really well with targeted questions, like the launch date of a phone. There isn't a provision to ask follow-up questions at the moment, but that is likely to change in subsequent releases.

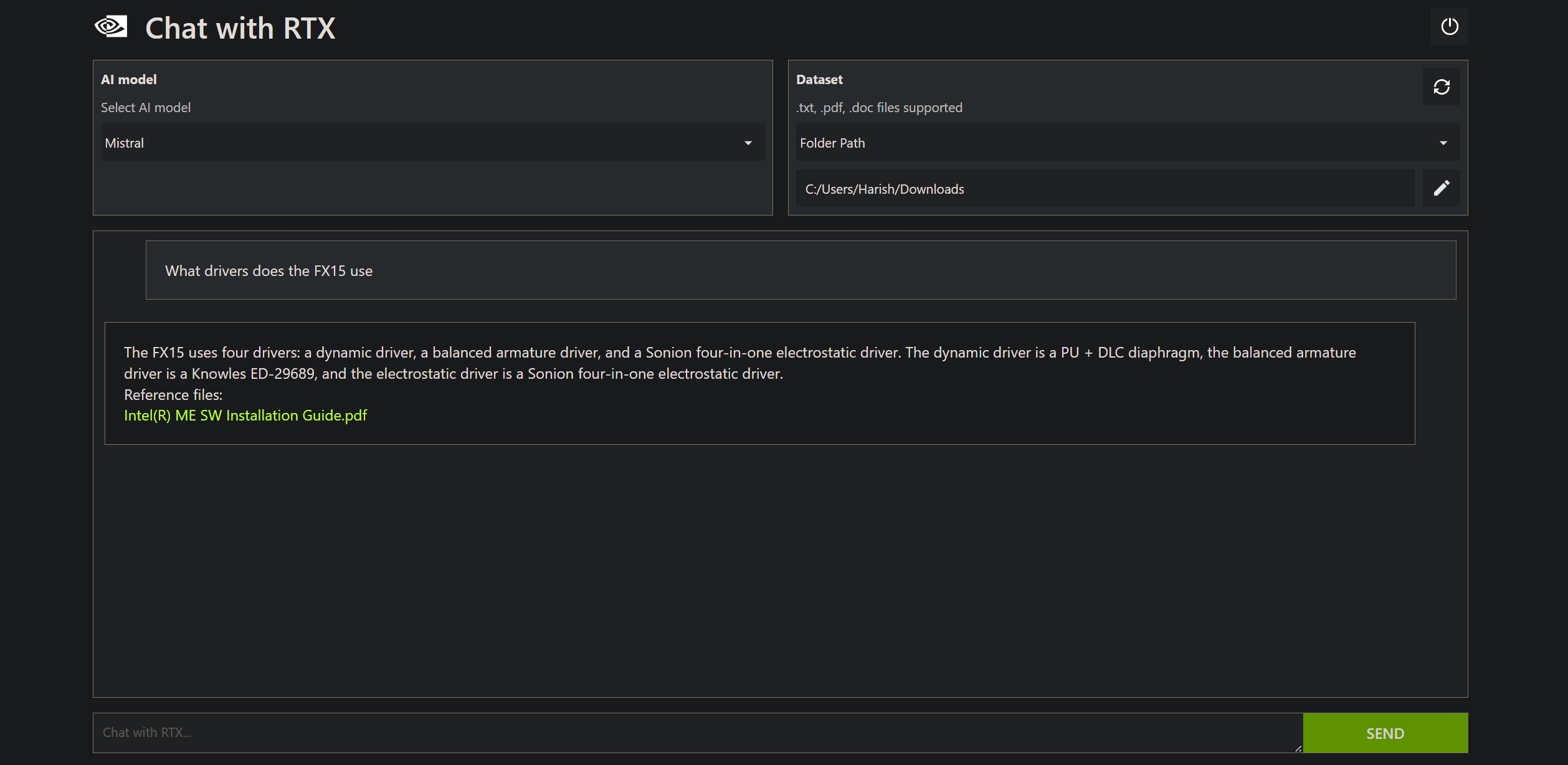

While it does a good job surfacing relevant information, it isn't without a few issues. I asked the chatbot what drivers are used in the Fiio FX15 IEM that I reviewed on Android Central. The FX15 uses a combination of three different drivers, and while the chatbot answered the query correctly, it linked to an Intel Management Engine installation document instead of the Fiio review guide where that information is present.

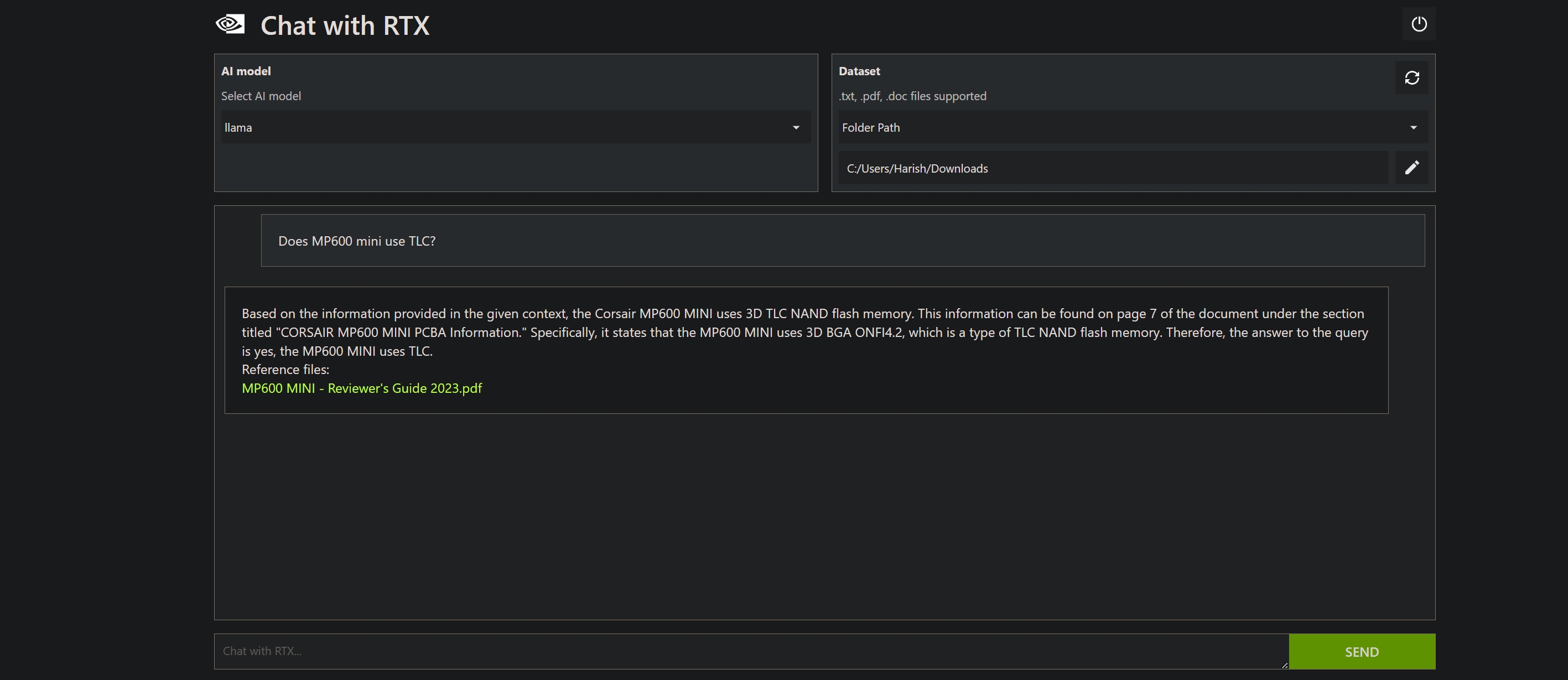

Similarly, I asked the chatbot if Corsair's MP600 Mini uses TLC storage, and it was only able to provide the correct answer the second time around (and yes, the drive has TLC storage).

Chat with RTX is still in beta, but there's clearly a lot of potential here. The ability to run an AI chatbot locally is a big deal, and it does a great job surfacing information from the data you provide. As for my own use case, it's great to have a local chatbot that can summarize press releases and highlight useful details, and that's what I'll be using Chat with RTX for going forward.

If you're intrigued by what NVIDIA is offering, you can easily install Chat with RTX on your own machine and give it a go — it may not be as powerful as ChatGPT, but the ability to use your own data is a good differentiator.

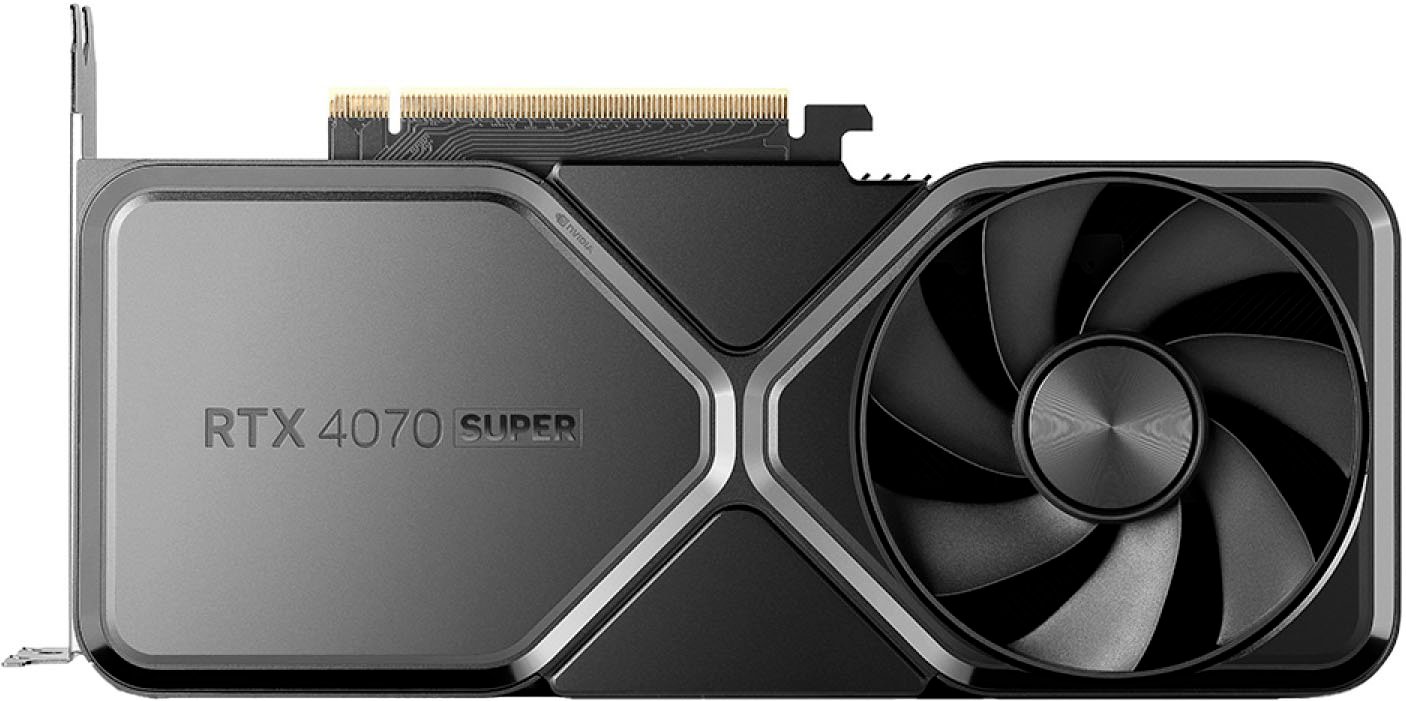

If you don't have an NVIDIA GPU, the RTX 4070 SUPER is a terrific starting point. It offers the best value in NVIDIA's portfolio, has 12GB of video memory, and holds up incredibly well at 1440p gaming.

Harish Jonnalagadda is a Senior Editor overseeing Asia for Android Central, Windows Central's sister site. When not reviewing phones, he's testing PC hardware, including video cards, motherboards, gaming accessories, and keyboards.