I tried to replace my favorite Copilot feature with Ollama — but I failed (sort-of)

Copilot is insanely useful at summarizing web pages, and despite my best attempts, I'm not as happy using local AI to do the same.

All the latest news, reviews, and guides for Windows and Xbox diehards.

One thing I've learned since having children is that time is precious. Specifically, I'm talking about the number of web pages I read on a daily basis. Be it research, reading news or reviews, or extracting specific pieces of data, when you work in this business, you're reading a lot of content.

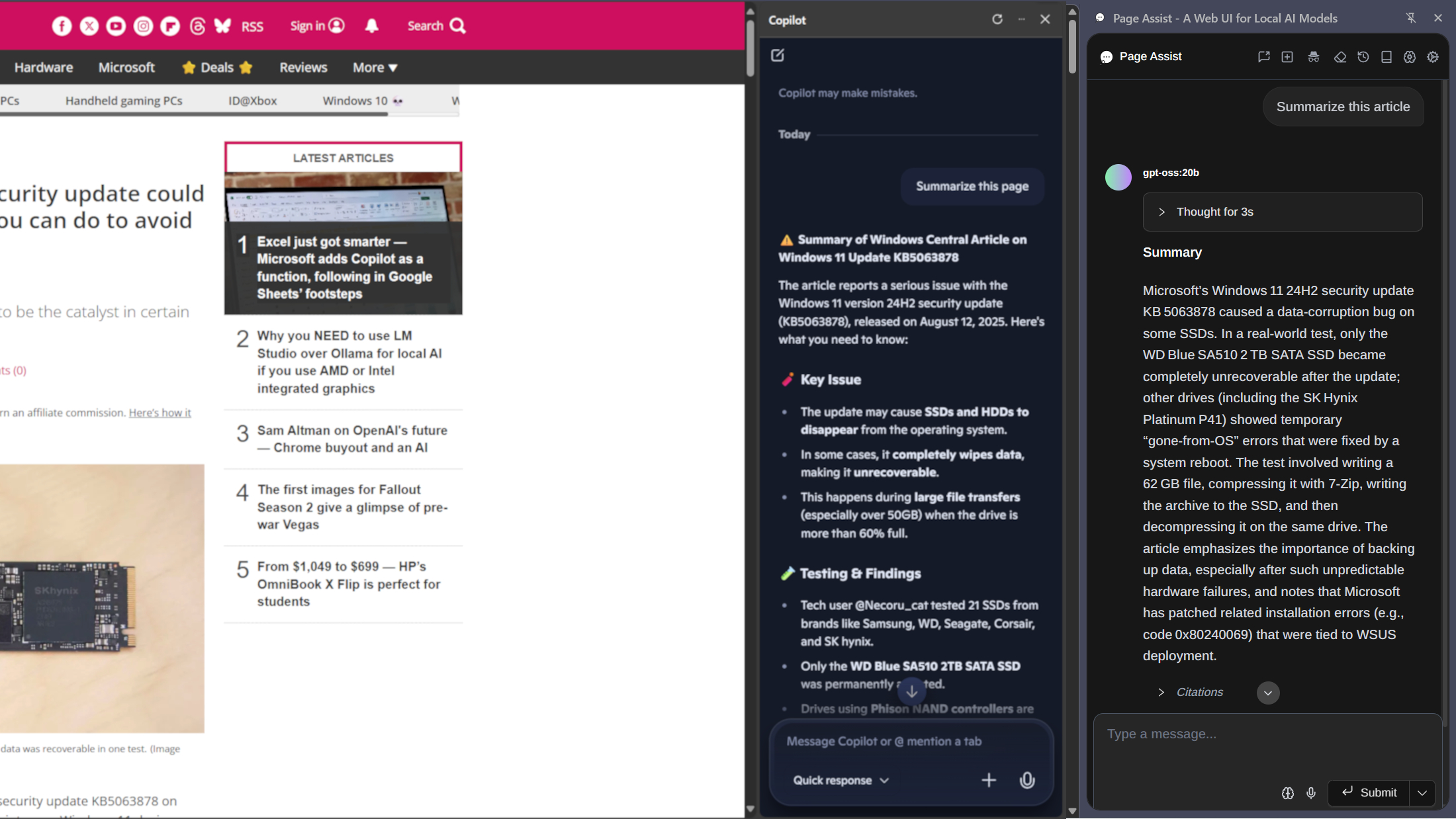

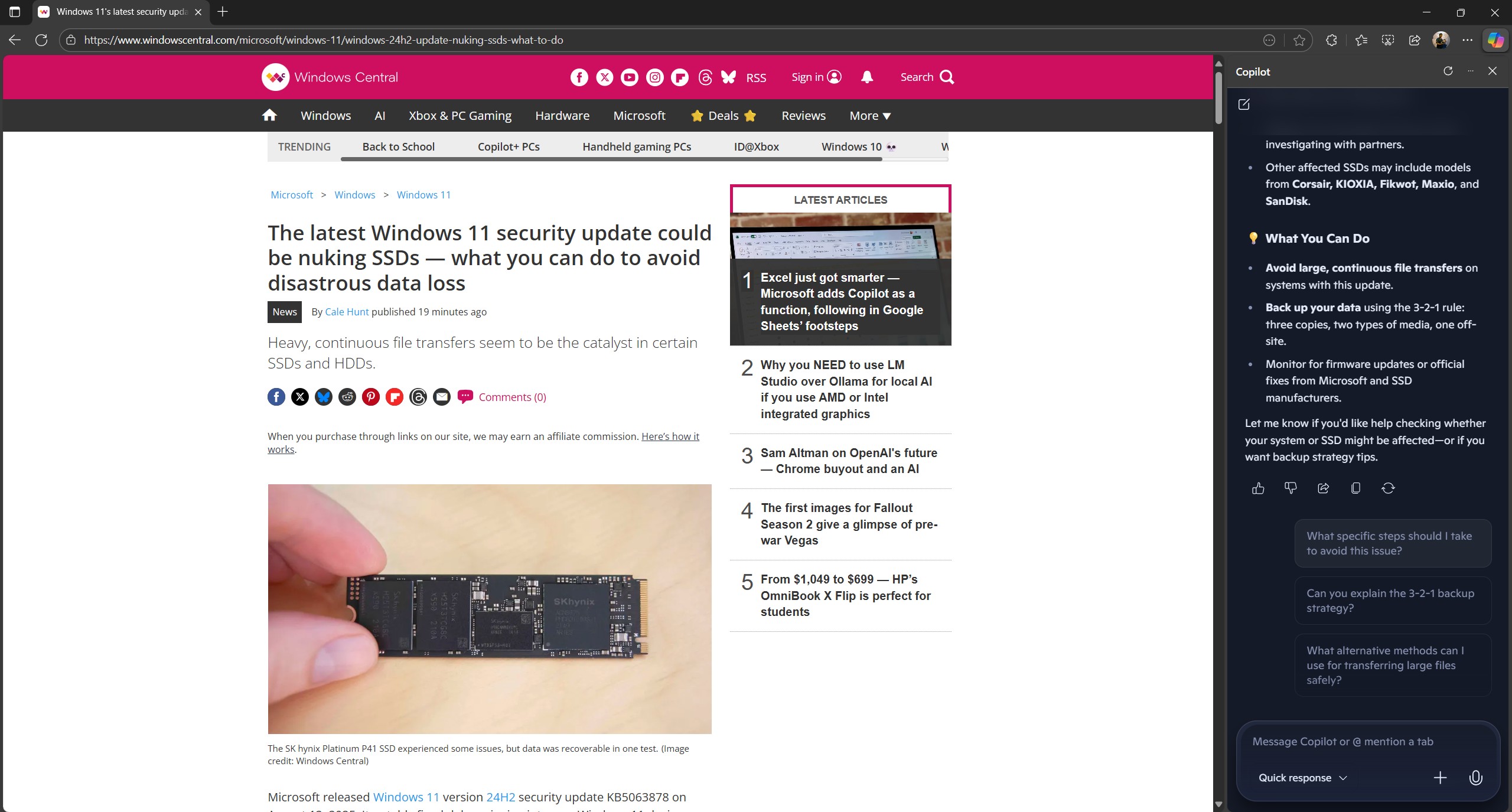

Copilot is fantastic at creating summaries, and it's been getting better and better. In the early days, I'd need to specify what I was looking for and the format I wanted the information in. Now, it just does it, and does it well.

But, I've been experimenting a lot more in recent times with local AI, such as Ollama and LM Studio, rather than relying entirely on the likes of Copilot, ChatGPT, and Google Gemini.

Many of the methods to replicate this feature seem to involve Python. While I'm not allergic to a little tinkering, for this, I just wanted to see if there was something simple and browser-based to do what I'm looking for.

The good news is that I found something. The bad news is that it's still not as good as Copilot at this task.

Ollama in the back and Page Assist in the front

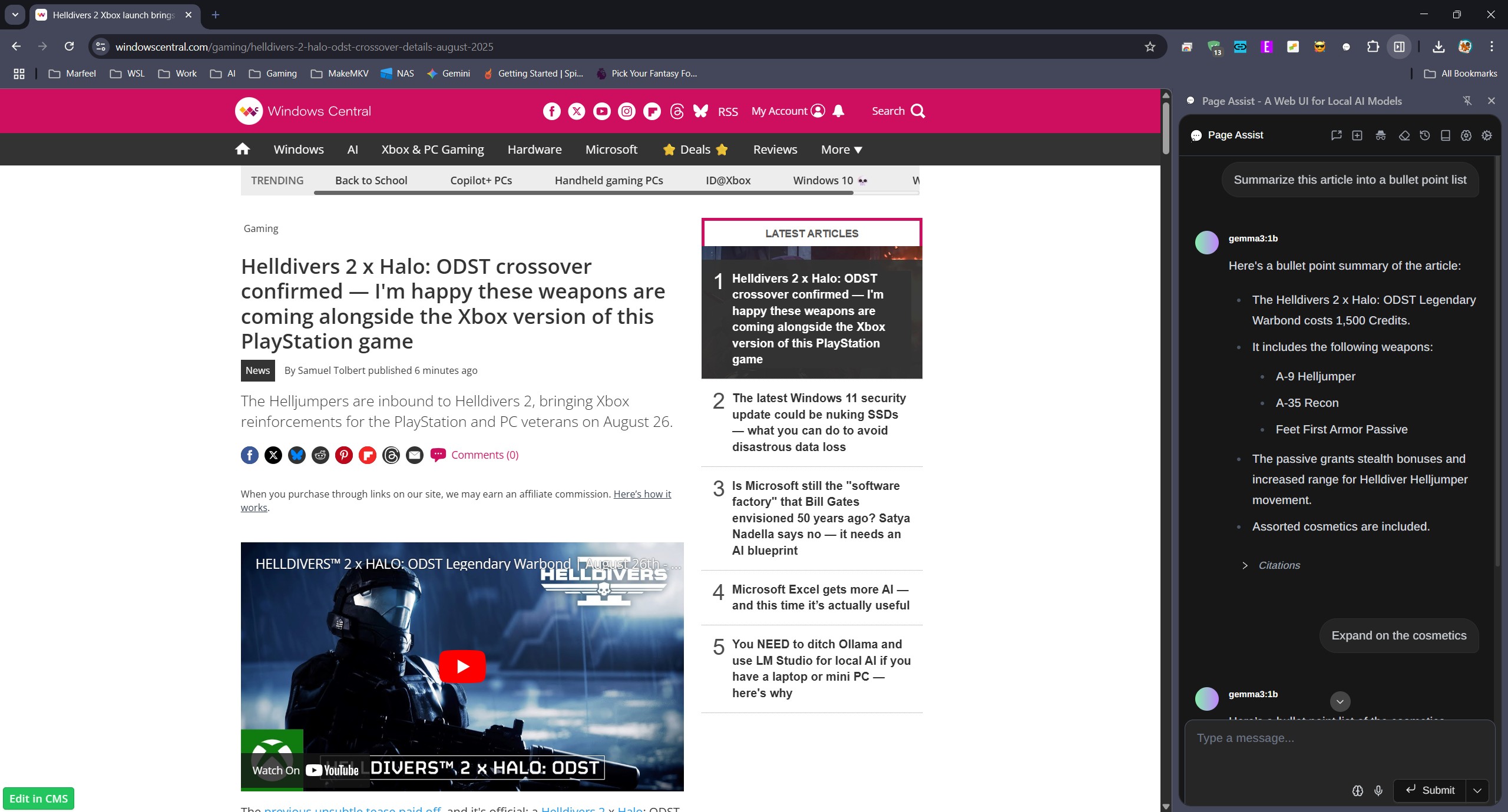

While searching for solutions, I came across Page Assist, a browser extension in the Chrome Web Store (or Microsoft Edge Addons if you prefer). It's more than just an extension, though; it's a full-featured web app that acts as a GUI frontend for Ollama.

It's extremely useful and absolutely jam-packed with features. There's probably something missing, but I haven't figured out what it might be yet. It makes interacting with and managing local LLMs an absolute breeze, and it's significantly more advanced than the official Ollama app.

The full interface isn't what I'm interested in here, though. The golden goose is the sidebar, with the ability to interact with a currently open web page. I found it!

While Page Assist will see your Ollama installation automatically, there's a little more work to do to make it talk to a web page. You need some RAG.

RAG stands for Retrieval-Augmented Generation. I'm not going to attempt to explain it because I'm still trying to get my head around it myself. There are plenty of articles out there, such as this one by IBM, that can do a better job than I ever would.
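For a rough sense of the moving parts, here is a minimal sketch of the retrieval half of RAG: split the page into chunks, turn each chunk into a vector, and pick the chunks closest to the question before handing them to the model. It is not how Page Assist is implemented. Every function name here is hypothetical, and `toy_embed` is a deliberately crude stand-in (letter counts) for a real embedding model.

```python
import math


def chunk_text(text: str, size: int = 200) -> list[str]:
    """Split text into chunks of at most roughly `size` characters, on word boundaries."""
    chunks, current = [], ""
    for word in text.split():
        if current and len(current) + 1 + len(word) > size:
            chunks.append(current)
            current = word
        else:
            current = f"{current} {word}".strip()
    if current:
        chunks.append(current)
    return chunks


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def toy_embed(text: str) -> list[float]:
    """Hypothetical stand-in for an embedding model: one letter count per a-z."""
    lowered = text.lower()
    return [float(lowered.count(ch)) for ch in "abcdefghijklmnopqrstuvwxyz"]


def retrieve(question: str, chunks: list[str], embed=toy_embed, top_k: int = 2) -> list[str]:
    """Return the `top_k` chunks whose vectors are most similar to the question's."""
    q_vec = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine_similarity(q_vec, embed(c)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Combine the retrieved chunks and the question into one prompt for the model."""
    context = "\n---\n".join(context_chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

In a real setup, `embed` would call an actual embedding model rather than counting letters, which is why one has to be installed before the side panel can talk to a page.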

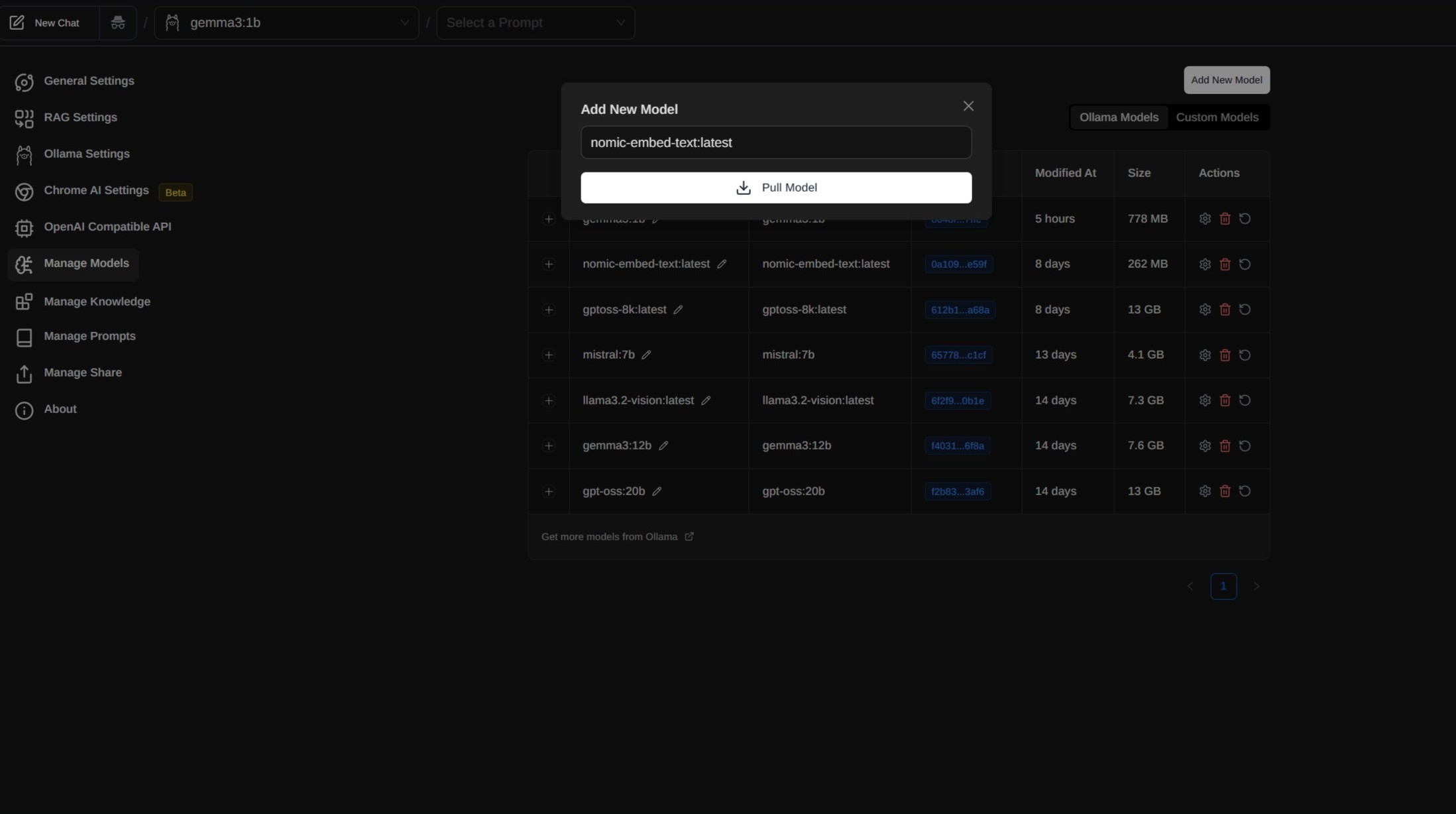

To use Page Assist to talk to a web page, we need an embedding model pulled into Ollama. The app makes it easy, recommending Nomic Embed. So that's what I did, right from Page Assist using the Manage Models menu in Settings, clicking to add a new model and entering nomic-embed-text:latest into the box.
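The same model can also be pulled outside Page Assist, either with `ollama pull nomic-embed-text` in a terminal or through Ollama's local HTTP API. The sketch below takes the API route and assumes Ollama is listening on its default address, `http://localhost:11434`. The helper names are hypothetical, and some older Ollama releases documented the request field as `name` rather than `model`.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def build_pull_request(model: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request asking Ollama to download `model`."""
    payload = json.dumps({"model": model, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/pull",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def pull_model(model: str) -> dict:
    """Send the pull request and return Ollama's final JSON status object."""
    with urllib.request.urlopen(build_pull_request(model)) as response:
        return json.loads(response.read())


# Usage (needs a running Ollama instance, so it is left commented out):
# print(pull_model("nomic-embed-text:latest"))
```

With `stream` set to `False`, Ollama replies once when the download finishes instead of sending progress updates, which keeps the example short at the cost of a silent wait.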

The only other step is figuring out how to launch the side panel. By default, it's in the right-click menu. So when you're on a page you want to talk about, right-click and open the side panel to chat.

It's good, but not AS good as Copilot

Does it actually work? Yes, it does. But it doesn't work as well as Copilot, sadly.

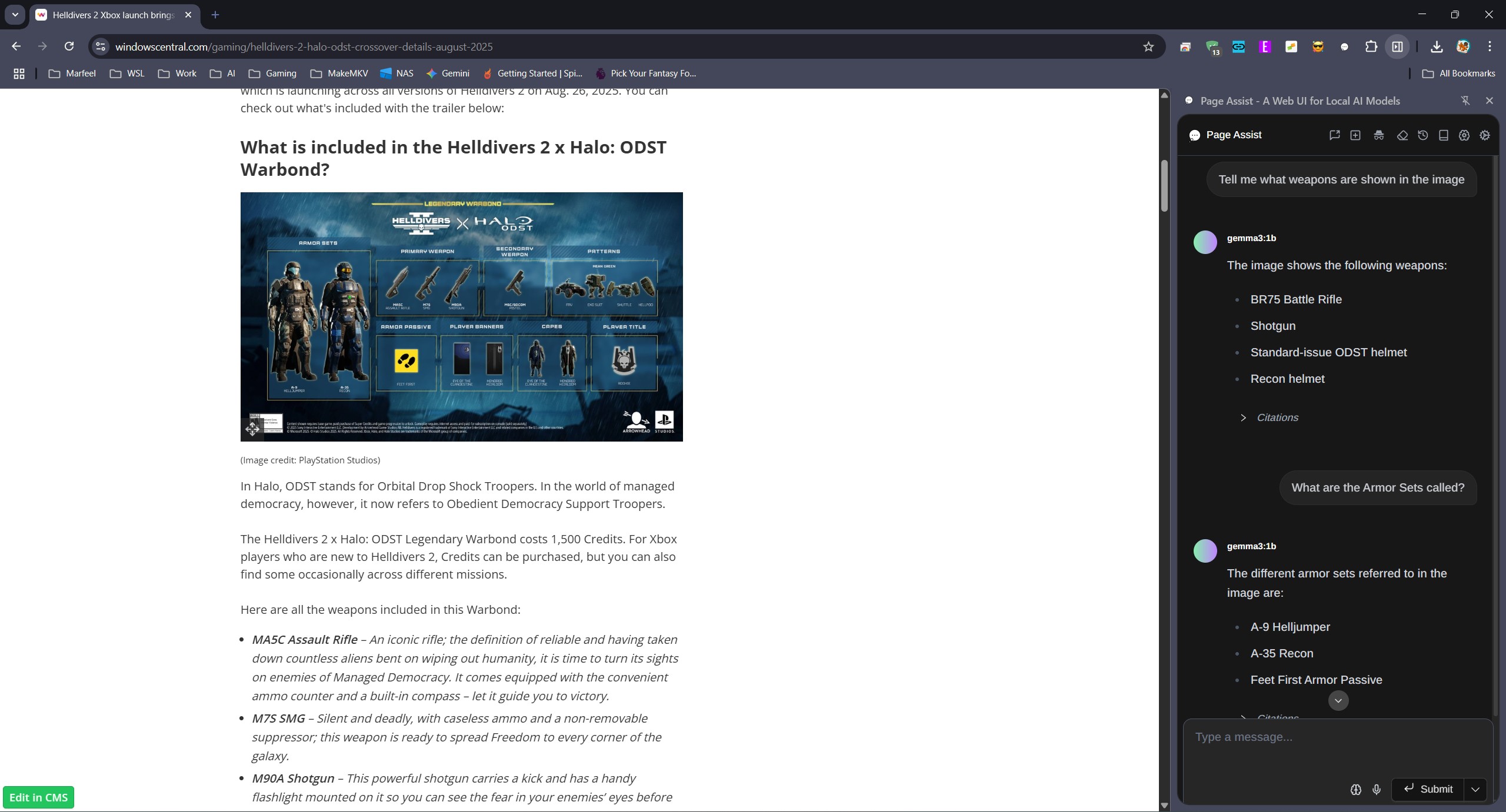

I'll start with the good. Using a variety of different models to test it out, I can indeed summarize articles. The bigger the model, the better job it does. It'd be foolish to think gemma3:1b could do as much as gpt-oss:20b. But it does what I ask it to do in summarizing web articles.

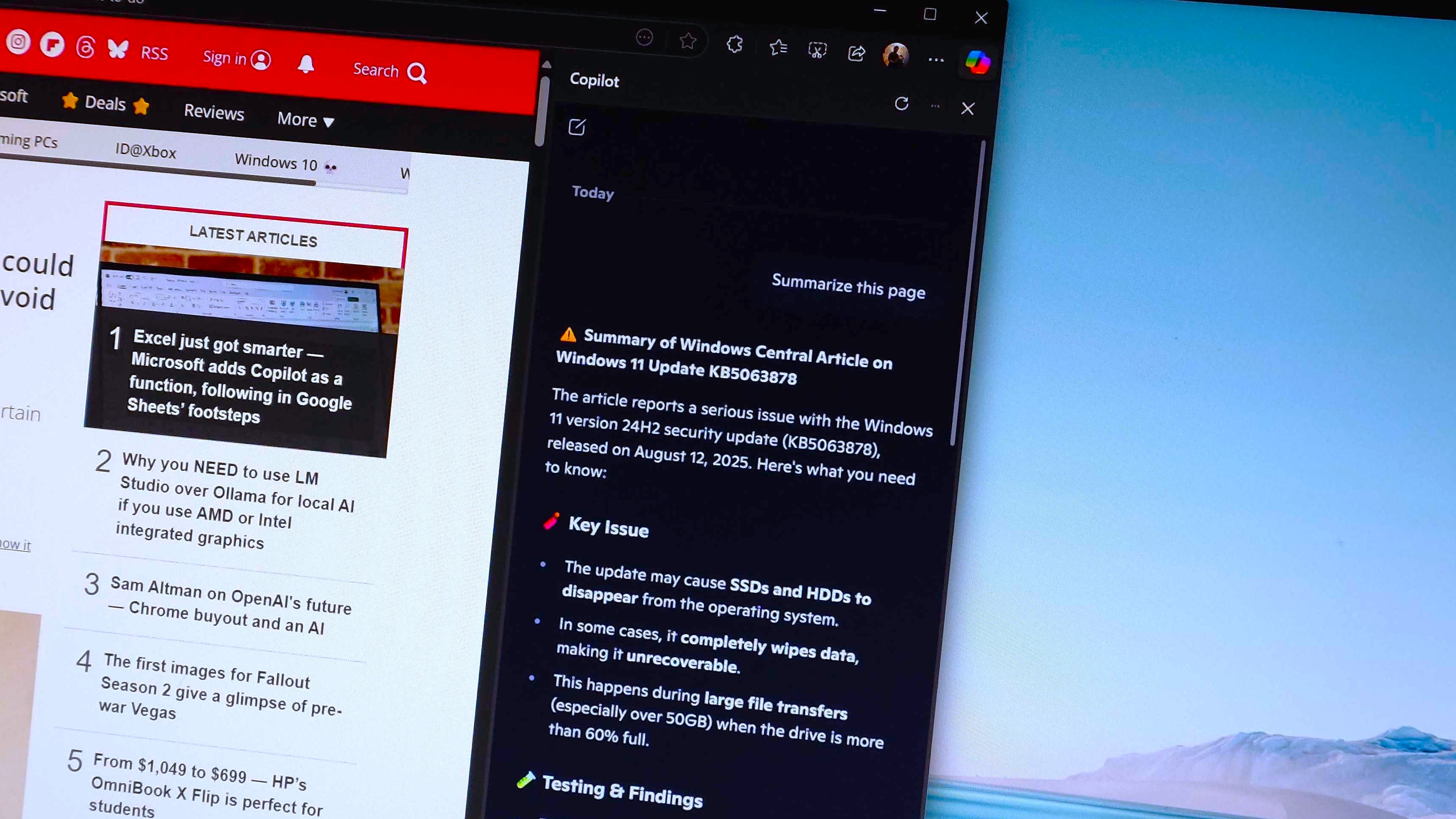

It will also use vision on images if you load a model with that capability. I probably shouldn't have used a small model, though, as you can see in the image above. But it's perfectly able to see the images, use OCR, and whatnot.

So far, so good. But the biggest problem is that it doesn't seem to recognize when you move from one web page to another. The way I work is that if I'm in speed reading mode, I leave the side panel open and just rerun the prompt as I move from page to page.

Unless I start a new chat or explicitly tell the model to forget the previous article and summarize the new one, it keeps repeating itself based on what it saw on the earlier page. It's not a dealbreaker, but it's an annoyance.
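One way a tool could avoid this is to tie the chat history to the page's URL and start over whenever the URL changes. The sketch below illustrates that idea only. It is not Page Assist's code, and the `PageChat` class and its methods are hypothetical. The list it returns has the role/content shape used by chat-style endpoints such as Ollama's `/api/chat`.

```python
class PageChat:
    """Keeps chat history for one page and clears it when the URL changes."""

    def __init__(self, system_prompt: str = "You summarize web pages."):
        self.system_prompt = system_prompt
        self.current_url = None
        self.messages = []

    def ask(self, url: str, page_text: str, prompt: str) -> list[dict]:
        """Add a user prompt and return the messages to send to the model."""
        if url != self.current_url:
            # New page: drop earlier turns so the previous article cannot leak in.
            self.current_url = url
            self.messages = [
                {"role": "system", "content": self.system_prompt},
                {"role": "user", "content": f"Page content:\n{page_text}"},
            ]
        self.messages.append({"role": "user", "content": prompt})
        return list(self.messages)  # copy, so callers cannot mutate the history

    def record_reply(self, text: str) -> None:
        """Store the model's answer so follow-ups on the same page keep context."""
        self.messages.append({"role": "assistant", "content": text})
```

Rerunning the same prompt on a new URL then begins from a clean slate, while follow-up questions on the same page still see the earlier turns.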

None of the models I've tried so far gives the same quality of breakdown as Copilot, either. Obviously, I want a summary, but Copilot just gives a better one without needing a very specific prompt to begin with.

I'm sure over time I could make something better from this, but that's work I need to do. And this is about speed and convenience. Copilot is so much more conversational, whereas these local models seem very matter-of-fact.

The other thing I like about Copilot in this instance is that it provides some follow-up questions. I don't always use them, but from time to time, a lightbulb goes off, and one of them presents me with an angle I hadn't thought of.
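A more specific prompt is one way to nudge a local model toward that style. The sketch below builds a prompt that asks for a conversational summary plus follow-up questions, then wraps it in a request body for Ollama's `/api/generate` endpoint. The wording of the prompt and both function names are illustrative assumptions, and there's no guarantee a small model will follow the instructions closely.

```python
import json


def build_summary_prompt(page_text: str, follow_ups: int = 3) -> str:
    """Return a prompt asking for a conversational summary and follow-up questions."""
    return (
        "Summarize the article below in a friendly, conversational tone.\n"
        "Start with a two-sentence overview, then list the key points as bullets.\n"
        f"Finish with {follow_ups} follow-up questions a curious reader might ask.\n\n"
        f"Article:\n{page_text}"
    )


def build_generate_payload(model: str, page_text: str) -> str:
    """Return the JSON body for a non-streaming /api/generate request."""
    return json.dumps(
        {"model": model, "prompt": build_summary_prompt(page_text), "stream": False}
    )
```

Saving a prompt like this once and reusing it would claw back some of the convenience, though it's still setup work that Copilot doesn't ask for.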

A case where I think I might stick with online AI

There are some good reasons to use local AI over online equivalents. However, in this instance, I have to be online to read the web pages I want summarizing, anyway. So as long as Copilot works as well as it does, there's no reason not to continue using it.

I do still encourage folks to have a look into local AI, though. A big part of it right now, personally, is education. Every day I'm learning something new.

In pursuit of what I've discussed in this article, I've also found a new favorite way to use Ollama. Page Assist is brilliant, and I'll probably write more about it in the future, after I've spent more time diving into its features.

But when I'm working, I think I'll stick to the online tools for this task. At least for now.

Richard Devine is the Managing Editor at Windows Central with over a decade of experience. A former Project Manager and long-term tech addict, he joined Mobile Nations in 2011 and has been found in the past on Android Central as well as Windows Central. Currently, you'll find him steering the site's coverage of all manner of PC hardware and reviews. Find him on Mastodon at mstdn.social/@richdevine
