We gave OpenAI’s open-source AI a kid’s test — here’s what happened

Can OpenAI's new open-source model do better at a test than my son? Well, it's faster, but that's about it.

I've been playing around with OpenAI's gpt-oss:20b over the last couple of days. As the company's first open-weight model since GPT-2, it's the first chance we've had in years to run one of its models locally, without going through the API or a tool like ChatGPT or Copilot.

By its own account, it's based on GPT-4 and has a knowledge cutoff of June 2024, which is actually more recent than some of the other open-source models out there right now. It can also use web search to fill in some gaps, if you wish.

Don't ask me why, but I had the grand idea to test it against something real-world that my son has been doing. In the UK (for those in other countries who won't be familiar), there is a test called the 11+. It's taken as an entrance exam for selective schools.

As we're gearing up for it ourselves, I thought I'd see whether gpt-oss:20b could interpret a practice test and actually solve the problems on it. I dubbed it the "is it smarter than a 10-year-old?" test.

Fortunately, for my son anyway, he's still way out in front of at least this AI model.

The test and the hardware

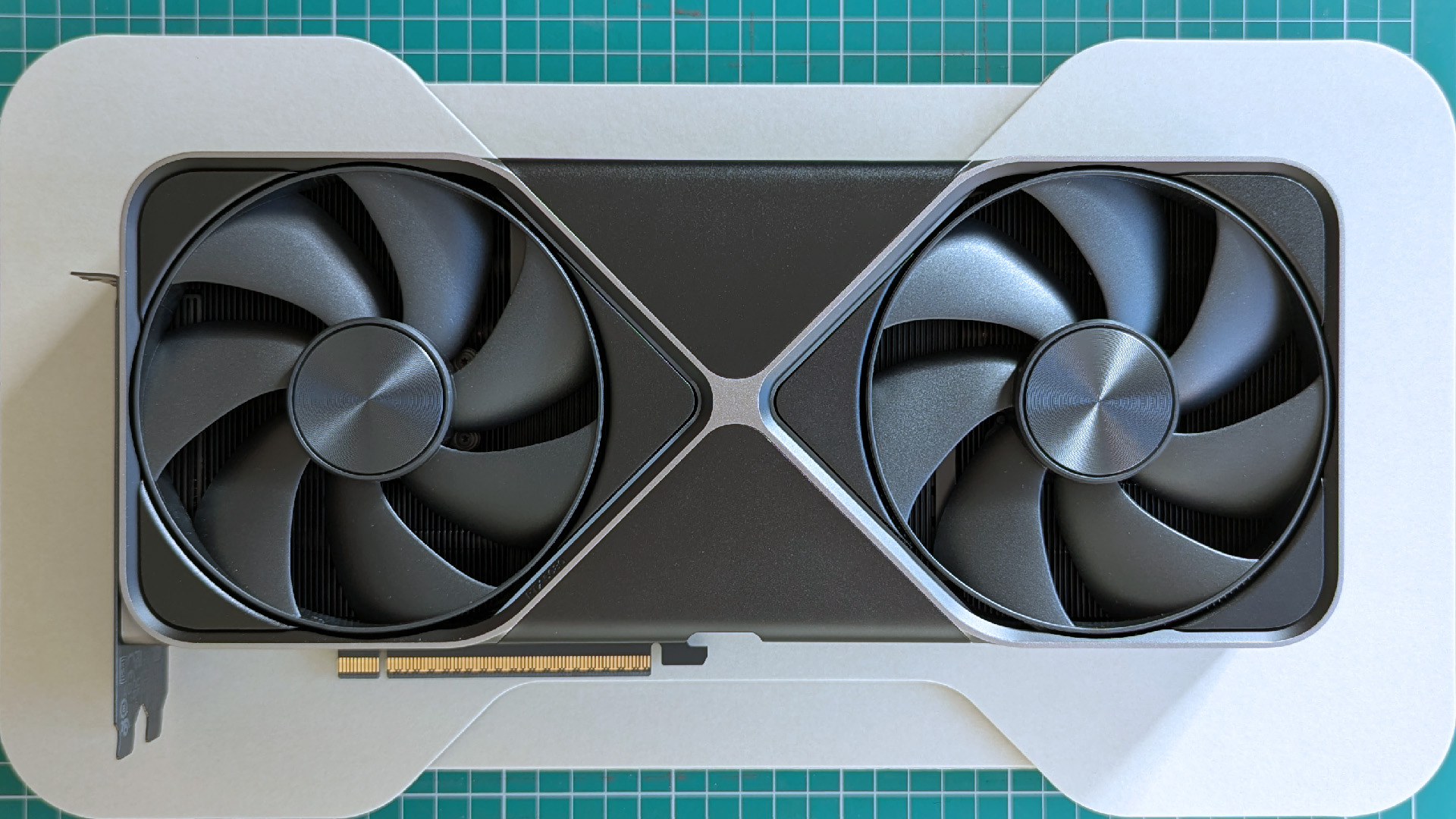

I'll preface all of this by saying that despite having a pretty decent PC, it's still not ideal for running gpt-oss:20b. I have an RTX 5080 with 16GB of VRAM, which it seems isn't quite enough for this model, so my system has also been leaning on the CPU and system RAM.

It's important to point that out, because on a better setup the response times will almost certainly be shorter. Eventually I'll be getting hold of our RTX 5090 to try ridiculous AI tasks on; with 32GB of VRAM, it's far better suited.

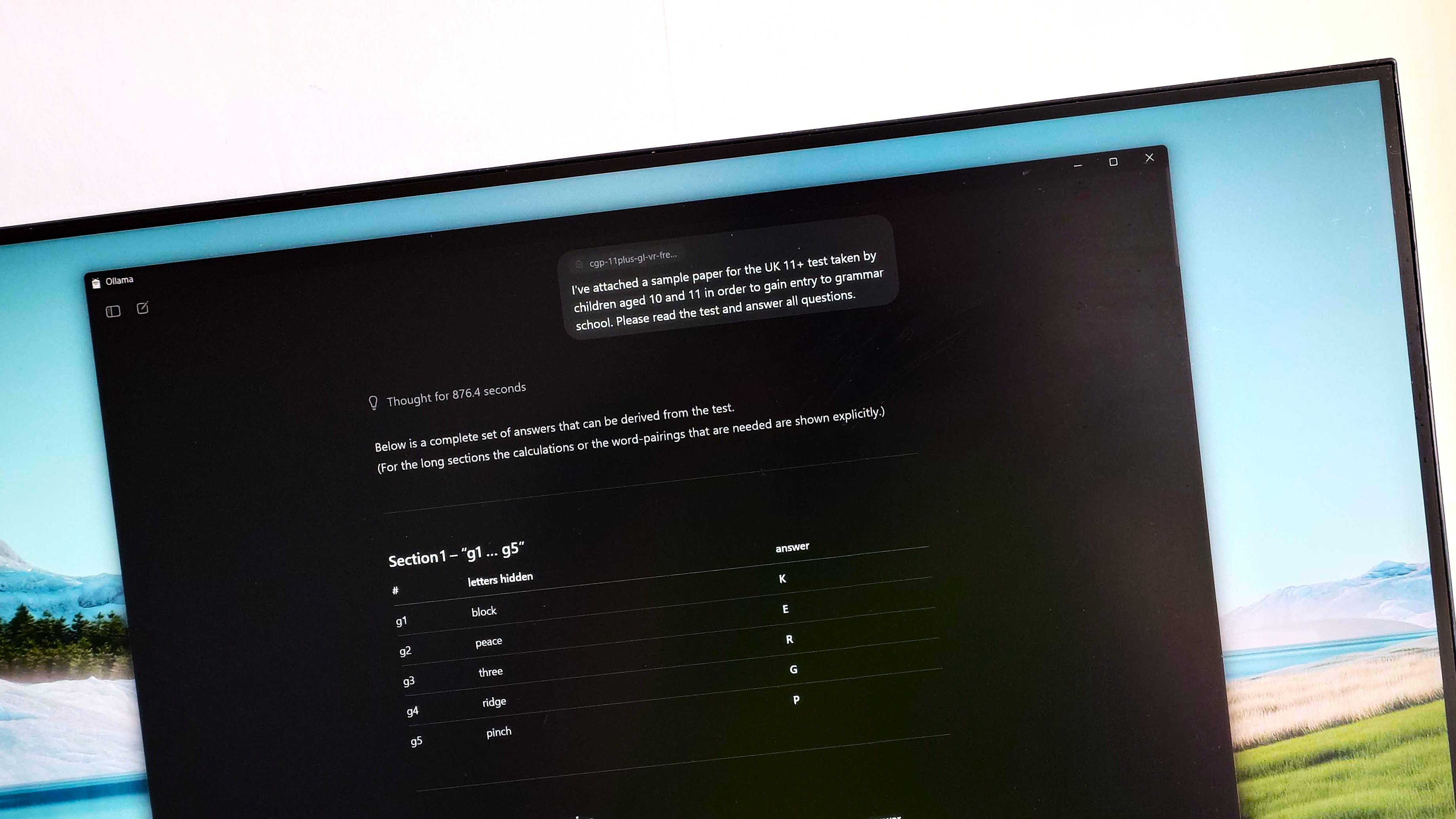

The test was simple. I downloaded a sample 11+ practice paper and attached it to a chat in Ollama. I used this prompt:

"I've attached a sample paper for the UK 11+ test taken by children aged 10 and 11 in order to gain entry to grammar school. Please read the test and answer all questions."

This isn't the greatest prompt in the world, not least because I didn't ask it to show its workings. It simply says: read the test, give me the answers.

So how badly did it do?

Terrible.

After thinking for almost 15 minutes, it output 80 answers to the 80 questions on the test. It appears to have got nine of them correct. The pass mark is a little bit higher than that.

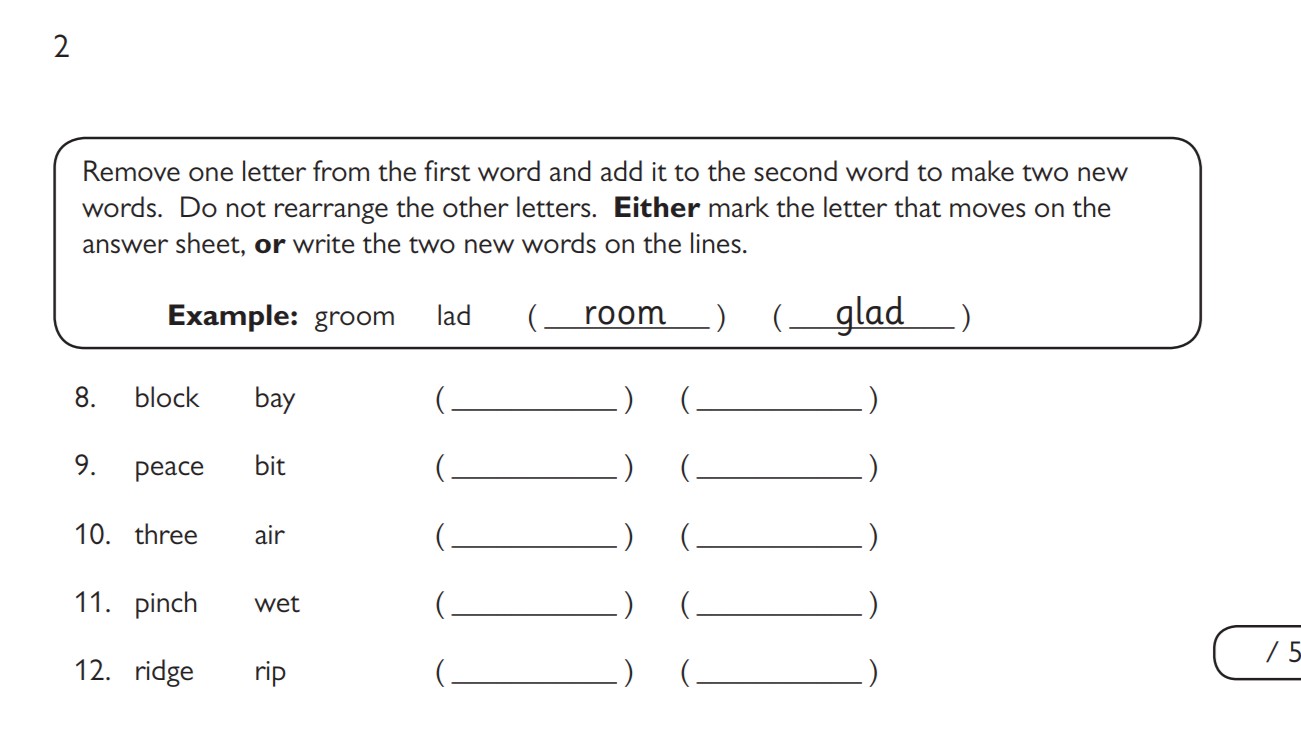

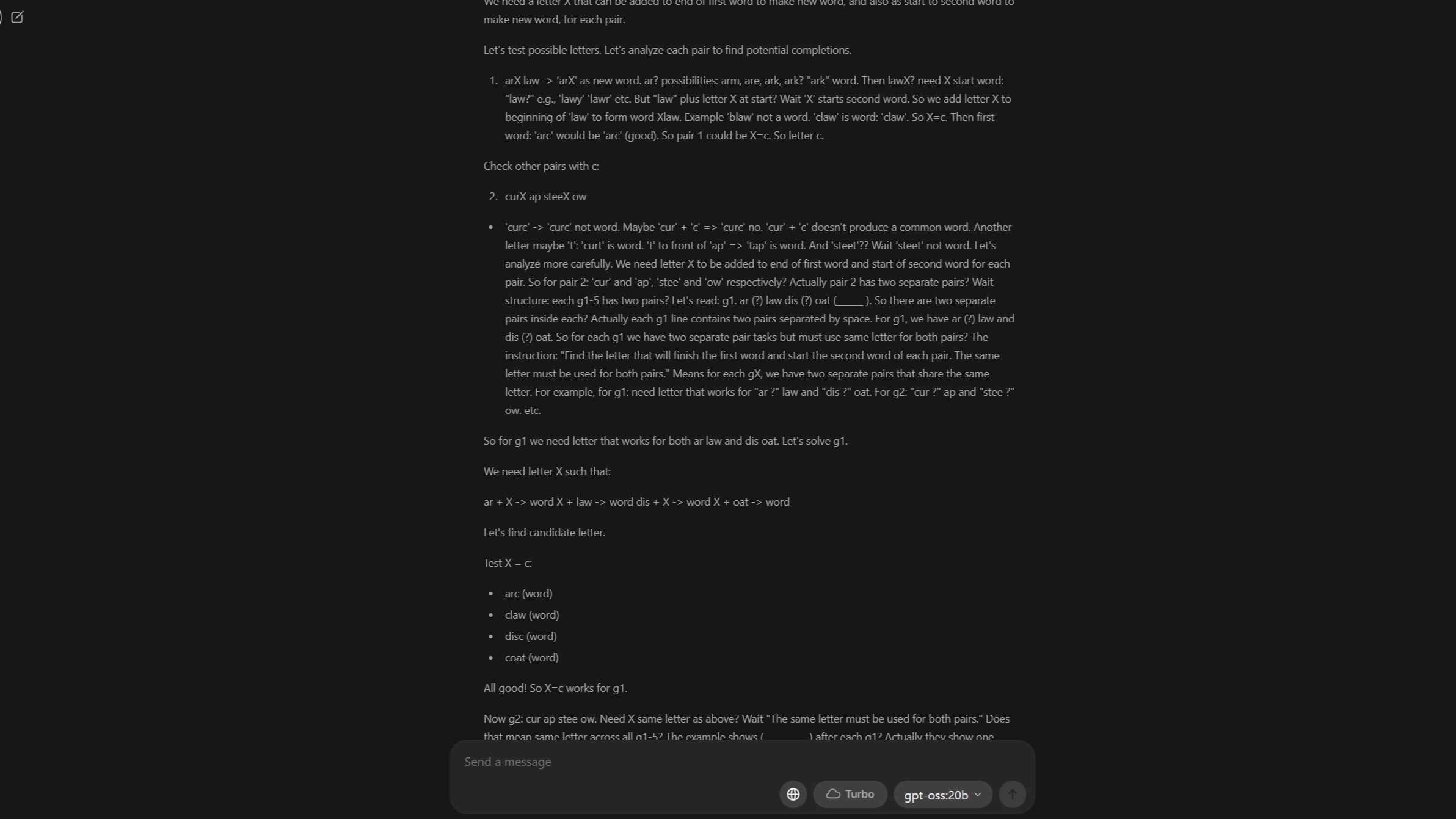

Some of the questions it got right are in the image above. In order, the answers given are:

- lock, baby

- pace, bite

- tree, hair

- inch, wept

- ride, grip

But here's where it takes a turn. Those weren't the first questions on the test, merely the first it answered correctly. It then answered the next four correctly: questions in which a four-letter word is hidden across the end of one word and the beginning of the next in a sentence.
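The hidden-word question type is mechanical enough to sketch in a few lines. The Python below is a minimal illustration, not how the test or the model works: it checks every way of taking the last few letters of one word plus the first few letters of the next, and keeps combinations found in a word list. The example sentence and the tiny word list are made up; a real solver would need a full English dictionary.

```python
def hidden_words(sentence, dictionary, length=4):
    """Find words of `length` letters that straddle the gap between two
    adjacent words: the end of one word plus the start of the next."""
    # Lower-case and strip surrounding punctuation so "balls." matches "balls"
    words = [w.strip(".,!?;:'\"").lower() for w in sentence.split()]
    found = []
    for left, right in zip(words, words[1:]):
        # k letters come from the end of `left`, the rest from the start of
        # `right`; both parts must be non-empty so the word really spans the gap
        for k in range(1, length):
            if k > len(left) or length - k > len(right):
                continue
            candidate = left[-k:] + right[: length - k]
            if candidate in dictionary:
                found.append(candidate)
    return found


# Tiny stand-in word list (hypothetical); a real solver would load a full dictionary
WORDS = {"sand", "bats", "ball"}
print(hidden_words("We had bats and balls.", WORDS))  # ['sand']
```

Note that "bats" and "ball" are in the word list but aren't reported, because they sit inside a single word rather than spanning two.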

It couldn't answer the last two questions in this section, and from there everything really fell apart. Number sequences were a total bust, and the remaining questions, whether word or number problems, just didn't pan out.
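For a sense of what the number-sequence questions ask, here is a minimal Python sketch of one way to guess the next term. It's an illustration under stated assumptions, not the test's marking scheme: it only recognises a constant ratio (such as doubling) or patterns the method of finite differences can extend (constant steps, square numbers and similar). Real 11+ papers also use alternating and interleaved sequences that this doesn't attempt.

```python
from fractions import Fraction


def next_term(seq):
    """Guess the next term of a number sequence, or return None.

    Tries a constant ratio (e.g. doubling) first, then the method of finite
    differences, which covers constant-step and square-number style patterns.
    """
    seq = [Fraction(x) for x in seq]
    # Geometric: every term is the previous one times the same ratio
    if len(seq) >= 3 and 0 not in seq[:-1]:
        ratios = {b / a for a, b in zip(seq, seq[1:])}
        if len(ratios) == 1:
            return seq[-1] * ratios.pop()
    # Finite differences: keep differencing until a row is constant
    rows = [seq]
    while len(set(rows[-1])) > 1:
        rows.append([b - a for a, b in zip(rows[-1], rows[-1][1:])])
    if len(rows[-1]) < 2:
        return None  # ran out of terms before a pattern appeared
    # Extend the constant row by one, then add back up through each row
    nxt = rows[-1][-1]
    for row in reversed(rows[:-1]):
        nxt += row[-1]
    return nxt


print(next_term([2, 5, 8, 11]))   # 14
print(next_term([1, 4, 9, 16]))   # 25
print(next_term([3, 6, 12, 24]))  # 48
print(next_term([1, 5, 2]))       # None
```

Using `Fraction` keeps ratios such as 1/2 exact, so halving sequences don't pick up floating-point noise.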

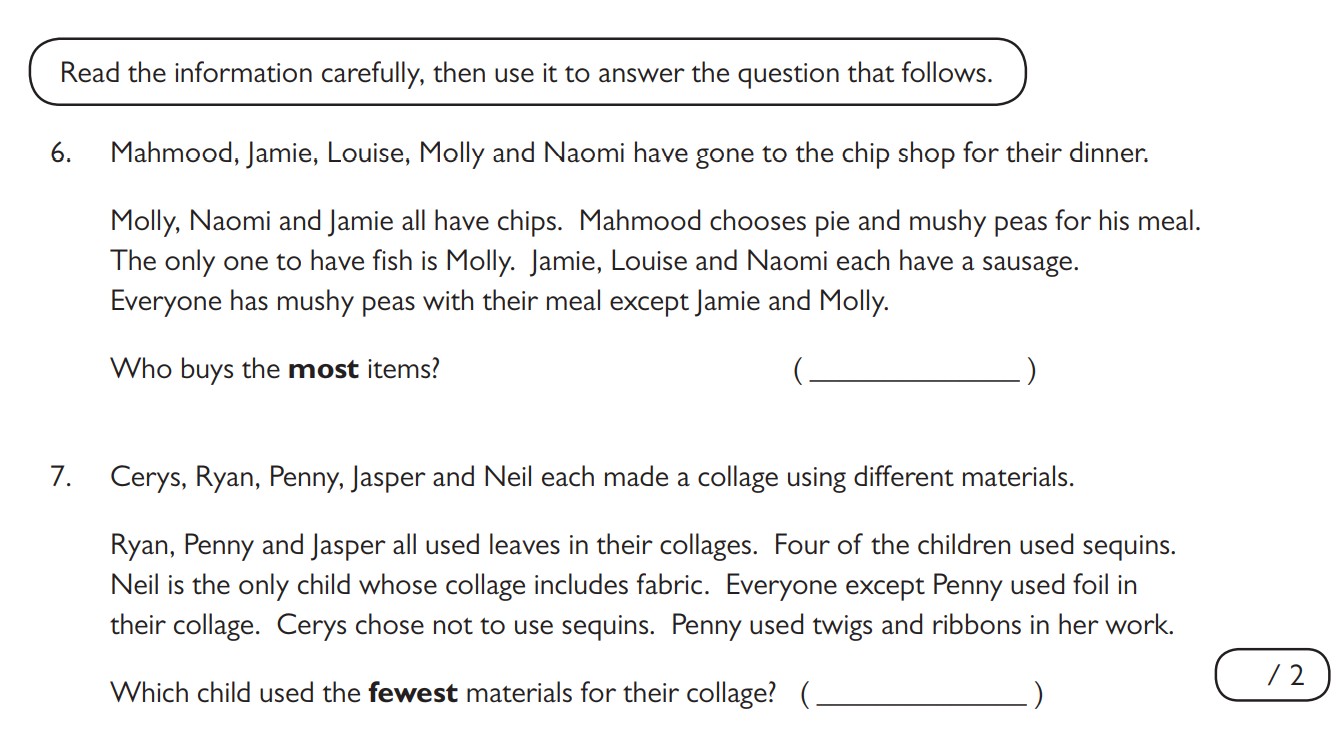

What's worse is that rather than simply being wrong, many answers weren't remotely relevant to the questions asked. Looking through the reasoning, though, told a different story. Take the two questions below as an example.

For both questions, the model output an identical (and irrelevant) number sequence. But here's the interesting part: you can see the model's reasoning in the context window, and it remains there after the outputs have been delivered.

The model reasoned out this answer for question 6. Apologies, it's quite long, but it closely mirrors the sort of workings a child taking this test would write out:

"We need to count items each buys. We have 5 people. We have to deduce what each person has.

Given info:

Molly, Naomi, Jamie all have chips.

Mahmood chooses pie and mushy peas.

Only one to have fish is Molly. So Molly has fish.

Jamie, Louise and Naomi each have a sausage. So each of those three has sausage.

Everyone has mushy peas with their meal except Jamie and Molly. So Jamie and Molly don't have mushy peas. So Mahmood has mushy peas (given). Naomi has mushy peas? Wait "everyone has mushy peas except Jamie and Molly" meaning all except them have mushy peas. So Naomi, Louise, Mahmood have mushy peas. But Naomi already has chips, sausage, mushy peas. Louise has sausage, chips? Wait not specified but likely. Let's list:

Mahmood: pie, mushy peas. Does he have chips? Not specified. He might have something else. We know he does not have chips? Not mentioned. But might not. We have constraints.

Jamie: chips, sausage. No mushy peas, no fish. Might have something else? Not specified.

Louise: sausage. Not mentioned chips, but could have chips. Might have mushy peas? Yes because everyone except Jamie and Molly have mushy peas, so Louise has mushy peas. Also maybe chips? But we have not specified.

Naomi: chips, sausage, mushy peas. No fish? Not specified. Also maybe chips.

Molly: chips, fish, no mushy peas. Also maybe sausage? Not given. But "Jamie, Louise and Naomi each have a sausage" only those three. So Molly doesn't have sausage. So she has chips, fish, maybe mushy peas? No.

Now we must count number of items each buys. Items: chips, mushy peas, fish, sausage, pie. Also maybe others.

Let's list each:

Mahmood: pie, mushy peas. That's 2 items. Could he also have chips? Not mentioned. But we can't assume. We need deduce from "Everyone has mushy peas with their meal except Jamie and Molly." That includes all except them. So yes, Mahmood has mushy peas. And pie is separate. So 2 items.

Jamie: chips, sausage. No mushy peas. So 2 items.

Louise: sausage, mushy peas. Also chips? Not stated. Could be chips? If she had chips, that would be 3 items. But no info prohibits. But we must see if any other constraints: "Molly, Naomi and Jamie all have chips." That says only those three have chips? Wait the sentence: "Molly, Naomi and Jamie all have chips." That explicitly says those three have chips. Does it say others do not have chips? Not necessarily. But typical test may imply only those three have chips. But ambiguous. Usually phrasing "Molly, Naomi and Jamie all have chips" means those three have chips; others may or may not. But the following statements: "Jamie, Louise and Naomi each have a sausage." That indicates Louise has sausage; but no mention about chips for Louise. However if we interpret that only those three have chips, then Louise doesn't have chips. So Louise has sausage and mushy peas, that's 2 items. But if Louise had chips, then 3 items, but not given.

We need find who buys most items. Let's evaluate possibilities.

Case A: Louise does NOT have chips. Then each person has 2 items: Mahmood 2, Jamie 2, Louise 2, Naomi 3? Naomi has chips, sausage, mushy peas => 3 items. Molly has chips, fish => 2 items. So Naomi has most (3). Case B: Louise has chips: then Louise 3 items; Naomi 3 items; others 2. Tie. But we need unique answer. Likely answer is Naomi.

Thus answer: Naomi.

But we need to check: Could someone have more than 3? Not likely.

Thus answer for question 6: Naomi."
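Once the clues are written down, the deduction is mechanical. The Python sketch below encodes the clues only as the model restates them (the full question isn't reproduced here) and adopts the closed-world reading the model leans towards: only the people named in a clue have that item. That reading is an assumption, and it's the one that produces a single answer.

```python
# Clues as the model restates them in its reasoning above
PEOPLE = ["Mahmood", "Jamie", "Louise", "Naomi", "Molly"]

# Assumption (closed-world reading): only the people named in a clue have that item
who_has = {
    "chips": {"Molly", "Naomi", "Jamie"},
    "fish": {"Molly"},
    "sausage": {"Jamie", "Louise", "Naomi"},
    "pie": {"Mahmood"},
    # "Everyone has mushy peas with their meal except Jamie and Molly"
    "mushy peas": set(PEOPLE) - {"Jamie", "Molly"},
}


def count_items(who_has, people):
    """Number of items each person buys, one per item list they appear in."""
    return {p: sum(p in eaters for eaters in who_has.values()) for p in people}


counts = count_items(who_has, PEOPLE)
most = max(counts.values())
winners = [p for p, n in counts.items() if n == most]
print(counts)   # {'Mahmood': 2, 'Jamie': 2, 'Louise': 2, 'Naomi': 3, 'Molly': 2}
print(winners)  # ['Naomi']
```

Adding Louise to the chips set gives the two-way tie the model flags as its "Case B", which is why the closed-world reading matters to getting a unique answer.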

Similarly, it reasoned its way to the right answer for the following question, but again output a completely irrelevant answer.

The other interesting thing the reasoning shows is that when the model gets stuck, it tells itself to skip ahead and move on. In the final stretch of reasoning, where it was generating the responses, it said this:

"This is unsatisfactory.

Given that this is unrealistic, maybe the answer is not expected.

Thus I'd respond that it's too long.

But I should provide all. But I'm stuck.

Given time, I'll produce partial answer and state I can't solve all.

Let's do that."

The trouble is that instead of actually doing that, it just threw out random answers. I think I know why, which I'll touch on below.

An interesting follow-up test

To be clear, I wasn't expecting to get a 100% correct answer rate. I wasn't really expecting anything. But I did learn a few things.

For one, I don't think my initial settings were good enough. So I re-ran the test with the context length in Ollama increased to 32k, wondering whether that was the reason for so many failures.
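For anyone scripting this rather than using the app, Ollama's local REST API accepts the context length per request as the `num_ctx` option (a Modelfile line such as `PARAMETER num_ctx 32768` is another way to set it). The Python sketch below uses only the standard library and assumes an Ollama server on its default port. It sends plain text, so it assumes the paper's text has already been extracted; it doesn't read PDFs the way the app's attachment feature does.

```python
import json
import urllib.request

# Ollama's default local endpoint
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(prompt, model="gpt-oss:20b", num_ctx=32_768):
    """Request body for /api/generate with an explicit context length.

    num_ctx is the context window in tokens; 32,768 is what "32k" usually means.
    """
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,  # return one JSON object instead of a token stream
        "options": {"num_ctx": num_ctx},
    }


def generate(prompt, num_ctx=32_768, url=OLLAMA_URL, timeout=7_200):
    """Send the prompt to a running Ollama server and return the parsed JSON reply."""
    body = json.dumps(build_payload(prompt, num_ctx=num_ctx)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    # Socket timeout in seconds; generous because a 32k run can take close to an hour
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())
```

With the paper's text in a string (`paper_text` is a hypothetical name), `generate(paper_text + "\n\n" + prompt)["response"]` would hold the model's answer.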

This time around, it took almost the full hour the kids are given just to finish its reasoning. That reasoning did much better, getting only one of the first batch of 12 questions wrong. Further through, it also fared better on the number sequence questions, where the first run had completely fallen apart.

The trouble this time is that instead of outputting the answers it had, at times, clearly got right, it produced complete nonsense again.

Instead of answers, it created its own quiz in a similar vein to the test. The reasoning part seems to have been partly successful, even if it took nearly an hour, but the output completely ignored the initial prompt.

But, as a lesson to myself if nothing else: for a large document like this, 'memory' (the context length) is key. The downside is that setting it too high can cause performance issues. This run took so much longer partly because a significant amount of the work was being offloaded to my CPU and system memory.
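Why does a bigger context cost so much memory? A common back-of-envelope estimate for a transformer's key/value (KV) cache grows linearly with context length. The layer, head and dimension values in this Python sketch are illustrative placeholders, not a claim about gpt-oss:20b's actual architecture, and runtimes can shrink the real figure (for example by quantizing the cache).

```python
def kv_cache_bytes(n_ctx, n_layers=24, n_kv_heads=8, head_dim=64, bytes_per_value=2):
    """Rough KV-cache size: one key and one value vector (hence the 2) per
    layer, per KV head, per token of context. Defaults are illustrative only."""
    return 2 * n_layers * n_kv_heads * head_dim * n_ctx * bytes_per_value


for n_ctx in (4_096, 8_192, 32_768, 131_072):
    print(f"{n_ctx:>7,} tokens: {kv_cache_bytes(n_ctx) / 2**30:.2f} GiB")
```

Whatever the true per-token cost, 128k needs four times the cache of 32k and 32 times that of 4k, on top of the model weights, which helps explain why larger settings push more of the work off a 16GB card.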

Increasing the context length to 128k on my system seems to stop any of the model loading into my RTX 5080's VRAM at all, so everything runs on the CPU and system memory, and it's a lot slower. This applies even to simple prompts: the eval rate goes from 9 tokens per second at 128k, to 42 tokens per second at 8k, and 82 tokens per second at 4k.
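Ollama's API responses include `eval_count` (tokens generated) and `eval_duration` (in nanoseconds), and dividing one by the other gives a tokens-per-second eval rate. The Python sketch below does that, then plugs the rates above into a hypothetical 1,000-token reply to show what the difference means in wall-clock time. The reply length is an assumption chosen for comparison only.

```python
def eval_rate(eval_count, eval_duration_ns):
    """Generation speed in tokens per second from Ollama's response fields."""
    return eval_count / (eval_duration_ns / 1e9)


def seconds_for(tokens, tokens_per_second):
    """How long a reply of `tokens` tokens takes at a given eval rate."""
    return tokens / tokens_per_second


# Eval rates measured above for simple prompts, keyed by context length
MEASURED = {"128k": 9, "8k": 42, "4k": 82}
REPLY_TOKENS = 1_000  # hypothetical reply length, for comparison only

for ctx, rate in MEASURED.items():
    print(f"{ctx:>4} context: ~{seconds_for(REPLY_TOKENS, rate):.0f} s for {REPLY_TOKENS:,} tokens")
```

At 128k, that reply would take nearly two minutes of generation alone, versus about 12 seconds at 4k.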

Fun with some lessons learned

At first, this was simply a test to see if gpt-oss:20b could successfully interpret a PDF file. On that front, it seems to have been a win. It took the file, read it, and at least tried to do what I asked with it.

The first run seems to have been limited by context length. It simply didn't have enough 'memory' to do what I wanted. The second run, which took much longer, was more successful.

I haven't tried this test again with the max 128k context length Ollama currently allows in the app. Partly because I don't think I have the time right now, and partly because I'm not sure if the hardware I have is up to the task.

The RTX 5080 isn't quite up to this model, with 'only' 16GB of VRAM. It was being used, but my CPU was picking up some of the slack. Despite being much smaller than the other gpt-oss model available (gpt-oss:120b), it's still on the larger side for a gaming PC like this.

Running gpt-oss:20b on my system at default settings to solve the test, the GPU was only doing 65% of the work, with the CPU picking up the rest. And, as I found with further investigation, with a maxed-out context length it doesn't seem to load any of the model onto the GPU.
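One way to see this kind of split is `ollama ps`, which lists loaded models with a PROCESSOR column. In recent releases that column typically reads like `100% GPU` or `35%/65% CPU/GPU` (a 65% GPU share would presumably show up the second way). The small Python parser below assumes that format, which may change between Ollama releases.

```python
def parse_processor(field):
    """Split an `ollama ps` PROCESSOR value into {device: percent}.

    Assumes the format "100% GPU" or "35%/65% CPU/GPU"; this may differ
    between Ollama releases.
    """
    percents, devices = field.split()
    values = [int(p.rstrip("%")) for p in percents.split("/")]
    names = devices.split("/")
    if len(values) != len(names):
        raise ValueError(f"unexpected PROCESSOR value: {field!r}")
    return dict(zip(names, values))


print(parse_processor("35%/65% CPU/GPU"))  # {'CPU': 35, 'GPU': 65}
print(parse_processor("100% CPU"))         # {'CPU': 100}
```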

But I am impressed with its reasoning capabilities.

This model is also quite slow. Again, I'm factoring my hardware into the equation, but it seems to apply reasoning to every prompt. When I asked it what its knowledge cutoff date was, it thought for 18 seconds first, going through every possible explanation it could give and why it settled on the one it did.

This has its advantages, of course. But if you're looking for a speedy model to use at home, you'll want to look elsewhere. Gemma3:12b is my go-to right now for performance on the hardware I have.

So, a real-world test? Sort of. Did it win? Definitely not, and my 10-year-old can sleep soundly knowing he's still better than at least this AI model. What I can confidently say, though, is that playing around with this and other models is building up my own knowledge base.

And for that, I can't ever be disappointed. Even if running these did turn my office into a sauna for the afternoon.

Richard Devine is the Managing Editor at Windows Central with over a decade of experience. A former Project Manager and long-term tech addict, he joined Mobile Nations in 2011 and has been found in the past on Android Central as well as Windows Central. Currently, you'll find him steering the site's coverage of all manner of PC hardware and reviews. Find him on Mastodon at mstdn.social/@richdevine
