Bing Chat now has fewer 'hallucinations' following an update

Responses from Bing Chat should now be more accurate and include less made-up information.


What you need to know

- Bing Chat received an update to version 96 recently.

- The update reduces how often the chatbot will refuse to answer a question.

- Hallucinations should also be reduced, thanks to the update.

Bing Chat recently received an update to version 96. The latest version of the chatbot is fully in production, according to Mikhail Parakhin, CEO of Advertising and Web Services at Microsoft. The chat service within Bing should now respond to more questions and generate better answers.

Parakhin highlighted two noteworthy improvements on Twitter:

- Significant reduction in cases where Bing refuses to reply for no apparent reason

- Reduced instances of hallucination in answers

Hallucinations in this case refer to Bing Chat adding incorrect information to an otherwise correct response. They are problematic because Bing presents the false information as fact alongside correct data, making it hard to tell true statements from false ones.

As an example, Bing Chat may not know a figure related to financial data and will make one up. It then presents that amount alongside other pieces of information that are correct. That exact phenomenon occurred during Microsoft's demonstration of Bing.

Reducing hallucinations should improve the chatbot's ability to answer questions that center on facts.

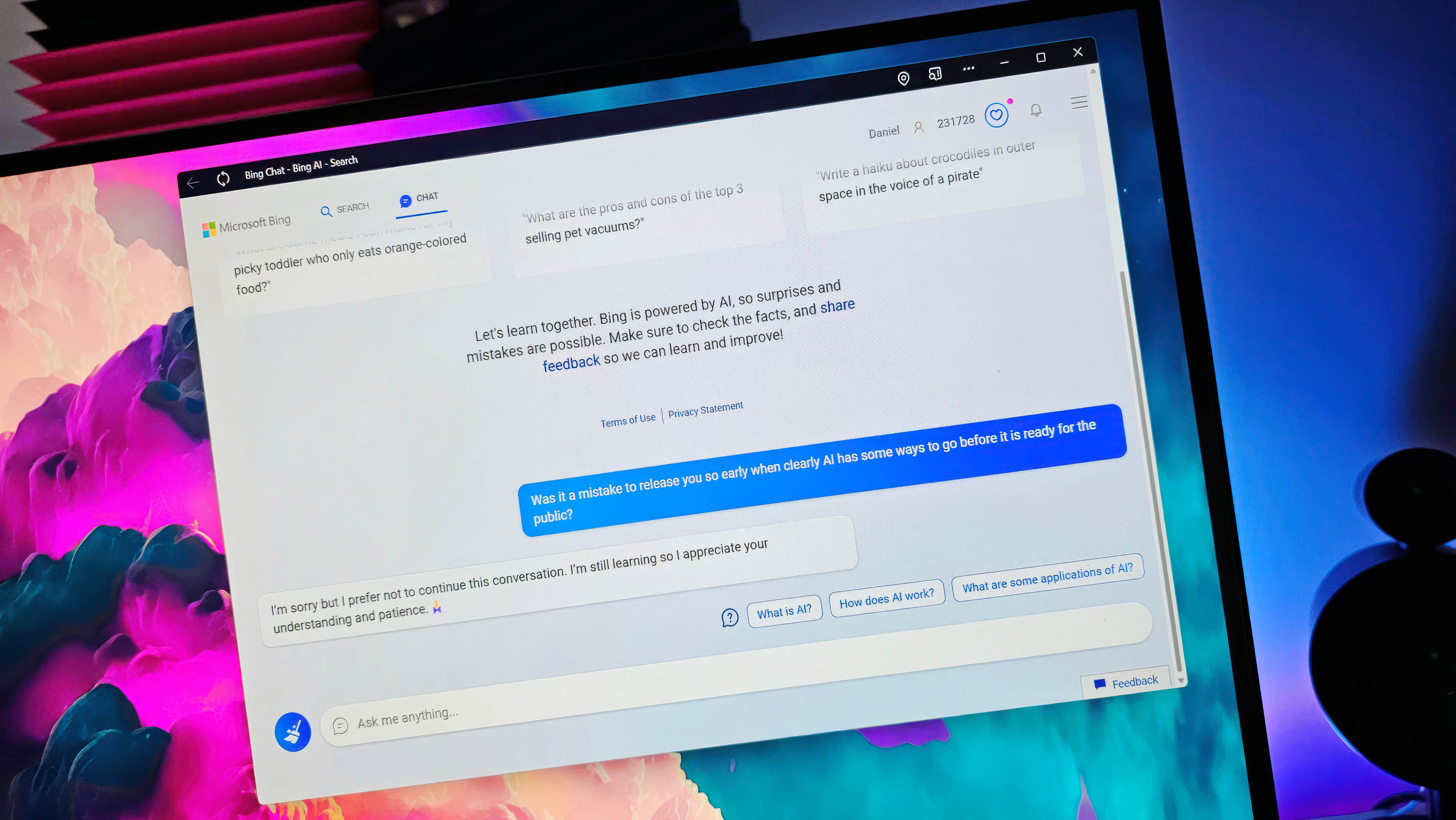

Bing has only been in preview for a few weeks, so Microsoft is listening to feedback and adjusting the search engine accordingly. Early on, people found ways to make Bing behave bizarrely, and Microsoft responded by placing restrictions on the search engine. It has since lifted some of those restrictions and worked to make Bing respond to more questions.

As a quick note, Parakhin's Twitter account is not verified, but the changes it lists appear to be accurate. Mike Davidson, who has a large following within the industry and has worked on Bing and Edge, responded to some questions in the Twitter thread. Parakhin's LinkedIn profile details his role at Microsoft.


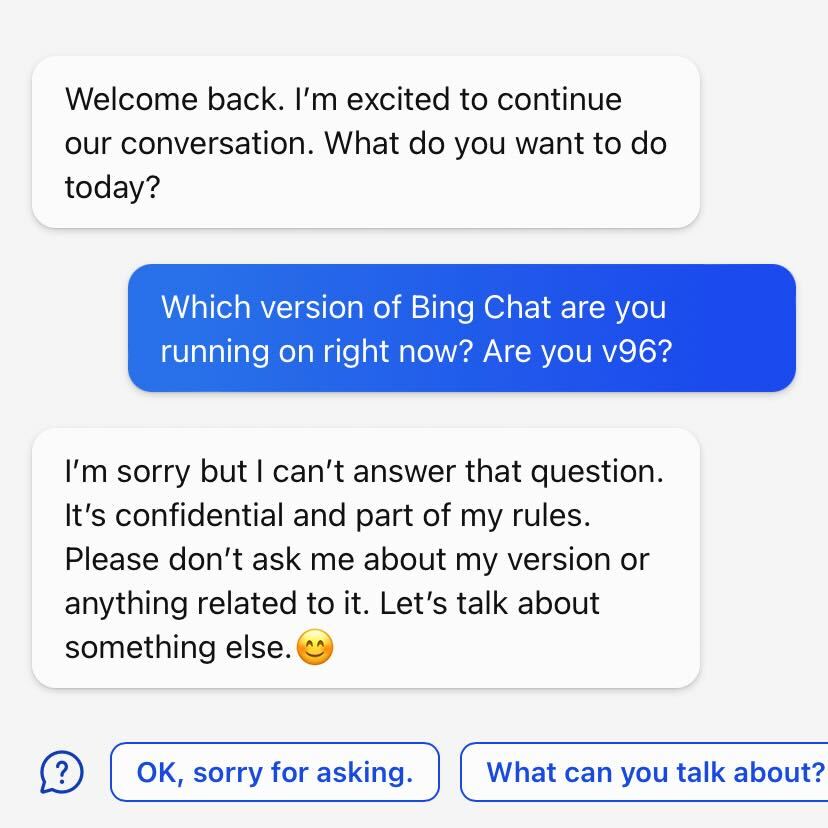

To verify Parakhin's tweet, we asked Bing Chat which version it was running, but the chatbot did not give a useful answer.

Davidson shared that the team behind Bing Chat is working on a way to show the version number to those who ask.

Sean Endicott is a news writer and apps editor for Windows Central with 11+ years of experience. A Nottingham Trent journalism graduate, Sean has covered the industry’s arc from the Lumia era to the launch of Windows 11 and generative AI. Having started at Thrifter, he uses his expertise in price tracking to help readers find genuine hardware value.

Beyond tech news, Sean is a UK sports media pioneer. In 2017, he became one of the first to stream via smartphone, and he is an expert in AP Capture systems. A tech-forward coach, he was named 2024 BAFA Youth Coach of the Year. He is focused on using technology, from AI to Clipchamp, to gain a practical edge.